bomonike

Overview

- Why Terraform?

- Topics

- Secure Learning Tools and Ecosystem

- Terraform Usage Workflow Stages, Automated

- 1) Install base tools/utilities

- 2) Task Template to Install Utilities Locally

- 4) Obtain cloud credentials and network preferences

- 5) Get sample Terraform code

- AWS

- The calling shell script

- Add-on for Consul

- Azure

- 4) Terraform project conventions

- 6) Code cloud resources in HCL

- Links to Certification Exam Objectives

- Terraform vs. AWS Cloud Formation

- Installation options

- Configure Terraform logging

- Install Utilities

- Issues to look for

- Install Security Scanners

- Known-bad IaC for training

- Standard Files and Folders Structure

- variables.tf (vars.tf)

- main.tf

- Multi-cloud/service

- Credentials in tfvars

- .gitignore

- Upgrading Terraform version

- Reusable Modules

- Testing Terraform

- terraform init

- Terraform CLI Commands

- Terraform show

- Terraform apply

- Saving tfstate in S3 Backend

- Workspaces

- VPC Security Group

- Provisioners

- CDK for Terraform

- Create SSH key pair

- Atlantis on Terraform

- Social

- Rock Stars

- Tutorials

- References

- Configuration

- Identify versions

- Terraform tools

- References

- More on DevOps

This tutorial is a step-by-step, hands-on, deep yet succinct introduction to using HashiCorp’s Terraform to build, change, and version resources running on multiple cloud platforms. The sequence of topics has been carefully arranged for quicker learning, based on various tutorials on this topic.

NOTE: Content here is my personal opinion, and not intended to represent any employer (past or present). “PROTIP:” here highlights information I haven’t seen elsewhere on the internet: hard-won, little-known but significant facts based on my personal research and experience.

Why Terraform?

terraform.io (HashiCorp’s marketing home page) says the product is a “tool for building, changing, and versioning infrastructure safely and efficiently”.

“Terraform makes infrastructure provisioning: Repeatable. Versioned. Documented. Automated. Testable. Shareable.”

Repeatable from versioning: Terraform provides a single consistent set of commands and workflow on all clouds. That “future-proofs” infrastructure work.

Use of version-controlled configuration files in an elastic cloud means that the infrastructure Terraform creates can be treated as disposable. This is a powerful concept. Parallel production-like environments can now be created easily (without ordering hardware) temporarily for experimentation, testing, and redundancy for High Availability.

Topics

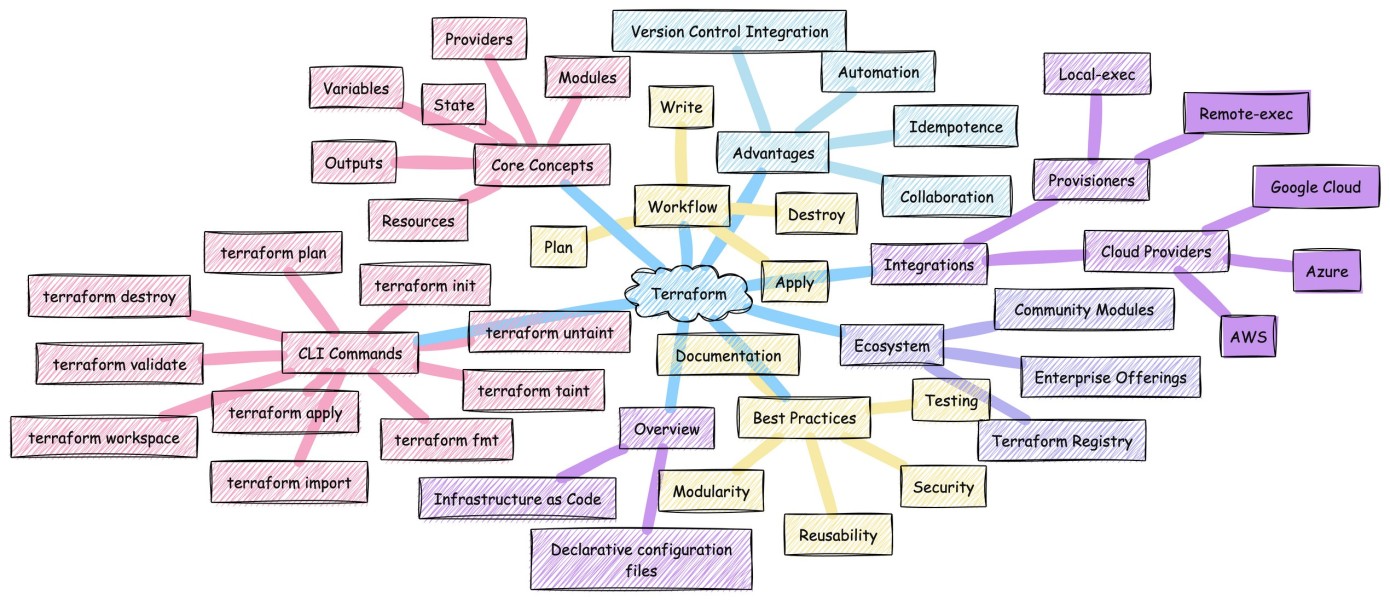

In this diagram by Prashant Lakhera (Fremont, CA):

Secure Learning Tools and Ecosystem

Here is my proposal to ensure that cloud resources are secure when created, the first time and every time.

Although Terraform works on multiple clouds, to simplify the explanation here, we’ll focus on AWS for now.

Resources in AWS can be created and managed using several tools: manually using the AWS Management Console GUI, or manually in a Terminal running CLI (Command Line Interface) commands, shell scripts, or programs written to issue REST API calls. But many enterprise AWS users avoid the GUI and CLI and instead use Infrastructure as Code (IaC) versioned in GitHub repositories, so they can go from dev to qa to stage to prod more quickly and securely.

Although AWS provides their own Cloud Formation language to describe what to provision in AWS, for various reasons, many prefer Terraform. Terraform files are commonly run within an automated CI/CD pipeline so that it is repeatable. Having configurations documented in GitHub enables drift detection which identifies differences between what is defined versus what is actually running.

The AWS Config service logs every change in configuration of resources. The AWS Security Hub service looks in logs for vulnerabilities to issue Findings based on its own “AWS Foundations” set of policies. AWS provides a webpage of recommendations for remediation, but only by using its own GUI or CloudFormation code, not Terraform coding.

More importantly, findings from AWS are raised for resources that have already been deployed on the internet, and are thus already exposed to public attack.

In today’s hostile internet, we can’t risk an incremental approach to achieving the security needed. We really need to achieve full “security maturity” in our Terraform code the first time we deploy it onto the internet.

PROTIP: We need to prevent vulnerabilities before deployment rather than detect them afterward.

Several vendors have created static scan programs. Checkov and TFSec have an interface to the popular VSCode text editor on laptops, which “shifts left” the work of security earlier in the development lifecycle.

The crucial skill needed today is expertise at manually editing Terraform files so that they are “bulletproof”.

One way to climb this steep learning curve is to study known-good and fix known-bad sample Terraform code accompanied by the policies used to detect violations. It’s even better to have each policy associated with recommendations for remediating the Terraform code, along with tutorials about configuration options.

Because cloud services change all the time, a policy creator helps to keep up with all the policies needed. In Terraform Cloud, policies are defined in the Sentinel language. Other vendors define policies in the Rego language processed by the OPA (Open Policy Agent) engine.

When a community of Terraform developers has policies attesting that Terraform code is known-good, their templates can be Shareable, reducing both risk and effort for others.

PROTIP: This approach is essentially TDD (Test Driven Development) applied to infrastructure code.

Atlantis provides a mechanism like GitHub Dependabot, which automatically creates Pull Requests containing remediations. Terraform Cloud provides a GUI to display them.

So here it is: our ecosystem for you to create secure Terraform, the first time and every time.

Recap

Terraform Usage Workflow Stages, Automated

PROTIP: Here is how to get started, from scratch, the quickest (and safest) way with the most automation:

- Install base tools/utilities locally on your Mac.

- Use the GitHub Template to create your repo and use Task to install tools/utilities locally.

- Obtain sample Terraform code (from GitHub or the Terraform.io module registry).

- Obtain cloud credentials, network CIDR subnet definitions, and other preferences for your region(s) in AWS, Azure, GCP, etc., saved to and retrieved from a secure secrets vault.

- Define your Terraform project’s folders and files.

- Code resources in HCL-formatted .tf files.

- Use GitHub Actions to automatically kick off a CI/CD run instead of typing ad-hoc CLI commands to test Terraform.

- If defined, provisioners for remote-exec and local-exec (such as Ansible) are run on servers to configure their processes.

- Optionally, generate a Dependency Graph for visualization.

- Identify security issues running in the cloud (using AWS Config, etc.).

- Perform tuning using Densify for FinOps, etc.

Among Terraform usage workflow stages:

1) Install base tools/utilities

- In a Terminal, if you haven’t already, install Homebrew (see https://brew.sh).

- Use Homebrew to install base tools/utilities (formula names are separated by spaces, not commas):

brew install jq tree git
brew install go-task/tap/go-task   # https://taskfile.dev/
brew install --cask visual-studio-code

- If you prefer using Python, there is a Python module that wraps the terraform command-line tool at https://github.com/beelit94/python-terraform

CLI Keyboard aliases

- To save time typing ad-hoc Terraform CLI commands, define keyboard aliases in a shell file such as my .aliases.zsh:

alias tf="terraform"   # arguments typed after an alias are appended automatically
alias tfa="terraform apply -auto-approve"
alias tfd="terraform destroy"
alias tffd="terraform fmt -diff"
alias tfi="terraform init"
alias tfp="terraform plan"
alias tfr="terraform refresh"
alias tfs="terraform show"
alias tfsl="terraform state list"
alias tfsp="terraform state pull"
alias tfv="terraform validate"
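An alias can only append arguments at the end of its expansion, so a value that belongs in the middle of a command needs a shell function instead. Below is a minimal sketch that works in bash and zsh; the function name tfpv and the convention of one ENV.tfvars file per environment are assumptions for illustration, not part of my .aliases.zsh:

```shell
# tfpv ENV [EXTRA_ARGS...]: run "terraform plan" with the var file for ENV.
# Hypothetical helper: assumes a file named ENV.tfvars (e.g. staging.tfvars).
tfpv() {
  if [[ -z "${1:-}" ]]; then
    echo "usage: tfpv ENV [terraform plan args...]" >&2
    return 1
  fi
  local env="$1"
  shift
  # The var file goes in the middle of the command, which an alias cannot do.
  terraform plan -var-file="${env}.tfvars" "$@"
}
```

For example, typing tfpv staging -out=staging.tfplan runs terraform plan -var-file=staging.tfvars -out=staging.tfplan.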

Shell files to call

- PROTIP: To save yourself typing (and typos), define a shell file to invoke each different pipeline. Make each file executable once, then run it:

chmod +x abc-dev-fe.sh
./abc-dev-fe.sh

chmod +x abc-stage-fe.sh
./abc-stage-fe.sh
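What goes inside such a file depends on your pipeline. As a hypothetical sketch, each wrapper could derive a tfvars file name from its own name (abc-dev-fe.sh would use abc-dev-fe.tfvars) and run a non-interactive init and plan. The naming convention and the function name run_pipeline are assumptions:

```shell
# run_pipeline NAME: init, then plan using NAME.tfvars, saving the plan to NAME.tfplan.
# Hypothetical helper; the file-naming convention is an assumption.
run_pipeline() {
  local name="$1"
  local varfile="${name}.tfvars"
  if [[ ! -f "$varfile" ]]; then
    echo "Missing ${varfile}" >&2
    return 1
  fi
  terraform init -input=false || return 1
  terraform plan -input=false -var-file="$varfile" -out="${name}.tfplan"
}

# At the bottom of abc-dev-fe.sh, the wrapper would call:
#   run_pipeline "$(basename "$0" .sh)"
```

Saving the plan with -out lets a later step apply exactly what was reviewed.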

tfvars & override precedence

Terraform provides different mechanisms for obtaining dynamic values.

When troubleshooting, REMEMBER the order of precedence, from lowest to highest (each later source overrides the earlier ones):

- Environment variables defined in shell files are overridden by all other ways of specifying data:

export TF_VAR_filename="/.../abc-stage.txt"

Alternatively, specify a value for the variable “env” (abbreviation for environment) after the prefix TF_VAR_:

export TF_VAR_env=staging

CAUTION: It’s best to avoid using environment variables to store secrets because other programs can read them. When using environment variables to set sensitive values, those values remain in your environment and command-line history.

- Within terraform.tfvars

- Within terraform.tfvars.json

- Within *.auto.tfvars files (processed in lexical order of their filenames), such as:

filename = "/root/something.txt"

- Command-line flags -var or -var-file override all other techniques of providing values (when several are given, they are processed in the order provided, so the last one wins):

terraform apply -var "filename=/.../xxx-staging.txt"

Note that values specified at run-time in environment variables named with the “TF_VAR_” prefix have the lowest precedence. Unlike many other systems, Terraform lets values in terraform.tfvars, terraform.tfvars.json, and *.auto.tfvars files override environment variables, and -var and -var-file flags override everything else.
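To make “the later source wins” concrete, here is a simplified bash simulation of the rule. It is not how Terraform itself parses variables: it reads only flat lines such as name = "value", skips terraform.tfvars.json and -var-file, and treats an empty value as unset. The function names are hypothetical:

```shell
# tfvars_value FILE NAME: print the value assigned to NAME in FILE, if any.
# Only handles flat lines of the form:  name = "value"
tfvars_value() {
  local file="$1" name="$2"
  [[ -f "$file" ]] || return 0
  sed -n -E "s/^[[:space:]]*${name}[[:space:]]*=[[:space:]]*\"(.*)\"[[:space:]]*\$/\1/p" "$file" | tail -n 1
}

# resolve_tf_var NAME [-var NAME=VALUE ...]: print the value that would win,
# applying sources from lowest to highest precedence (later ones override).
resolve_tf_var() {
  local name="$1"; shift
  local env_name="TF_VAR_${name}"
  local value="${!env_name}"                              # 1. TF_VAR_NAME env var
  local candidate f
  candidate="$(tfvars_value terraform.tfvars "$name")"    # 2. terraform.tfvars
  [[ -n "$candidate" ]] && value="$candidate"
  for f in *.auto.tfvars; do                              # 3. *.auto.tfvars, lexical order
    [[ -e "$f" ]] || continue
    candidate="$(tfvars_value "$f" "$name")"
    [[ -n "$candidate" ]] && value="$candidate"
  done
  while [[ $# -gt 0 ]]; do                                # 4. -var flags, in order given
    if [[ "$1" == "-var" && "${2:-}" == "${name}="* ]]; then
      value="${2#"${name}="}"
      shift
    fi
    shift
  done
  printf '%s\n' "$value"
}
```

Run it in a Terraform folder, e.g. resolve_tf_var env -var env=prod, to see which source a value came from while troubleshooting.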

Among Terraform usage workflow stages:

2) Task Template to Install Utilities Locally

PROTIP: Several utilities are needed to ensure the correctness and security of each type of file used. Using the latest version of each may not result in all of them working well together. So the multi-talented Kalen Arndt created a GitHub template that automatically installs the versions of utilities your Mac needs, which he has validated. His template makes use of Task (an improvement over Make, written in Go) and asdf.

- In a browser go to:

- Click “Use this template” and “Create a new repository”.

- Click “Select an owner” and one of your accounts (which I call your_acct below).

- Type a Repository name (which I call your_repo_name below).

- Click the green “Create repository from template”.

In a Terminal app:

- Construct a command to download the repo you created above:

git clone git@github.com:your_acct/your_repo_name.git
cd your_repo_name

PROTIP: Pick a name with the most important keywords first.

- File .tool-versions specifies the pinned version of each tool/utility installed:

- terraform-docs - Generate documentation from Terraform modules in various output formats

- tfupdate - Update version constraints in your Terraform configurations

- checkov - Prevent cloud misconfigurations and find vulnerabilities during build-time in infrastructure as code, container images and open source packages with Checkov by Bridgecrew (owned by Prisma Cloud).

- tfsec - Security scanner for your Terraform code. TODO: Use other scanners as well?

- pre-commit - A framework for managing and maintaining multi-language git pre-commit hooks (which automate actions).

- python - The Python programming language [See my tutorial on Python]

- shfmt - A shell parser, formatter, and interpreter with bash support; includes shfmt

- shellcheck - ShellCheck, a static analysis tool for shell scripts

- vault - A tool for secrets management, encryption as a service, and privileged access management

- TODO: Install awscli, kubectl, etc. for Blueprints (below)

- But rather than occasionally checking manually, Kalen updates each version based on GitHub issues (such as this one) created automatically by the Renovate dependency checker. The “renovate” utility automates updates of 3rd-party dependencies (multi-platform and multi-language) via pull requests. It is configured by preset “extends” (like ESLint).

References:

- https://docs.renovatebot.com/

- https://www.mend.io/free-developer-tools/renovate/

- https://www.augmentedmind.de/2021/07/25/renovate-bot-cheat-sheet/

- https://blog.logrocket.com/renovate-dependency-updates-on-steroids/

- FYI: Settings that define whether pre-commit and asdf updates are enabled are specified in the renovate.json file within the .github folder.

- Install the tools/utilities on your laptop as defined in the .tool-versions file described above:

task init

This command runs the Taskfile.yaml.

Notice that to add a utility, both the Taskfile.yaml and .tool-versions files need to be edited.

Note that Task invokes asdf, which provides a single CLI tool and command interface to manage the installation of multiple versions of each project runtime. [Intro doc]

asdf is used instead of switching among different versions of Terraform using tfenv or the little-known Homebrew pin and switch commands pointing to different git commits.

- Get to know the .vscode/extensions.json file, which lists the extensions Kalen likes to have installed in Visual Studio Code:

- pjmiravalle.terraform-advanced-syntax-highlighting

- editorconfig.editorconfig

- oderwat.indent-rainbow

- yzhang.markdown-all-in-one

- davidanson.vscode-markdownlint

- mohsen1.prettify-json

- run-at-scale.terraform-doc-snippets

- gruntfuggly.todo-tree

- redhat.vscode-yaml

- vscode-icons-team.vscode-icons

- shd101wyy.markdown-preview-enhanced

- FYI: The .editorconfig file defines (for each type of file) the indentation and other settings Visual Studio Code should use to enforce consistent formatting.

- View pre-commit actions defined in .pre-commit-config.yaml to verify the version numbers:

- https://github.com/pre-commit/pre-commit-hooks/releases/

- https://github.com/antonbabenko/pre-commit-terraform/releases/

- https://github.com/syntaqx/git-hooks/releases/

- QUESTION: Within the .github/workflows folder is the push-tf-registry.yml file, which defines GitHub Actions to “Release to terraform public registry” specific SHAs.

renovate chore(deps): pin dependencies
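Coming back to the task init step above: the exact steps live in the template’s Taskfile.yaml, but conceptually an asdf-based install walks .tool-versions and installs each pinned version. A rough sketch under that assumption (hypothetical function name; it only prints the asdf commands unless DRY_RUN=0, and it installs only the first version listed on each line):

```shell
# install_from_tool_versions [FILE]: install each "tool version" line of an asdf
# .tool-versions file. Prints the asdf commands instead unless DRY_RUN=0.
install_from_tool_versions() {
  local file="${1:-.tool-versions}" tool version
  if [[ ! -f "$file" ]]; then
    echo "No ${file} found" >&2
    return 1
  fi
  # "|| [[ -n $tool ]]" also processes a last line that lacks a trailing newline.
  while read -r tool version _ || [[ -n "$tool" ]]; do
    [[ -z "$tool" || "$tool" == \#* ]] && continue   # skip blank lines and comments
    if [[ "${DRY_RUN:-1}" == "1" ]]; then
      echo "asdf plugin add ${tool}"
      echo "asdf install ${tool} ${version}"
    else
      asdf plugin add "$tool" || true   # may fail if the plugin is already added
      asdf install "$tool" "$version"
    fi
  done < "$file"
}
```

This also shows why adding a utility means editing .tool-versions: the version comes from that file, while the Taskfile decides what runs.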

Among Terraform usage workflow stages:

4) Obtain cloud credentials and network preferences

Running my script that defines keyboard aliases enables you to type in Terminal:

awscreds

That would invoke your favorite editor to edit ~/.aws/credentials.

Alternatively, you can run

aws configure

to specify:

AWS Access Key ID [****************MHQJ]:
AWS Secret Access Key [****************CXH7]:
Default region name [us-east-1]:
Default output format [json]:
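Whichever way you enter them, it helps to confirm the credentials actually work before Terraform needs them (expired tokens produce the 403 ExpiredToken error shown later). A minimal sketch using the AWS CLI’s sts get-caller-identity; the function name is hypothetical, and the AWS CLI honors AWS_PROFILE if you use a named profile:

```shell
# check_aws_credentials: fail fast if the AWS CLI cannot authenticate.
check_aws_credentials() {
  local account region
  if ! account="$(aws sts get-caller-identity --query Account --output text 2>/dev/null)"; then
    echo "AWS credentials missing or expired: run 'aws configure' or refresh your session" >&2
    return 1
  fi
  # "aws configure get" exits non-zero when the setting is not defined.
  region="$(aws configure get region 2>/dev/null)" || region="<unset>"
  echo "Authenticated to AWS account ${account} in region ${region}"
}
```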

Among Terraform usage workflow stages:

5) Get sample Terraform code

PROTIP: It’s too dangerous to start from scratch because misconfigurations can cost large cloud bills and leak valuable data. So we need to help each other on a collaborative mutual “known-secure” platform.

PROTIP: Begin with your cloud vendor selection. Going directly to a Kubernetes cloud service is the least time-consuming approach. But that costs more money.

| Cloud | VMs | Container | K8s |
|---|---|---|---|
| AWS: | EC2 | ECS | EKS |
| Azure: | AVM | ACS | AKS |
| GCP: | GCE | GCS | GKE |

Comparisons:

- https://learn.boltops.com/curriculums/aws-and-terraform/courses/aws-eks-kubernetes/lessons/aws-eks-vs-azure-aks-vs-google-gke

- https://github.com/boltops-learn (private repos by invitation)

Control Plane pricing: AKS is free. GKE is free for one zonal cluster, then $72/month. Pricing for EKS alone is $73/month for each cluster in us-west-2 (0.10 USD per hour x 730 hours per month).
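The EKS figure is simple arithmetic: hourly rate x hours per month x number of clusters. A small hypothetical helper that reproduces it, assuming 730 hours per month as in the text (awk handles the decimal math that bash cannot):

```shell
# monthly_cost HOURLY_RATE [HOURS_PER_MONTH] [CLUSTERS]: print monthly cost in USD.
monthly_cost() {
  local rate="$1" hours="${2:-730}" clusters="${3:-1}"
  awk -v r="$rate" -v h="$hours" -v c="$clusters" 'BEGIN { printf "%.2f\n", r * h * c }'
}
```

For example, monthly_cost 0.10 prints 73.00, and monthly_cost 0.10 730 3 prints 219.00 for three clusters.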

Difficulty: the click-button GUI makes AKS and GKE the easiest to set up.

There’s also NKS (Naver Kubernetes Service).

Terraform Kubernetes

PROTIP: Kubernetes has a lot of “knobs”: there is a lot to configure. So we would like a “starter set” of versioned Infrastructure as Code (IaC) in Terraform to create a baseline environment containing the various add-ons typically added to Kubernetes. Ideally, these contain the security controls needed to be “production-worthy”, yet can be brought up quickly for further customization.

Use of Kubernetes accelerates time to market for platform initiatives through the Separation of Concerns - Platform Teams vs Application Teams:

Platform teams build the tools that provision, manage, and secure the underlying infrastructure while application teams are free to focus on building the applications that deliver business value to customers. It also gives operators more control in making sure production applications are secure, compliant, and highly available. Platform teams have full control to define standards on security, software delivery, monitoring, and networking that must be used across all applications deployed.

This allows developers to be more productive because they don’t have to configure and manage the underlying cloud resources themselves. Application teams need to focus on writing code and quickly shipping product, but there must be certain standards that are uniform across all production applications to make them secure, compliant, and highly available.

My blog on Kubernetes describes these advantages of using Kubernetes:

- Resiliency (auto-restart nodes that fail)

- Imposition of a shared operational workflow using a common software development lifecycle (SDLC) and a common management API

- Deployment velocity that can be better supported by a central team of experts

- Achieve resource utilization density

Bear in mind that Kubernetes is not magic:

- Nodes can take 15 seconds to start, so overprovisioning is necessary

- Clusters run all the time, even when there is no traffic

Docs on Terraform Kubernetes:

- https://registry.terraform.io/providers/hashicorp/kubernetes/latest/docs

- https://registry.terraform.io/providers/hashicorp/kubernetes/latest/docs/guides/getting-started

- https://kubernetes.io/blog/2020/06/working-with-terraform-and-kubernetes/

- https://opensource.com/article/20/7/terraform-kubernetes

- https://unofficial-kubernetes.readthedocs.io/en/latest/user-guide/kubectl-overview/

-

VIDEO: Terraforming the Kubernetes Land Oct 13, 2017 by Radek Simko (@RadekSimko)

- https://logz.io/blog/kubernetes-as-a-service-gke-aks-eks/

AWS

The AWS Partner Solutions website has “Quick Starts” of IaC code. A search for “terraform” includes:

- Terraform modules

- Amazon VPC for Terraform on AWS Provisions Amazon Virtual Private Cloud (Amazon VPC) resources managed by Terraform on the Amazon Web Services (AWS) Cloud.

- https://github.com/aws-quickstart/quickstart-eks-hashicorp-consul

- https://aws-quickstart.github.io/quickstart-hashicorp-consul/

EC2

- https://aws.amazon.com/ec2/

ECS

- https://aws.amazon.com/ecs/

- https://aws.amazon.com/eks/faqs/

- https://developer.hashicorp.com/consul/tutorials/cloud-production/consul-ecs-hcp

- https://logz.io/blog/aws-eks-features/

- https://github.com/Capgemini/terraform-amazon-ecs/ (not updated since 2016)

EKS

- https://aws.amazon.com/eks/

- https://aws.amazon.com/blogs/aws/amazon-elastic-container-service-for-kubernetes/ from 2017

- https://github.com/clowdhaus/eks-reference-architecture

- https://github.com/terraform-aws-modules/terraform-aws-eks

- https://appfleet.com/blog/amazon-elastic-container-service-for-kubernetes-eks/

- https://www.youtube.com/watch?v=Qy2A_yJH5-o

Some “best practices” followed include:

- “EKS Best Practices Guides” A best practices guide for day 2 operations, including operational excellence, security, reliability, performance efficiency, and cost optimization. From this repo.

- “Provisioning Kubernetes clusters on AWS with Terraform and EKS” (using eksctl)

EKS can be based on AWS Fargate, which manages nodes for you so you don’t have to specify server instance types. Just tell EKS how much RAM and CPU you need. (Same with GKE Autopilot.)

List commands: aws eks help

- associate-encryption-config

- associate-identity-provider-config

- create-addon

- create-cluster

- create-fargate-profile

- create-nodegroup

- delete-addon

- delete-cluster

- delete-fargate-profile

- delete-nodegroup

- deregister-cluster

- describe-addon

- describe-addon-versions

- describe-cluster

- describe-fargate-profile

- describe-identity-provider-config

- describe-nodegroup

- describe-update

- disassociate-identity-provider-config

- get-token

- help

- list-addons

- list-clusters

- list-fargate-profiles

- list-identity-provider-configs

- list-nodegroups

- list-tags-for-resource

- list-updates

- register-cluster

- tag-resource

- untag-resource

- update-addon

- update-cluster-config

- update-cluster-version

- update-kubeconfig

- update-nodegroup-config

- update-nodegroup-version

- wait
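Several of these subcommands chain naturally after terraform apply: wait until the cluster is ACTIVE, report its status, then write a kubeconfig entry. A sketch using wait cluster-active, describe-cluster, and update-kubeconfig; the function name is hypothetical, and the cluster name and region are passed in:

```shell
# wait_for_cluster NAME REGION: block until the EKS cluster is ACTIVE,
# print its status, then add/refresh its entry in ~/.kube/config.
wait_for_cluster() {
  local name="$1" region="$2" status
  aws eks wait cluster-active --name "$name" --region "$region" || return 1
  status="$(aws eks describe-cluster --name "$name" --region "$region" \
    --query cluster.status --output text)" || return 1
  echo "Cluster ${name} is ${status}"
  aws eks update-kubeconfig --name "$name" --region "$region"
}
```

For example: wait_for_cluster eks-cluster-with-new-vpc us-west-2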

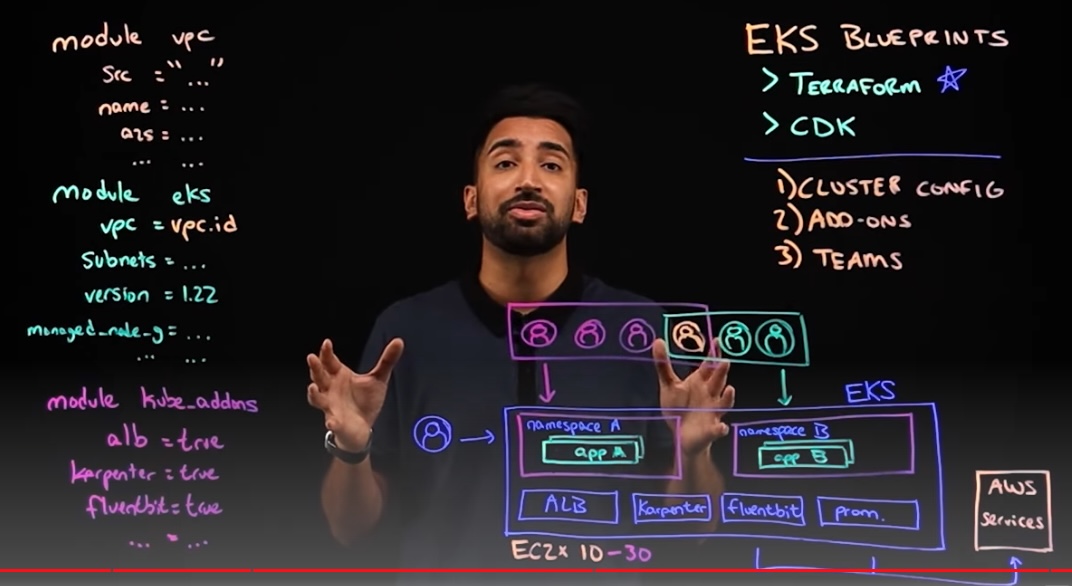

EKS Blueprints for Terraform

So I created a shell script that enables you, with one command in Terminal, to install on a Mac the utilities needed to create a base set of AWS resources and support a production instance of EKS. It’s a simpler local alternative to using the AWS Cloud9 IDE on a Linux machine, as is done in Workshop Studio sessions at AWS conferences.

AWS EKS Blueprint (announced April 20, 2022) is a “tool-chain platform” on top of Helm, Terraform, ArgoCD, etc. It comes with “batteries included”: a pre-configured base of Terraform IaC components to assemble the desired state of each team’s EKS environment, such as the control plane, worker nodes, and Kubernetes add-ons. It enables multiple teams to deploy EKS across any number of accounts and regions.

Its gh-pages branch is used to display this webpage.

PROTIP: My shell script makes use of The Blueprints repo created by the AWS IA (infrastructure and automation) team within AWS:

Blueprint add-ons

That webpage lists the dozens of add-on containers (services) that have already been integrated into the Blueprints for securing, scaling, monitoring, and operating containerized infrastructure.

Each add-on (feature) is defined as a module within this module folder.

All of them come with relevant security controls built in.

VIDEO: “10 Must-Have Kubernetes Tools”

CLI tools:

- Local development - Rancher Desktop

- Manifests

- Operate - kubectl extensions

- https://github.com/ahmetb/kubectx to change contexts (cluster ARNs) and k8s namespaces

- kubens

- 3rd-party apps - databases, etc. using Helm

- Observe with https://k9scli.io (Terminal + vim + k8s) https://www.youtube.com/watch?v=boaW9odvRCc

https://www.youtube.com/watch?v=wEQJi7_4V9Q

In cluster:

- Synchronize current state - ArgoCD or Flux

- Infrastructure - https://crossplane.io

- Applications definition - Instead of Helm, crossplane or KubeVela

- Metrics - Prometheus.io to collect for Grafana dashboards

- Log collection - grafana.com/oss/loki and Promtail to ship logs

- Policies (admission controllers) - Kyverno.io or open-policy-agent.github.io/gatekeeper

- Service Mesh sidecars

- TLS certificates - https://cert-manager.io using Let’s Encrypt

https://www.youtube.com/watch?v=BII6ZY2Rnlc

Blueprint examples

Several “examples” (cluster use cases) have been defined. Each example references a particular set of modules.

From the AWS EKS Blueprints announcement:

example eks-cluster-with-new-vpc

This blog post demonstrates use of my shell script, which enables a lone individual developer/SRE/student to independently and quickly create an isolated full-featured production-candidate EKS environment, by typing a single command.

Most Kubernetes tutorials (KodeKloud, etc.) teach you atomic kubectl and eksctl commands. You need those to pass CKAD exams. But here I describe a way to repeatedly create a production environment with all its complexities, which is the job of Kubernetes admins.

Specifically, my shell script currently automates several manual steps described at the AWS EKS Blueprints “Getting Started” page, to run Terraform in the eks-cluster-with-new-vpc folder to create a Kubernetes cluster (in one Terminal command):

- A VPC with 3 Private Subnets and 3 Public Subnets (or more if you choose)

- EKS Cluster Control plane with one managed node group

- Internet gateway for Public Subnets and NAT Gateway for Private Subnets

- Plus many possible add-ons

That is the starting point for a full-featured production-worthy environment.

PROTIP: It’s quicker and easier to run a script. Manually invoking one command at a time is time-consuming and error-prone. It’s too easy to skip a step, which causes errors and wastes time troubleshooting. A script ensures that steps are run in sequence. Checks can be added before each command to make sure its preconditions are met, and after each command to ensure that the step achieved what was intended.
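A minimal sketch of that pattern in bash: each step names a precondition, an action, and a postcondition as shell functions, and stops at the first failure. This illustrates the idea; it is not the code inside my script, and all helper names are hypothetical:

```shell
# run_step NAME PRECHECK ACTION POSTCHECK
# PRECHECK, ACTION and POSTCHECK are names of shell functions (or commands).
run_step() {
  local name="$1" pre="$2" action="$3" post="$4"
  echo "=== ${name}"
  "$pre"    || { echo "Precondition failed: ${name}" >&2; return 1; }
  "$action" || { echo "Step failed: ${name}" >&2; return 1; }
  "$post"   || { echo "Postcondition failed: ${name}" >&2; return 1; }
}

# Example wiring (hypothetical helper names):
#   have_terraform() { command -v terraform >/dev/null; }
#   tf_init()        { terraform init -input=false; }
#   tf_initialized() { [[ -d .terraform ]]; }
#   run_step "terraform init" have_terraform tf_init tf_initialized || exit 1
```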

My shell script makes it quicker to test changes in Helm charts and the addition of add-ons for more capability in your Kubernetes environment (such as Observability, backup, etc.).

Once proven independently, changes to the IaC code base can then be confidently committed into the team GitHub repo for running within shared CI/CD infrastructure (using GitHub Actions, etc.). This enables you to say in your PR:

- Yes, I have tested the PR using my local account setup (Provide any test evidence report under Additional Notes)

Use my shell script while learning to use CI/CD SaaS operations (such as Argo CD), without begging for team access.

Let’s start with the end product: a cluster.

k8s_nodes_pods created

To confirm what was created, run my shell script with the -v parameter:

./eks-start1.sh -v

That does the same as manually typing these Kubernetes status commands:

kubectl get nodes

should return a list such as this:

NAME STATUS ROLES AGE VERSION
ip-10-0-10-190.us-west-2.compute.internal Ready <none> 9m14s v1.23.13-eks-fb459a0
ip-10-0-11-151.us-west-2.compute.internal Ready <none> 9m9s v1.23.13-eks-fb459a0
ip-10-0-12-239.us-west-2.compute.internal Ready <none> 9m15s v1.23.13-eks-fb459a0

kubectl get pods --all-namespaces

should return “Running” status for:

NAMESPACE NAME READY
amazon-cloudwatch aws-cloudwatch-metrics-8c4dl 1/1
amazon-cloudwatch aws-cloudwatch-metrics-g67tv 1/1
amazon-cloudwatch aws-cloudwatch-metrics-khz28 1/1
cert-manager cert-manager-559c84c94f-jpdlv 1/1
cert-manager cert-manager-cainjector-69cfd4dbc9-wpftq 1/1
cert-manager cert-manager-webhook-5f454c484c-j8jvl 1/1
gatekeeper-system gatekeeper-audit-9b7795dcf-gzn49 1/1
gatekeeper-system gatekeeper-controller-manager-78b8774b7c-57tt5 1/1
gatekeeper-system gatekeeper-controller-manager-78b8774b7c-b7hks 1/1
gatekeeper-system gatekeeper-controller-manager-78b8774b7c-hl2vg 1/1
kube-system aws-load-balancer-controller-854cb78798-ckcs6 1/1
kube-system aws-load-balancer-controller-854cb78798-rpmwc 1/1
kube-system aws-node-f4zxh 1/1
kube-system aws-node-gl9vt 1/1
kube-system aws-node-qg4nz 1/1
kube-system cluster-autoscaler-aws-cluster-autoscaler-7ccbf68bc9-d6hc5 1/1
kube-system cluster-proportional-autoscaler-coredns-6fcfcd685f-5spb8 1/1
kube-system coredns-57ff979f67-4nnh2 1/1
kube-system coredns-57ff979f67-q4jlj 1/1
kube-system ebs-csi-controller-79998cddcc-8fttd 6/6
kube-system ebs-csi-controller-79998cddcc-wkssp 6/6
kube-system ebs-csi-node-6pccm 3/3
kube-system ebs-csi-node-wv2jm 3/3
kube-system ebs-csi-node-xqjpp 3/3
kube-system kube-proxy-cgjsq 1/1
kube-system kube-proxy-fwmv9 1/1
kube-system kube-proxy-lt8cg 1/1
kube-system metrics-server-7d76b744cd-ztg98 1/1
kubecost kubecost-cost-analyzer-7fc46777c4-5kdjw 2/2
kubecost kubecost-kube-state-metrics-59fd4555f4-tghnt 1/1
kubecost kubecost-prometheus-node-exporter-89vg6 1/1
kubecost kubecost-prometheus-node-exporter-fll24 1/1
kubecost kubecost-prometheus-node-exporter-pjhsz 1/1
kubecost kubecost-prometheus-server-58d5cf79df-jxtgq 2/2
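Rather than eyeballing a list this long, a filter can print only the pods that are not healthy. A sketch, assuming the default column layout of kubectl get pods --all-namespaces --no-headers (NAMESPACE, NAME, READY, STATUS, RESTARTS, AGE); the function name is hypothetical, and pods from completed Jobs will also be listed:

```shell
# not_ready_pods: read `kubectl get pods --all-namespaces --no-headers` on stdin;
# print NAMESPACE/NAME for pods that are not Running or not fully ready.
not_ready_pods() {
  awk '{
    split($3, ready, "/")   # READY column, e.g. "1/2" -> ready[1]=1, ready[2]=2
    if ($4 != "Running" || ready[1] != ready[2]) print $1 "/" $2
  }'
}

# Usage (requires kubectl configured for the cluster):
#   kubectl get pods --all-namespaces --no-headers | not_ready_pods
```

An empty result means every pod reports Running with all containers ready.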

TODO: A diagram of resources above?

TODO: Description of what each node provides and how they communicate with each other.

The 18 pods created under namespace “kube-system” are:

- 2 AWS Load Balancer Controllers

- 3 aws-node pods, one per node, which run the vpc-cni add-on (Amazon VPC Container Network Interface plugin)

- 1 Cluster Autoscaler

- 1 proportional autoscaler for CoreDNS

- 2 CoreDNS

- 2 EBS CSI (Container Storage Interface) controllers

- 3 EBS CSI node pods

- 3 kube-proxy pods

- 1 Kubernetes Metrics Server
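To check counts like the 18 above without counting by hand, the same kubectl output can be tallied per namespace. A sketch, assuming the --no-headers column layout where the namespace is the first field; the function name is hypothetical:

```shell
# pods_per_namespace: read `kubectl get pods --all-namespaces --no-headers` on stdin
# and print "NAMESPACE COUNT" lines, sorted by namespace (C locale for stable order).
pods_per_namespace() {
  awk '{ count[$1]++ } END { for (ns in count) print ns, count[ns] }' | LC_ALL=C sort
}

# Usage:
#   kubectl get pods --all-namespaces --no-headers | pods_per_namespace
```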

The calling shell script

PROTIP: Before running any script on your machine, a good security practice is to understand what it really does.

- In a browser, view the script online in GitHub:

https://github.com/wilsonmar/mac-setup/blob/master/eks-start1.sh

PROTIP: A basic tenet of this script’s design is that no action is taken unless the user specifies a parameter.

If a script is called with no parameters:

./eks-start1.sh

the script presents a menu of parameters and command examples:

===========================
202?-??-15T15.05.50-0700 ./eks-start1.sh v0.19
PARAMETER OPTIONS:
-h      # show this help menu by running without any parameters
-cont   # continue (NOT stop) on error
-v      # -verbose (list rundetails to console)
-vv     # -very verbose (instance IDs, volumes, diagnostics, tracing)"
-x      # set -x to display every console command
-q      # -quiet headings for each step
-vers   # list versions released
-I      # -Install utilities brew, etc. (default is install)
-tf "1.3.6"   # version of Terraform to install
-gpg    # Install gpg2 utility and generate key if needed
-email "johndoe@gmail.com"   # to generate GPG keys for
-DGB    # Delete GitHub at Beginning (download again)
-c      # -clone again from GitHub (default uses what exists)
-GFP "/Users/wilsonmar/githubs"   # Folder path to install repo from GitHub
-aws    # -AWS cloud awscli
-region "us-east-1"   # region in the cloud awscli
-KTD    # Kubernetes Terraform Deploy
-DTB    # Destroy Terraform-created resources at Beginning of run
-DTE    # Destroy Terraform-created resources at End of run
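The “no parameters, no action” design can be sketched with a case statement. This is a hypothetical, trimmed-down illustration handling only -v, -KTD, and -region; it is not the script’s actual parsing code:

```shell
# Hypothetical sketch: take no action unless at least one parameter is given.
usage() {
  echo "PARAMETER OPTIONS:"
  echo "-v                 # verbose"
  echo "-KTD               # Kubernetes Terraform Deploy"
  echo "-region \"REGION\"   # region in the cloud"
}

parse_args() {
  VERBOSE=0 KTD=0 REGION=""          # globals read by the rest of the script
  if [[ $# -eq 0 ]]; then
    usage
    return 1                         # no parameters: show the menu, do nothing
  fi
  while [[ $# -gt 0 ]]; do
    case "$1" in
      -v) VERBOSE=1 ;;
      -KTD) KTD=1 ;;
      -region) REGION="${2:-}"; shift ;;
      -h) usage; return 1 ;;
      *) echo "Unknown parameter: $1" >&2; return 2 ;;
    esac
    shift
  done
  return 0
}

# In the script body:  parse_args "$@" || exit
```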

To run the script to establish Kubernetes cluster:

time ./eks-start1.sh -v -KTD

But before you do that, let’s look at the tools and utilities that need to be installed.

Utilities to run Blueprint

To install all the utilities needed (brew, jq, git, tree, awscli, kubectl, terraform, etc.):

./eks-start1.sh -I -v

-v displays additional verbosity.

-q quiets the headings displayed by the h2 custom-defined command.

GitHub to load Blueprints

In STEP 9, the script clones: https://github.com/aws-ia/terraform-aws-eks-blueprints

--depth 1 creates a shallow clone, which implies --single-branch, so it excludes branches such as gh-pages (used to display the website “Amazon EKS Blueprints for Terraform”). This results in the du -h command showing 26MB of disk space usage (instead of 40MB with all branches).
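A sketch of that clone step as a reusable function: it reuses an existing folder unless asked to re-clone (similar in spirit to the script’s -c option) and always clones shallowly. The function name and argument order are assumptions:

```shell
# clone_blueprints DEST [RECLONE]: shallow-clone the EKS Blueprints repo into DEST.
# Reuses an existing DEST unless RECLONE is 1. Hypothetical helper.
clone_blueprints() {
  local dest="$1" reclone="${2:-0}"
  local url="https://github.com/aws-ia/terraform-aws-eks-blueprints"
  [[ -n "$dest" ]] || { echo "usage: clone_blueprints DEST [1]" >&2; return 1; }
  if [[ -d "$dest" && "$reclone" != "1" ]]; then
    echo "Using existing ${dest}"
    return 0
  fi
  rm -rf -- "$dest"                    # discard any previous copy before re-cloning
  git clone --depth 1 "$url" "$dest"   # --depth 1 implies --single-branch
}
```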

Run

Upon failure, the script automatically runs Cleanup terraform destroy commands (unless the script’s override parameter was specified).

export AWS_REGION=us-west-2
aws ec2 describe-availability-zones --output text --query 'AvailabilityZones[0].[RegionName]'
aws configure list | grep region
aws configure get region --profile "$PROFILE_NAME"
terraform plan

PROTIP: Run Tfsec (and other scanners of Terraform HCL) before terraform apply. Tfsec scans the .tf source files directly; Checkov can also scan the JSON form of a saved terraform plan.
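One way to wire scanning in ahead of apply: save a plan, export it as JSON with terraform show -json, then run tfsec on the source folder and Checkov on the JSON plan. A sketch assuming both scanners are installed (they are in .tool-versions above); the function name is hypothetical:

```shell
# scan_before_apply [DIR]: save a plan, export it as JSON, then scan the HCL
# source with tfsec and the JSON plan with Checkov. Nothing is applied.
scan_before_apply() {
  local dir="${1:-.}"
  (
    cd "$dir" || exit 1
    terraform plan -input=false -out=tfplan || exit 1
    terraform show -json tfplan > tfplan.json || exit 1
    tfsec . || exit 1                  # static scan of the .tf files
    checkov -f tfplan.json || exit 1   # scan of the planned changes
  )
}
```

Both scanners exit non-zero when they report failed checks, so a failing scan stops the run before terraform apply.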

terraform apply -target="module.vpc" -auto-approve

Apply complete! Resources: 23 added, 0 changed, 0 destroyed.

kubectl config view --minify -o jsonpath='{.clusters[].name}'

arn:aws:eks:us-west-2:670394095681:cluster/eks-cluster-with-new-vpc

configure_kubectl = "aws eks --region us-west-2 update-kubeconfig --name eks-cluster-with-new-vpc"

aws eks --region "$AWS_REGION" update-kubeconfig --name eks-cluster-with-new-vpc

Updated context arn:aws:eks:us-west-2:670394095681:cluster/eks-cluster-with-new-vpc in /Users/wilsonmar/.kube/config

-

Configure AWS credentials. The account used should be granted a minimum set of IAM policies.

-

Download the script:

curl -s "https://raw.githubusercontent.com/wilsonmar/mac-setup/master/eks-start1.sh" --output eks-start1.sh

-

Set permissions (needed only one time):

chmod +x eks-start1.sh

-

Set your Mac to not sleep: Click the Apple logo on the top-left corner of your screen, and select System Preferences. In the upper-right, type on Battery. At the left menu, click Battery. Drag the slider to Never. Click “Power Adapter” and drag that slider to Never.

sudo systemsetup -setcomputersleep Never

- In Applications > Utilities, open Apple’s Activity Monitor to identify high-CPU processes to close. Then watch how much CPU and memory the Terminal and “terraform” processes consume.

- In Terminal, run the script with a timer and script parameters:

time ./eks-start1.sh -v

Update your AWS credentials if you see messages like this:

│ Error: configuring Terraform AWS Provider: error validating provider credentials: error calling sts:GetCallerIdentity: operation error STS: GetCallerIdentity, https response error StatusCode: 403, RequestID: 9e49efe4-dd08-4b2c-a6df-22a754b8a04d, api error ExpiredToken: The security token included in the request is expired

The time command outputs three timings: real (elapsed wall-clock time), plus user and sys (CPU time spent in user mode and in the kernel). User time can exceed real time when work runs on several CPU cores in parallel. For example:

real 1m47.363s
user 2m41.318s
sys 0m4.013s

- QUESTION: What is the UI that can be seen?

- QUESTION: How to access services within EKS?

- QUESTION: AWS Config security alerts, if any.

- Reuse configured blueprints (in GitHub) to consistently “stamp out” instances across multiple AWS accounts and Regions using continuous deployment automation.
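You can catch the expired-token failure shown above before Terraform spends time planning. One way is to call aws sts get-caller-identity first. That is the same STS call that failed above, and its error text typically includes the code ExpiredToken. Then scan the captured output for that code. This is a minimal sketch, and both function names are hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: return 0 if captured output mentions an expired AWS token.
output_has_expired_token() {
  # $1: file containing captured stdout+stderr from terraform or the aws CLI
  grep -q 'ExpiredToken' "$1"
}

# Hypothetical pre-flight check: ask STS who we are before running Terraform.
check_aws_credentials() {
  local log
  log="$(mktemp)"
  if aws sts get-caller-identity >"$log" 2>&1; then
    rm -f "$log"
    return 0
  fi
  if output_has_expired_token "$log"; then
    echo "AWS session token expired: refresh your credentials, then rerun." >&2
  else
    cat "$log" >&2   # some other failure: show the original message
  fi
  rm -f "$log"
  return 1
}

# Example:
#   check_aws_credentials && time ./eks-start1.sh -v
```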

Add-on for Consul

https://developer.hashicorp.com/consul/docs/k8s/installation/install

Blueprints are defined/added in the main.tf file in each example folder.

Add-ons: There is a growing list of add-ons to the Blueprints, including Prometheus, Karpenter, Nginx, Traefik, AWS Load Balancer Controller, Fluent Bit, Keda, ArgoCD, and Consul.

Here we use Consul as an example of extensibility.

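Each add-on is a module defined in the modules/kubernetes-addons/main.tf file. For example:

module "consul" {
  count             = var.enable_consul ? 1 : 0
  source            = "./consul"
  helm_config       = var.consul_helm_config
  manage_via_gitops = var.argocd_manage_add_ons
  addon_context     = local.addon_context
}

The conditional count creates one instance of the module when var.enable_consul is true and none when it is false. That is why the module is referenced elsewhere as module.consul[0].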

Each module has a folder, such as Consul’s modules/kubernetes-addons/consul.

Consul’s locals.tf file defines:

default_helm_config = {
  name             = local.name
  chart            = local.name
  repository       = "https://helm.releases.hashicorp.com"
  version          = "1.0.1"
  namespace        = local.name
  create_namespace = true
  description      = "Consul helm Chart deployment configuration"
  values           = [templatefile("${path.module}/values.yaml", {})]
}

helm_config = merge(local.default_helm_config, var.helm_config)

argocd_gitops_config = {
  enable = true
}
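Terraform's merge() is a shallow merge. When two arguments define the same key, the later one wins, so var.helm_config only needs the keys you want to change. The sketch below reproduces that last-one-wins behavior with Bash associative arrays, which need Bash 4 or later (macOS ships Bash 3.2 as /bin/bash). It assumes local.name is "consul", and the override values are hypothetical.

```shell
#!/usr/bin/env bash
# Requires Bash 4+ (associative arrays). Mimics
#   helm_config = merge(local.default_helm_config, var.helm_config)
# Start from the defaults, then let each key in the overrides replace the default.

# Defaults, assuming local.name = "consul".
declare -A default_helm_config=(
  [name]=consul
  [repository]=https://helm.releases.hashicorp.com
  [version]=1.0.1
  [namespace]=consul
)
# Hypothetical caller overrides (what var.consul_helm_config might hold).
declare -A helm_config_overrides=(
  [version]=1.0.2
  [namespace]=consul-system
)

declare -A helm_config=()
for key in "${!default_helm_config[@]}"; do
  helm_config[$key]="${default_helm_config[$key]}"
done
for key in "${!helm_config_overrides[@]}"; do
  helm_config[$key]="${helm_config_overrides[$key]}"   # later argument wins
done

# Print the merged map in a stable (sorted) order.
for key in $(printf '%s\n' "${!helm_config[@]}" | sort); do
  echo "$key=${helm_config[$key]}"
done
```

Because the merge is shallow, a values entry supplied in var.helm_config replaces the whole default values list rather than appending to it.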

The enabled add-ons are collected together in the modules/kubernetes-addons/locals.tf file:

argocd_addon_config = {
  ...
  consul = var.enable_consul ? module.consul[0].argocd_gitops_config : null

The HashiCorp Consul add-on is described in the docs:

https://developer.hashicorp.com/consul/docs/k8s/installation/install

https://github.com/aws-ia/terraform-aws-eks-blueprints/blob/main/modules/kubernetes-addons/consul/README.md?plain=1 which references docs at https://developer.hashicorp.com/consul/tutorials/get-started-kubernetes/kubernetes-gs-deploy


values.yaml specifies a 3-replica server.

The “Inputs” section in the README is coded within variables.tf, which defines the “addon_context” variable.

To use GitOps, change the default of the “manage_via_gitops” variable from false to true. (The module "consul" block above sets it from var.argocd_manage_add_ons.) QUESTION?

Additional customizations

TODO: Add a sample application (such as HashiCups).

https://developer.hashicorp.com/consul/docs/k8s/helm

https://helm.sh/docs/intro/using_helm/#customizing-the-chart-before-installing

Additional capabilities to add:

* Deployment platform

* Deployment topology

* TLS Certificates

* Connectivity for operator and clients

* Logging

* Host monitoring

* Application telemetry

* Backups

* Restores

* Upgrades

Other add-ons

https://aws-ia.github.io/terraform-aws-eks-blueprints/main/extensibility/

You may want to try implementing other use cases in the example deployment options (“constructs”) folder not demonstrated here:

- Karpenter auto-scaler for EKS

- Grafana Loki

- Observability Grafana

- IPV6 EKS clusters

- Analytics clusters with Spark or EMR on EKS

PROTIP: Add-ons can be either open-source or licensed.

Modules include:

- aws-eks-fargate-profiles

- aws-eks-managed-node-groups

- aws-eks-self-managed-node-groups

- aws-eks-teams

- aws-kms

- emr-on-eks

- irsa

- kubernetes-addons

- launch-templates

Process Helm charts to configure Kubernetes using CNCF GitOps tool ArgoCD:

https://catalog.workshops.aws/eks-blueprints-terraform/en-US

https://github.com/aws-ia/terraform-aws-eks-blueprints/tree/main/examples

https://developer.hashicorp.com/consul/docs/k8s/installation/install

https://github.com/hashicorp/terraform-aws-consul-ent-k8s

More

https://github.com/kalenarndt/terraform-vault-consul-k8s-integration from Kalen is a module that builds the Root CA, Server TLS Intermediate, Consul Connect Intermediate, Connect Inject Intermediate, Controller Intermediate, KV Secrets Engine, Bootstrap Tokens, Gossip Tokens, Consul Licenses, Vault Policies, Kubernetes Roles for authentication with the policies associated, and outputs a sample Helm values file.

EKS Blueprints is open-sourced two ways, in different repos and workshops:

- EKS Blueprints for Terraform (below)

- EKS Blueprints for CDK, with a workshop at https://catalog.workshops.aws/eks-blueprints-for-cdk/en-US

- https://github.com/aws-quickstart/cdk-eks-blueprints

- https://www.npmjs.com/package/@aws-quickstart/eks-blueprints NPM module

- https://github.com/aws-samples/cdk-eks-blueprints-patterns

- https://github.com/aws-samples/eks-blueprints-workloads

Azure

AVM

https://azure.microsoft.com/en-us/products/virtual-machines/

https://learn.microsoft.com/en-us/azure/architecture/aws-professional/compute

ACS (Azure Container Service)

Retired on January 31, 2020.

A wrapper on top of Azure IaaS to deploy a production-ready Kubernetes, DC/OS, or Docker Swarm cluster.

https://azure.microsoft.com/en-us/products/container-apps/

AKS

https://learn.microsoft.com/en-us/azure/aks/quotas-skus-regions

https://azure.microsoft.com/en-us/products/kubernetes-service/

https://github.com/hashicorp/terraform-azure-consul-ent-k8s

git clone https://github.com/lukeorellana/terraform-on-azure
cd terraform-on-azure

It contains these folders:

- 01-intro

- 02-init-plan-apply-destroy

- 03-terraform-state

- 04-variables

- 05-modules

- 06-advanced-hcl

https://github.com/KevinDMack/TerraformKubernetes to establish K8S using Packer within Azure

GCE

https://www.msp360.com/resources/blog/azure-vm-vs-amazon-ec2-vs-google-ce-cloud-computing-comparison/

GCS

GKE

https://github.com/hashicorp/terraform-gcp-consul-ent-k8s

- To obtain the name of cluster (stored in custom metadata of nodes) from inside a node:

curl http://metadata/computeMetadata/v1/instance/attributes/cluster-name -H "Metadata-Flavor: Google"

CI/CD

https://github.com/fedekau/terraform-with-circleci-example

VIDEO: “Create Preview Environments for Terraform” (using GitHub Actions)

Terraspace generates IaC code

VIDEO: Terraspace.cloud dynamically generates Terraform projects in a centralized manner (which eliminates duplication), so the whole stack can be brought up by a single command:

terraspace up STACK

Unlike Terragrunt, Terraspace automatically creates storage Buckets in the back-end. Terraspace intermixes its own features with those of Terraform (e.g. using ERB templates in backend configuration), needed because Terraform doesn’t allow expressions in the backend block.

Terraspace claims that their CLI hook syntax is “more concise and cleaner”.

Among Terraform usage workflow stages:

4) Terraform project conventions

PROTIP: Consistent definition of HCL folders and files in your Terraform projects would enhance efficiency and teamwork.

tfvars files by env

REMEMBER: Files with the .tfvars extension contain the actual values used in each environment (dev, qa, stage, prod).

Each environment has different needs. For example, the number of instances:

- In dev, env_instance_count = 1

- In qa, env_instance_count = 2

- In stage, env_instance_count = 4

- In prod, env_instance_count = 4
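The sketch below writes one .tfvars file per environment, using the instance counts listed above. It assumes your configuration declares a matching input, for example variable "env_instance_count" { type = number } in variables.tf. The envs/ folder layout is hypothetical.

```shell
#!/usr/bin/env bash
# Write <dir>/<env>.tfvars for each environment, using the counts listed above.
# The default "envs" folder is a hypothetical layout.
write_env_tfvars() {
  local dir="${1:-envs}"
  local pair env count
  mkdir -p "$dir"
  for pair in dev:1 qa:2 stage:4 prod:4; do
    env="${pair%%:*}"     # text before the colon
    count="${pair#*:}"    # text after the colon
    printf 'env_instance_count = %s\n' "$count" > "$dir/$env.tfvars"
  done
}

# Then plan each environment against its own values, for example:
#   write_env_tfvars envs
#   terraform plan -var-file="envs/qa.tfvars"
```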

PROTIP: Since there can be secret values, use a mechanism that guarantees the file is never uploaded into GitHub.

The terraform.auto.tfvars file should be specified in .gitignore.
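This small sketch appends ignore patterns to .gitignore only when they are not already present, so you can run it repeatedly without creating duplicates. The pattern list in the example comment is an assumption. It covers the terraform.auto.tfvars file named above plus Terraform's local state and plugin folder. Trim it to match what your team actually commits, since some teams do commit non-secret .tfvars files.

```shell
#!/usr/bin/env bash
# Append each pattern to a .gitignore file unless an identical line exists,
# so the function can be rerun safely.
ensure_gitignore_patterns() {
  local file="$1" pattern
  shift
  touch "$file"
  # Make sure the file ends with a newline before appending.
  if [[ -s "$file" && -n "$(tail -c1 "$file")" ]]; then
    echo >> "$file"
  fi
  for pattern in "$@"; do
    # -x: match the whole line; -F: treat * and . literally
    if ! grep -qxF -- "$pattern" "$file"; then
      printf '%s\n' "$pattern" >> "$file"
    fi
  done
}

# Example with patterns assumed for a Terraform repo:
#   ensure_gitignore_patterns .gitignore terraform.auto.tfvars '*.tfvars' \
#     '*.tfvars.json' '.terraform/' '*.tfstate' '*.tfstate.*' crash.log
#   git check-ignore -v terraform.auto.tfvars   # shows which rule matched
```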

In VIDEO: “Bootstrapping Terraform Secrets with 1Password CLI”, Jillian (Wilson) Morgan shows that plaintext secrets can be replaced with a reference to 1Password protocol “op://”.

- Within 1Password, the “devs” vault, “gcp” item, “credentials” field:

GOOGLE_CREDENTIAL=op://devs/gcp/credential

- To inject the secrets at run time, run this command (for example, from a keyboard alias):

op run --env-file=.env -- terraform apply
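Two facts make this pattern work. First, op run resolves op:// references found in environment variables, including those loaded with --env-file, before it starts the command. Second, Terraform reads any variable named TF_VAR_<name> as the value of input variable <name>. The sketch below writes a .env file that combines the two and adds a hypothetical guard that rejects plaintext values. The vault, item, and variable names are made up. It uses GOOGLE_CREDENTIALS (plural) because that is the variable name the Google provider looks for.

```shell
#!/usr/bin/env bash
# Write an example .env whose values are 1Password secret references
# (op://<vault>/<item>/<field>). Vault, item, and field names are hypothetical.
# GOOGLE_CREDENTIALS is read by the Google provider; TF_VAR_db_password
# becomes the Terraform input variable "db_password".
write_example_env() {
  cat > "${1:-.env}" <<'EOF'
GOOGLE_CREDENTIALS=op://devs/gcp/credential
TF_VAR_db_password=op://devs/database/password
EOF
}

# Hypothetical guard: fail if any KEY=VALUE line holds a plaintext value
# instead of an op:// reference.
env_file_has_only_references() {
  local file="$1" line value
  while IFS= read -r line || [[ -n "$line" ]]; do
    [[ -z "$line" || "$line" == \#* ]] && continue   # skip blanks and comments
    value="${line#*=}"
    if [[ "$value" != op://* ]]; then
      echo "plaintext value for ${line%%=*} in $file" >&2
      return 1
    fi
  done < "$file"
  return 0
}

# Example:
#   env_file_has_only_references .env && op run --env-file=.env -- terraform apply
```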

References:

- https://developer.1password.com/docs/cli

- https://1password.developers

- https://join.slack.com/t/1password-devs/shared_invite/zt-1halo11ps-609pEv96xZ3LtX_VEOfJQA

References:

- https://www.terraform.io/language/modules/develop/structure

- https://www.baeldung.com/ops/terraform-best-practices

What’s HCL?

Terraform defined HCL (HashiCorp Configuration Language) for both human and machine consumption. HCL is defined at https://github.com/hashicorp/hcl and described at https://www.terraform.io/docs/configuration/syntax.html.

Terraform also accepts JSON syntax, which suits files created by programs. Such files are named with the suffix “.tf.json”.

HCL is less verbose than JSON and more concise than YAML.

Unlike JSON, HCL allows comments (as does YAML). Single-line comments start with # (pound sign, as in Bash scripts) or // (double forward slashes).

Multi-line comments are wrapped between /* and */.

Unlike Bash, HCL has no backslash line continuation. Instead, a long expression can span several lines inside parentheses, brackets, or braces.

Files in the root folder:

The root folder of the repo should contain these files:

- .gitignore - files and folders to not add and push to GitHub

- LICENSE - (no file extension) to define the legal aspects (whether it’s open source)

The root folder of each module should contain these files:

- README.md describes to humans how the module works. REMEMBER: Don’t put a README file within internal module folders, because its existence determines whether a module is considered usable by an external user.

- main.tf is the entry point of the module.

- providers.tf specifies which providers (aws, azurerm, etc.) the module uses and how they are configured.

- outputs.tf defines data values output by a terraform run.

- versions.tf pins the required versions of Terraform and of each provider.

- variables.tf declares each input variable used in the *.tf files, with a description and an optional default value.
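The sketch below scaffolds the module files listed above so that every project starts from the same layout. The file contents are minimal placeholders of my own, not an official template. The version constraint in versions.tf is only an example.

```shell
#!/usr/bin/env bash
# Create the conventional files of a Terraform module in directory $1,
# without overwriting files that already exist.
scaffold_module() {
  local dir="${1:?usage: scaffold_module DIR}"
  local f
  mkdir -p "$dir"
  for f in main.tf variables.tf outputs.tf providers.tf versions.tf README.md; do
    [[ -e "$dir/$f" ]] && continue   # never clobber existing work
    case "$f" in
      versions.tf)
        # Example constraint only; pin what your team actually tests against.
        printf 'terraform {\n  required_version = ">= 1.0"\n}\n' > "$dir/$f" ;;
      README.md)
        printf '# %s\n\nDescribe what this module creates, its inputs, and its outputs.\n' \
          "$(basename "$dir")" > "$dir/$f" ;;
      *)
        printf '# %s\n' "$f" > "$dir/$f" ;;   # an HCL comment as placeholder
    esac
  done
}

# Example:
#   scaffold_module modules/iam
#   terraform fmt -check && terraform validate
```

For internal-only nested modules, delete the generated README.md, per the REMEMBER note above.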

Folders in the project:

Within folder examples

Within folder test

Within folder modules

IAM (folder)

* README.md

* variables.tf

* main.tf

* outputs.tf

Network (folder)

* …

Vault

* install-vault

* install-vault.sh

* run-vault

* run-vault.sh

* vault-cluster

* vault-security-group-rules

* vault-elb

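REMEMBER: Terraform loads all .tf files in a directory together and treats them as one configuration. Splitting code across files, or naming files so they sort alphabetically, does not change the order of operations. Terraform derives that order from the dependencies among resources.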

Among Terraform usage workflow stages:

6) Code cloud resources in HCL

Links to Certification Exam Objectives

Pluralsight has a 20-question assessment, “Managing Infrastructure with Terraform Skill IQ”, covering (Google):

- Add Terraform to a CI/CD Pipeline

- Automate infrastructure deployment

- Create and import Modules

- Implement Terraform with AWS

- Implement Terraform with Google Cloud Platform

- Implement Terraform with Microsoft Azure

- Import data from external sources

- Install and Run Terraform

- Manage State in Terraform

- Troubleshoot Terraform Issues

This page houses both links and my notes to pass the HashiCorp Terraform Associate certification (at https://hashicorp.com/certification/terraform-associate). For only $70.50 (paid after picking a time on PSI Online, terrible), correctly answer 70%+ of 57 multiple-choice/fill-in questions to give your employers some assurance that you have a practical knowledge of these topics:


- Understand infrastructure as code (IaC) concepts

a. Explain what IaC is

b. Describe advantages of IaC patterns

- Understand Terraform’s purpose (vs other IaC)

a. Explain multi-cloud and provider-agnostic benefits

b. Explain the benefits of state management

- Understand Terraform basics

a. Handle Terraform and provider installation and versioning

b. Describe plugin based architecture

c. Demonstrate using multiple providers

d. Describe how Terraform finds and fetches providers (from the Terraform Registry)

e. Explain when to use and not use provisioners and when to use local-exec or remote-exec

- Use the Terraform CLI (outside of core workflow)

a. Given a scenario: choose when to use terraform fmt to format code

b. Given a scenario: choose when to use terraform taint to taint Terraform resources

c. Given a scenario: choose when to use terraform import to import existing infrastructure into your Terraform state

d. Given a scenario: choose when to use terraform workspace to create workspaces

e. Given a scenario: choose when to use terraform state to view Terraform state

f. Given a scenario: choose when to enable verbose logging and what the outcome/value is

- Interact with Terraform modules

a. Contrast module source options

b. Interact with module inputs and outputs

c. Describe variable scope within modules/child modules

d. Discover modules from the public Terraform Module Registry

e. Defining module version

- Navigate Terraform workflow

a. Describe Terraform workflow ( Write -> Plan -> Create )

b. Initialize a Terraform working directory (terraform init)

c. Validate a Terraform configuration (terraform validate)

d. Generate and review an execution plan for Terraform (terraform plan)

e. Execute changes to infrastructure with Terraform (terraform apply)

f. Destroy Terraform managed infrastructure (terraform destroy)

- Implement and maintain state

a. Describe default local backend

b. Outline state locking

c. Handle backend authentication methods

d. Describe remote state storage mechanisms and supported standard backends

e. Describe effect of Terraform refresh on state

f. Describe backend block in configuration and best practices for partial configurations

g. Understand secret management in state files

- Read, generate, and modify configuration

a. Demonstrate use of variables and outputs

b. Describe secure secret injection best practice

c. Understand the use of collection and structural types

d. Create and differentiate resource and data configuration

e. Use resource addressing and resource parameters to connect resources together

f. Use Terraform built-in functions to write configuration

g. Configure resource using a dynamic block

h. Describe built-in dependency management (order of execution based)

- Understand Terraform Cloud and Enterprise capabilities

a. Describe the benefits of Sentinel, registry, and workspaces

b. Differentiate OSS and TFE workspaces

c. Summarize features of Terraform Cloud

VIDEO: Registering for the test takes several steps:

- Clicking on “Register Exam” takes you to the Zendesk Exam Portal.

- Read the Exam Handbook. Key points:

- 48 hour cancellation

- There is an Exam FAQ

- Click “Click here to go to the exam platform”, then “Continue with GitHub”.

- Authorize HashiCorp to use your GitHub credentials to register for the exam at the PSI Exam website

- Click “Schedule” to the right of “HashiCorp Certified: Terraform Associate - Ready to Schedule”

- Select Country & Timezone. Click a day in green. Click a range of hours. Click a specific hour. Click Continue.

- In the pop-up, click Continue for “Booking created successfully”. Close.

- Now you see the $70.50. Check “I acknowledge”… Pay Now.

- FAQ: After passing the exam, share your badge at

- In your resume, add a link to your certification as:

https://www.credly.com/earned/badge/[unique certification ID]

The certification expires after 2 years.

HashiCorp doesn’t have a deeper/more difficult “Professional level” cert at time of writing.

Infrastructure as Code (IaC) Provisioning Options

The objective is to accelerate work AND save money by automating the configuration of servers and other resources quicker and more consistently than manually clicking through the GUI. That’s called the “Infrastructure-Application Pattern (I-A)”.

| Since | Community | Type | Infra. | Lang. | Agent | Master | |

|---|---|---|---|---|---|---|---|

| CFN/CF | 2011 Medium | Small*1 | Immutable | Declarative | No | No | |

| Heat | 2012 Low | Small | Immutable | Declarative | No | No | |

| Terraform | 2014 Low | Huge | Immutable | Declarative | No | No | |

| Pulumi> | 2017 Low | New | Mutable | Procedural | Yes | Yes |

Terraform installs infrastructure in cloud and VM as workflows.

Kubernetes orchestrates (brings up and down) Docker containers.

Pulumi (see my notes on it)

dagger.io

Terraform vs. AWS Cloud Formation

| Feature | CloudFormation | Terraform |

|---|---|---|

| Multi-Cloud providers support | AWS only | AWS, GCE, Azure (20+) |

| Source code | closed-source | open source |

| Open Source contributions? | No | Yes (GitHub issues) |

| State management | by AWS | in Terraform & AWS S3 |

| GUI | Free Console | licen$ed* |

| Configuration format | JSON & YAML templates | HCL & JSON |

| Execution control* | No | Yes |

| Iterations | No | Yes |

| Manage already created resources | No (Change Set?) | Yes (hard) |

| Failure handling | Optional rollback | Fix & retry |

| Logical comparisons | No | Limited |

| Extensible Modules | No | Yes |

To get AWS certified, you’re going to need to know CloudFormation.

Licensing open source for GUI

Although Terraform is “open source”, the Terraform GUI requires a license.

Paid Pro and Premium licenses of Terraform add version control integration, MFA security, HA, and other enterprise features.

References:

- https://www.stratoscale.com/blog/data-center/choosing-the-right-provisioning-tool-terraform-vs-aws-cloudformation/

CF to TF Tool

PROTIP: TOOL: cf2tf is a Python module that converts CloudFormation templates to Terraform configuration files so you use https://console.aws.amazon.com/cloudformation less. It’s by “shadycuz” Levi Blaney, author of the Hypermodern Cloudformation series.

- Beware of the CF code refactoring that another has needed to do: https://medium.com/trackit/aws-cloudformation-to-terraform-translation-dacfc96e3994

- Review issues that remain open for cf2tf: https://github.com/DontShaveTheYak/cf2tf/issues

- Install Python with Conda or virtualenv (see my https://wilsonmar.github.io/python-install/).

- Create a folder to clone into (such as $HOME/Projects).

- Create and activate a virtual Python environment:

conda create -n py310 python=3.10
conda activate py310
python --version

- Clone the repo:

git clone https://github.com/DontShaveTheYak/cf2tf --depth 1
cd cf2tf

- Install Python module locally:

pip install cf2tf --upgrade
cf2tf my_template.yaml

- Download a CloudFormation template (my_template.yaml) that creates an AWS resource stack, such as:

- lambda_hello.yaml from https://leaherb.com/aws-lambda-tutorial-101/ describes creating a Lambda function using CF YAML.

- ec2_stack1.yaml from https://github.com/smoya/cloudformation-hello-world/blob/master/hello_world_demo.json creates a Docker in ECR (Elastic Container Registry), RDS MySQL database, EC2 with VPC, subnet, Route, Security Group, IG, ELB, AutoScaling, CloudWatch alarms

- https://reflectoring.io/getting-started-with-aws-cloudformation/ describes creating an ECS cluster running a Docker container using CF files from https://github.com/stratospheric-dev/stratospheric/tree/main/chapters/chapter-1/cloudformation

- https://www.youtube.com/watch?v=YXVCdGyHDSk shows how to create a table with DBQueryPolicy within a pre-defined DynamoDB from https://gist.github.com/awssimplified/f96437a5a3beed65bf4782eb7b69afa4

- Validate the template within AWS:

aws cloudformation validate-template --template-body file://lambda_hello.yaml

- Make sure it really creates the stack and resource within AWS:

aws cloudformation create-stack --stack-name hello-lambda-stack \
  --template-body file://lambda_hello.yaml \
  --capabilities CAPABILITY_NAMED_IAM

- Run:

cd ~/Projects/cf2tf
cf2tf lambda_hello.yaml > main.tf

- Compare input and output I got:

CloudFormation template (lambda_hello.yaml):

Resources:
  HelloLambdaRole:
    Type: AWS::IAM::Role
    Properties:
      RoleName: HelloLambdaRole
      AssumeRolePolicyDocument:
        Statement:
          - Effect: Allow
            Principal:
              Service: lambda.amazonaws.com
            Action: sts:AssumeRole
  HelloLambdaFunction:
    Type: AWS::Lambda::Function
    Properties:
      FunctionName: HelloLambdaFunction
      Role: !GetAtt HelloLambdaRole.Arn
      Runtime: python3.7
      Handler: index.my_handler
      Code:
        ZipFile: |
          def my_handler(event, context):
              message = 'Hello Lambda World!'
              return message

Terraform HCL generated by cf2tf:

resource "aws_iam_role" "hello_lambda_role" {
  name = "HelloLambdaRole"
  assume_role_policy = {
    Statement = [
      {
        Effect = "Allow"
        Principal = {
          Service = "lambda.amazonaws.com"
        }
        Action = "sts:AssumeRole"
      }
    ]
  }
}

resource "aws_lambda_function" "hello_lambda_function" {
  function_name = "HelloLambdaFunction"
  role = aws_iam_role.hello_lambda_role.arn
  runtime = "python3.7"
  handler = "index.my_handler"
  code_signing_config_arn = {
    ZipFile = "def my_handler(event, context):
    message = 'Hello Lambda World!'
    return message"
  }
}

The generated HCL needs hand-editing before terraform plan accepts it. First, assume_role_policy expects a JSON string, so wrap the map in jsonencode(). Second, the inline ZipFile code was mapped onto code_signing_config_arn, which expects the ARN of a code-signing configuration. Third, a quoted HCL string cannot span several lines.

- Try one with more resources:

cf2tf ~/Projects/cf2tf/ec2_stack1.yaml >main.tf

- Make it work:

terraform init
terraform plan
terraform apply

- Verify the stack was created:

aws cloudformation describe-stacks --stack-name hello-lambda-stack

- Delete the resources so you don’t get charged:

terraform destroy

- Confirm resource deletion using the AWS Console.
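In the comparison above, cf2tf turned CloudFormation logical IDs such as HelloLambdaRole into snake_case Terraform resource names such as hello_lambda_role. The sketch below reproduces only that naming rule with sed, which helps when hand-writing references to converted resources. It is an illustration, not cf2tf's actual implementation.

```shell
#!/usr/bin/env bash
# Convert a CloudFormation logical ID (CamelCase) to a snake_case name,
# e.g. HelloLambdaRole -> hello_lambda_role. Illustration only.
cfn_id_to_tf_name() {
  printf '%s\n' "$1" \
    | sed -E 's/([a-z0-9])([A-Z])/\1_\2/g' \
    | tr '[:upper:]' '[:lower:]'
}

# Example: build the Terraform address of the converted IAM role.
#   echo "aws_iam_role.$(cfn_id_to_tf_name HelloLambdaRole).arn"
```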

References:

- https://docs.aws.amazon.com/AWSCloudFormation/latest/UserGuide/GettingStarted.Walkthrough.html

- https://docs.aws.amazon.com/AWSCloudFormation/latest/UserGuide/aws-template-resource-type-ref.html

- https://dev.to/johntellsall/convert-cloudformation-to-terraform-in-two-seconds-6mm using CoPilot

- https://stackoverflow.com/questions/64048258/how-to-convert-cloudformation-template-to-terraform-code

- https://www.stratoscale.com/blog/data-center/choosing-the-right-provisioning-tool-terraform-vs-aws-cloudformation/

Installation options

A) Manually type commands in Terminal. This is tedious and time-consuming because there are several utilities to install.

B) Use a GitHub Template to install utilities and create a Terraform template.

Manual install

There is a version manager to enable you to install several versions of Terraform: https://github.com/aaratn/terraenv

- Terraform is open-sourced in GitHub. Metadata about each release is at:

https://github.com/hashicorp/terraform/releases

PROTIP: Terraform is written in the Go language, so (unlike Java) there is no separate VM to download.

- To download an install file for your operating system, click the list of Terraform versions at:

https://releases.hashicorp.com/terraform/

PROTIP: But instead of manually downloading, get the latest version automatically using an installer by following instructions below.

- After installation, get the version number of Terraform:

terraform --version

The response I got (at time of writing) is the version and what operating system:

Terraform v1.1.16 on darwin_amd64

If you need to upgrade:

Your version of Terraform is out of date! The latest version is 1.1.6. You can update by downloading from https://www.terraform.io/downloads.html
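The sketch below shows the kind of check behind that "out of date" message. It compares the installed version with a target version using sort -V (version sort). GNU coreutils provides sort -V, but the sort bundled with older macOS releases may lack it. Parsing the first line of terraform --version is an assumption based on the output shown above.

```shell
#!/usr/bin/env bash
# Return 0 if version $1 is strictly older than version $2.
# sort -V orders 1.1.9 before 1.1.16, which a plain text sort would not.
version_lt() {
  [[ "$1" != "$2" ]] &&
    [[ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n1)" == "$1" ]]
}

# Extract e.g. "1.1.6" from a first line such as "Terraform v1.1.6".
installed_terraform_version() {
  terraform --version | head -n1 | sed -E 's/^Terraform v([0-9][0-9.]*).*/\1/'
}

# Example:
#   if version_lt "$(installed_terraform_version)" 1.1.6; then echo "upgrade needed"; fi
```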

Install on MacOS using tfenv

- A search through brew:

brew search terraform

==> Formulae hashicorp/tap/consul-terraform-sync terraform-provider-libvirt hashicorp/tap/terraform ✔ terraform-rover hashicorp/tap/terraform-ls terraform@0.11 iam-policy-json-to-terraform terraform@0.12 terraform ✔ terraform@0.13 terraform-docs terraform_landscape terraform-inventory terraformer ✔ terraform-ls terraforming terraform-lsp If you meant "terraform" specifically: It was migrated from homebrew/cask to homebrew/core.

Note there are back versions of terraform (11, 12, 13, etc.).

Standard Homebrew install

- Is there a brew for Terraform?

brew info terraform

Yes, but:

terraform: stable 1.1.6 (bottled), HEAD Tool to build, change, and version infrastructure https://www.terraform.io/ Conflicts with: tfenv (because tfenv symlinks terraform binaries) /usr/local/Cellar/terraform/1.1.6 (6 files, 66.7MB) * Poured from bottle on 2022-02-19 at 10:43:46 From: https://github.com/Homebrew/homebrew-core/blob/HEAD/Formula/terraform.rb License: MPL-2.0 ==> Dependencies Build: go ✘ ==> Options --HEAD Install HEAD version ==> Analytics install: 47,985 (30 days), 134,541 (90 days), 525,730 (365 days) install-on-request: 44,756 (30 days), 125,786 (90 days), 493,333 (365 days)

Its popularity has grown since an earlier snapshot, taken when 1.0.5 was the stable version:

terraform: stable 1.0.5 (bottled), HEAD ... install: 41,443 (30 days), 125,757 (90 days), 480,344 (365 days) install-on-request: 38,839 (30 days), 118,142 (90 days), 455,572 (365 days)

- PROTIP: Although you can brew install terraform, don’t. To easily switch among several installed versions of Terraform, install and use the Terraform version manager:

brew install tfenv

The response at time of writing:

==> Downloading https://github.com/tfutils/tfenv/archive/v2.2.0.tar.gz Already downloaded: /Users/wilson_mar/Library/Caches/Homebrew/downloads/d5f3775943c8e090ebe2af640ea8a89f99f7f0c2c47314d76073410338ae02de--tfenv-2.2.0.tar.gz 🍺 /usr/local/Cellar/tfenv/2.2.0: 23 files, 79.8KB, built in 8 seconds

The source for tfenv has moved over time. The download URL above points to https://github.com/tfutils/tfenv. Earlier it was https://github.com/Zordrak/tfenv, and before that https://github.com/kamatama41/tfenv.

When tfenv is used, do not install Terraform from the website or using:

brew install terraform

- Install the latest version of terraform using tfenv:

tfenv install latest

The response:

Installing Terraform v1.0.5 Downloading release tarball from https://releases.hashicorp.com/terraform/1.0.5/terraform_1.0.5_darwin_amd64.zip ######################################################################### 100.0% Downloading SHA hash file from https://releases.hashicorp.com/terraform/1.0.5/terraform_1.0.5_SHA256SUMS ==> Downloading https://ghcr.io/v2/homebrew/core/pcre/manifests/8.45 ######################################################################## 100.0% ==> Downloading https://ghcr.io/v2/homebrew/core/pcre/blobs/sha256:a42b79956773d ==> Downloading from https://pkg-containers.githubusercontent.com/ghcr1/blobs/sh ######################################################################## 100.0% ==> Downloading https://ghcr.io/v2/homebrew/core/grep/manifests/3.7 ######################################################################## 100.0% ==> Downloading https://ghcr.io/v2/homebrew/core/grep/blobs/sha256:180f055eeacb1 ==> Downloading from https://pkg-containers.githubusercontent.com/ghcr1/blobs/sh ######################################################################## 100.0% ==> Installing dependencies for grep: pcre ==> Installing grep dependency: pcre ==> Pouring pcre--8.45.mojave.bottle.tar.gz 🍺 /usr/local/Cellar/pcre/8.45: 204 files, 5.5MB ==> Installing grep ==> Pouring grep--3.7.mojave.bottle.tar.gz ==> Caveats All commands have been installed with the prefix "g". 
If you need to use these commands with their normal names, you can add a "gnubin" directory to your PATH from your bashrc like: PATH="/usr/local/opt/grep/libexec/gnubin:$PATH" ==> Summary 🍺 /usr/local/Cellar/grep/3.7: 21 files, 941.7KB ==> Upgrading 1 dependent: zsh 5.7.1 -> 5.8_1 ==> Upgrading zsh 5.7.1 -> 5.8_1 ==> Downloading https://ghcr.io/v2/homebrew/core/zsh/manifests/5.8_1 ######################################################################## 100.0% ==> Downloading https://ghcr.io/v2/homebrew/core/zsh/blobs/sha256:a40a54e4b686eb ==> Downloading from https://pkg-containers.githubusercontent.com/ghcr1/blobs/sh ######################################################################## 100.0% ==> Pouring zsh--5.8_1.mojave.bottle.tar.gz 🍺 /usr/local/Cellar/zsh/5.8_1: 1,531 files, 13.5MB Removing: /usr/local/Cellar/zsh/5.7.1... (1,515 files, 13.3MB) ==> Checking for dependents of upgraded formulae... ==> No broken dependents found! ==> Caveats ==> grep All commands have been installed with the prefix "g". If you need to use these commands with their normal names, you can add a "gnubin" directory to your PATH from your bashrc like: PATH="/usr/local/opt/grep/libexec/gnubin:$PATH" Unable to verify OpenPGP signature unless logged into keybase and following hashicorp Archive: tfenv_download.qXFIgg/terraform_1.0.5_darwin_amd64.zip inflating: /usr/local/Cellar/tfenv/2.2.2/versions/1.0.5/terraform Installation of terraform v1.0.5 successful. To make this your default version, run 'tfenv use 1.0.5'

PROTIP: The above commands create folder .terraform.d in your $HOME folder, containing files checkpoint_cache and checkpoint_signature. See HashiCorp’s blog about version announcements.

- Make the latest the default:

tfenv use 1.0.5

Switching default version to v1.0.5 Switching completed

- Proceed to Configuration below.
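Instead of relying on one global default, tfenv can also read a version pinned in a .terraform-version file in the project folder, so everyone on the team gets the same binary. The sketch below writes the pin and reads it back. The helper names are hypothetical. The tfenv install and tfenv use commands appear only as comments, because they need tfenv and network access.

```shell
#!/usr/bin/env bash
# Pin a Terraform version for the project in .terraform-version (read by tfenv).
pin_terraform_version() {
  local version="$1" dir="${2:-.}"
  printf '%s\n' "$version" > "$dir/.terraform-version"
}

# Print the pinned version, or "latest" if the project has no pin.
pinned_terraform_version() {
  local dir="${1:-.}"
  if [[ -f "$dir/.terraform-version" ]]; then
    head -n1 "$dir/.terraform-version"
  else
    echo latest
  fi
}

# Typical use inside the project folder:
#   pin_terraform_version 1.0.5
#   tfenv install   # with no argument, resolves the version from .terraform-version
#   tfenv use
```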

Install on Windows

- Open a Command Prompt window as Administrator.

- Install Chocolatey cmd:

- Install Terraform using Chocolatey:

choco install terraform -y

The response at time of writing:

Chocolatey v0.10.8 Installing the following packages: terraform By installing you accept licenses for the packages. Progress: Downloading terraform 0.10.6... 100% terraform v0.10.6 [Approved] terraform package files install completed. Performing other installation steps. The package terraform wants to run 'chocolateyInstall.ps1'. Note: If you don't run this script, the installation will fail. Note: To confirm automatically next time, use '-y' or consider: choco feature enable -n allowGlobalConfirmation Do you want to run the script?([Y]es/[N]o/[P]rint): y Removing old terraform plugins Downloading terraform 64 bit from 'https://releases.hashicorp.com/terraform/0.10.6/terraform_0.10.6_windows_amd64.zip' Progress: 100% - Completed download of C:\Users\vagrant\AppData\Local\Temp\chocolatey\terraform\0.10.6\terraform_0.10.6_windows_amd64.zip (12.89 MB). Download of terraform_0.10.6_windows_amd64.zip (12.89 MB) completed. Hashes match. Extracting C:\Users\vagrant\AppData\Local\Temp\chocolatey\terraform\0.10.6\terraform_0.10.6_windows_amd64.zip to C:\ProgramData\chocolatey\lib\terraform\tools... C:\ProgramData\chocolatey\lib\terraform\tools ShimGen has successfully created a shim for terraform.exe The install of terraform was successful. Software installed to 'C:\ProgramData\chocolatey\lib\terraform\tools' Chocolatey installed 1/1 packages. See the log for details (C:\ProgramData\chocolatey\logs\chocolatey.log).

- Proceed to Configuration below.

Install on Linux

- https://github.com/migibert/terraform-role Ansible role to install Terraform on Linux machines

- https://github.com/hashicorp/docker-hub-images/tree/master/terraform builds Docker images for using the terraform command line program.

To manually install on Ubuntu:

- On a console (after substituting the current version):

TF_VERSION=0.12.0
curl -O https://releases.hashicorp.com/terraform/${TF_VERSION}/terraform_${TF_VERSION}_linux_amd64.zip
sudo apt-get install unzip
sudo unzip terraform_${TF_VERSION}_linux_amd64.zip -d /usr/local/bin/
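The download URLs on this page all follow one pattern: https://releases.hashicorp.com/terraform/VERSION/terraform_VERSION_OS_ARCH.zip (see the tfenv and Chocolatey output above). The sketch below builds that URL for the current machine, so the version number is typed only once. The mapping from uname output to HashiCorp's OS and architecture names (Linux to linux, x86_64 to amd64, aarch64/arm64 to arm64) is an assumption that covers common cases.

```shell
#!/usr/bin/env bash
# Build the HashiCorp release URL for a Terraform version, OS, and architecture.
terraform_zip_url() {
  local version="$1" os="$2" arch="$3"
  echo "https://releases.hashicorp.com/terraform/${version}/terraform_${version}_${os}_${arch}.zip"
}

# Map this machine's kernel name and CPU to the names used in release file names.
current_os() { uname -s | tr '[:upper:]' '[:lower:]'; }   # Linux -> linux, Darwin -> darwin
current_arch() {
  case "$(uname -m)" in
    x86_64|amd64)  echo amd64 ;;
    aarch64|arm64) echo arm64 ;;
    *) uname -m ;;   # unknown: pass through unchanged
  esac
}

# Example (needs network access and sudo):
#   v=1.0.5
#   curl -fsSLO "$(terraform_zip_url "$v" "$(current_os)" "$(current_arch)")"
#   sudo unzip -o "terraform_${v}_$(current_os)_$(current_arch).zip" -d /usr/local/bin/
```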

Install on Linux using Docker

- To install Docker CE on Linux:

sudo apt-get update
sudo apt-get install \
  apt-transport-https \
  ca-certificates \
  curl \
  software-properties-common
curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -
sudo add-apt-repository \
  "deb [arch=amd64] https://download.docker.com/linux/ubuntu \
  $(lsb_release -cs) \
  stable"
sudo apt-get update
sudo apt-get install docker-ce

Configure Terraform logging

-

To see Terraform’s internal logs, set TF_LOG to one of the levels TRACE, DEBUG, INFO, WARN, or ERROR. These are listed from most to least verbose, as in Log4j. TRACE shows everything:

export TF_LOG=TRACE

-

Define where logs are saved:

export TF_LOG_PATH=/tmp/terraform.log

-

Define the above settings in a shell file used to call Terraform.
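Below is a minimal sketch of such a shell file. It keeps any TF_LOG or TF_LOG_PATH value the caller already set, and otherwise defaults to TRACE written to /tmp/terraform.log. The function name tf_logged and the TF_BIN override are hypothetical conveniences, not Terraform features:

```shell
#!/usr/bin/env bash
# Run a terraform command with logging turned on.
# Caller-provided TF_LOG / TF_LOG_PATH win; otherwise TRACE to /tmp/terraform.log.
# TF_BIN lets you point at a different terraform binary (defaults to "terraform").
tf_logged() {
  TF_LOG="${TF_LOG:-TRACE}" \
  TF_LOG_PATH="${TF_LOG_PATH:-/tmp/terraform.log}" \
    "${TF_BIN:-terraform}" "$@"
}
```

Usage: tf_logged plan, or TF_LOG=DEBUG tf_logged apply. Because the variables are set only for that one command, they do not leak into the rest of your shell session.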

Install Utilities

You’ll need a text editor with plugins to view HCL:

VSCode

-

Use VSCode (installed by default) to view blocks in Terraform HCL files:

cd ~/clouddrive/terraform-on-azure/02-init-plan-apply-destroy/01-intro
code main.tf

-

In VSCode, press shift+command+X, or click the gear (Manage) icon at the bottom-left and select “Extensions”. Then install the “HashiCorp Terraform” extension from HashiCorp.

-

If you use Azure, install the “Azure Terraform” extension from Microsoft.

CAUTION: Avoid installing anything from publishers you don’t know.

-

Define .gitignore for use with VSCode:

-

Review code:

NOTE: Each key = value pair is an argument, which assigns the value of an expression (here a text string) to a name.

The HCL code must declare the (cloud) provider it uses, which for Azure is “azurerm”.

NOTE: Multiple providers can be specified in the same HCL file.

Each Provider is a plugin that enables Terraform to interface with the API layer of various cloud platforms and environments.

-

Search Terraform’s Azure Provider docs for “azurerm_resource_group” (the “Resource Group” resource):

https://registry.terraform.io/providers/hashicorp/azurerm/latest/docs
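The “Define .gitignore for use with VSCode” step above has no listing. Below is a minimal sketch that appends common Terraform and VSCode ignore patterns without duplicating lines already present. The function name add_tf_gitignore is hypothetical, and the pattern list is a common starting point rather than an official one. HashiCorp recommends committing .terraform.lock.hcl, so that file is deliberately not ignored:

```shell
#!/usr/bin/env bash
# Append Terraform/VSCode ignore patterns to a .gitignore file (default ./.gitignore),
# skipping any pattern that is already present as a whole line.
add_tf_gitignore() {
  local file="${1:-.gitignore}" pattern
  touch "$file"
  for pattern in \
      '.terraform/' \
      '*.tfstate' \
      '*.tfstate.*' \
      'crash.log' \
      '*.tfvars' \
      '.vscode/'; do
    # -x whole line, -F fixed string, -q quiet: only append when missing
    grep -qxF -- "$pattern" "$file" || printf '%s\n' "$pattern" >> "$file"
  done
}
```

State files and .tfvars files are listed because they can contain secrets such as credentials. Remove the *.tfvars line if your project commits non-secret variable files.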

JetBrains add-ins

https://plugins.jetbrains.com/plugin/7808-terraform-and-hcl

Issues to look for

There are several industry standards which prescribe “controls” and configurations:

- AWS Foundations referenced by the AWS Security Hub service

-

CIS

- SOC2

- ISO

-

FedRAMP

- PCI

- HIPAA

- NIST

- HITRUST

- etc.

The trouble with written policies and standards is that they are published as PDF and Excel files, so few people read them.

Terraform Enterprise TFLint

An important distinction between CloudFormation and Terraform is that Terraform tracks the state of each resource.

Terraform Enterprise automatically stores the history of all state revisions. https://www.terraform.io/docs/state

VIDEO: Terraform Enterprise has producers (experts) and read-only consumers. Terraform Enterprise processes HCL with auditing policies, as does the linter TFLint (https://github.com/terraform-linters/tflint), which is installed on Windows using choco install tflint. See https://spin.atomicobject.com/2019/09/03/cloud-infrastructure-entr/

[8:25] Terraform Enterprise enforces “policy as code” which automates the application of what CIS (Center for Internet Security) calls (free) “benchmarks” – secure configuration settings for hardening operating systems, for AWS settings at (the 155 page) https://www.cisecurity.org/benchmark/amazon_web_services/.

- For example, is a resource set to public instead of private?

Terratest from Gruntwork.

https://itnext.io/automatic-terraform-linting-with-reviewdog-and-tflint-f4fb66034abb

Programs processing Policy as Code

PROTIP: To prevent vulnerabilities before they are manifested in resources on the internet, several groups have created programs which can automatically attest to whether a Terraform file actually meets or violates specific policies defined as code.

This enables a CI/CD pipeline to stop processing if a Terraform file fails a scan.
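Below is a minimal sketch of such a gate. It assumes checkov and tfsec are on the PATH; run_checkov, run_tfsec, and scan_gate are hypothetical helper names. Both Checkov and tfsec exit non-zero when they find failed checks, unless their --soft-fail option is given:

```shell
#!/usr/bin/env bash
# Thin wrappers so every scanner takes a folder as its only argument.
run_checkov() { checkov -d "$1" --quiet; }   # --quiet: show failed checks only
run_tfsec()   { tfsec "$1"; }

# scan_gate DIR SCANNER...: run each scanner on DIR, report every failure,
# and return non-zero if any scanner returned non-zero.
scan_gate() {
  local dir="$1"; shift
  local scanner failed=0
  for scanner in "$@"; do
    echo "== ${scanner} ${dir}"
    if ! "$scanner" "$dir"; then
      echo "FAILED: ${scanner}" >&2
      failed=1
    fi
  done
  return "$failed"
}
```

In a CI step, scan_gate terraform/aws run_checkov run_tfsec || exit 1 stops the pipeline. Running every scanner before failing, rather than stopping at the first one, shows all findings in a single run.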

github.com/iacsecurity/tool-compare details each policy check and which tool performs them:

-

OSS Python-based Checkov by Bridgecrew.io (acquired by Palo Alto Networks)

-

Freemium Indeni Cloudrail

-

OSS Go-based Kics (Keeping Infrastructure as Code Secure) by Checkmarx

-

Freemium Snyk

-

OSS Terrascan by Accurics, which uses Rego policies (https://github.com/accurics/terrascan).

-

OSS Go-based tfsec by Aqua Security, which has a VSCode extension (Homebrew installs it in /usr/local/Cellar/tfsec/0.56.0: 5 files, 16.9MB)

-

SonarQube

-

Terraform FOSS with Atlantis

-

Terraform Enterprise Sentinel

STAR: Rob Schoening presents an evaluation of the above tools.

Post deployment, Pulumi finds unused resources daily and shuts them down.

Install Security Scanners

https://github.com/iacsecurity/tool-compare lists specific tests (of vulnerability) and which products can detect each.

Checkov is an OSS static scanner of Terraform, AWS CloudFormation, and Azure ARM templates.

Cloudrail from Indeni is a freemium scanner utility that audits Terraform IaC code for security concerns. It calls itself “context-aware”: whereas Terratest requires that you deploy the infrastructure and run tests against the live infrastructure, Cloudrail takes a hybrid (SAST+DAST) approach. It parses static TF files into a database (of resources in a Python object) and “continuously” compares that against the live infrastructure, held in a separate Python object fetched dynamically by its Dragoneye data collector (for AWS and Azure).

When run on local environments, security scanning achieves “shift left”.

Install Checkov scanner

- If you prefer using Conda, install it and set up an environment first.

-

The Terraform files can be analyzed (before they become resources) using static scanners such as tfsec or Checkov (Twitter: #checkov). To install Checkov and list its options:

pip3 install -U checkov
checkov --help

- Expand your Terminal to full screen.

-

Let’s start by scanning a single tf file within terragoat/terraform/aws (see “Terragoat for learning” below to get it):

checkov -f db-app.tf > db-app.txt

It takes several minutes.

The > db-app.txt redirect above sends the output to a file, overwriting the output of any previous run.

Because Checkov is the free tier of the licensed Bridgecrew platform, the program asks:

Would you like to “level up” your Checkov powers for free? The upgrade includes:
• Command line docker Image scanning
• Free (forever) bridgecrew.cloud account with API access
• Auto-fix remediation suggestions
• Enabling of VS Code Plugin
• Dashboard visualisation of Checkov scans
• Integration with GitHub for:
  ◦ Automated Pull Request scanning
  ◦ Auto remediation PR generation
• Integration with up to 100 cloud resources for:
  ◦ Automated cloud resource checks
  ◦ Resource drift detection
and much more...
It's easy and only takes 2 minutes. We can do it right now!
To Level-up, press 'y'...
Level up? (y/n): _

-

Edit the output file.

By bridgecrew.io | version: 2.0.829
Update available 2.0.829 -> 2.0.873
Run pip3 install -U checkov to update
terraform scan results:
Passed checks: 12, Failed checks: 14, Skipped checks: 0
Check: CKV_AWS_211: "Ensure RDS uses a modern CaCert"
    PASSED for resource: aws_db_instance.default
    File: /db-app.tf:1-41

As of this writing, Checkov has 50 built-in checks. Each check has a Guide at https://docs.bridgecrew.io/docs/general-policies which defines recommended Terraform coding.

-

Remove the file to save disk space.

-

Scan a directory (folder), such as from Terragoat:

checkov -d aws
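To pull the totals out of a saved text report, such as the db-app.txt from the earlier step, you can match the summary line shown above. Below is a minimal sketch that assumes the report contains lines of the form "Passed checks: N, Failed checks: N, Skipped checks: N", one per framework scanned. The function name checkov_failed_total is hypothetical:

```shell
#!/usr/bin/env bash
# Sum every "Failed checks: N" figure in a saved checkov text report.
# Prints 0 when no summary line is found.
checkov_failed_total() {
  sed -n 's/.*Failed checks: \([0-9][0-9]*\).*/\1/p' "$1" |
    awk '{ total += $1 } END { print total + 0 }'
}
```

For example, [ "$(checkov_failed_total db-app.txt)" -eq 0 ] succeeds only when nothing failed. Note that checkov itself already exits non-zero on failures, so this is mainly useful for reports saved earlier.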

Install full-fast-fail scanner

This tool is not yet in Homebrew, so clone it and run its checker script:

git clone https://github.com/JamesWoolfenden/full-fast-fail --depth 1
cd full-fast-fail
./checker.sh

Terragoat for learning

(It’s in the same vein as Rhino Security Labs’ penetration testing training tool, CloudGoat.)

-

Get it on your laptop after navigating to a folder:

git clone https://github.com/bridgecrewio/terragoat --depth 1
cd terragoat/terraform

-

Vulnerabilities designed into Terragoat are for specific services in AWS, Azure, and GCP clouds. Let’s look at aws services:

ls aws

Response:

db-app.tf - database application
ec2.tf - Elastic Compute Cloud
ecr.tf - Elastic Container Registry
eks.tf - Elastic Kubernetes Service
elb.tf - Elastic Load Balancer
es.tf - Elasticsearch Service
iam.tf - Identity and Access Management
kms.tf - Key Management Service
lambda.tf - Lambda functions
neptune.tf - Neptune graph database
rds.tf - Relational Database Service
s3.tf - Simple Storage Service

PROTIP: These are only a few of the 200+ AWS services.

QUESTION: How will you know when new AWS services become available or deprecated?

Known-bad IaC for training

-

To use the Terraform code to create resources, I created a setup.sh based on the CLI commands in Terragoat’s README.md file.

-

Edit my setup.sh file to override default values in file consts.tf:

- “acme” for company_name in TF_VAR_company_name

- “mydevsecops” for environment in TF_VAR_environment

- TF_VAR_region

-

Edit my setup.sh file to override default values in file providers.tf:

provider "aws" {
  alias      = "plain_text_access_keys_provider"
  region     = "us-west-1"
  access_key = "AKIAIOSFODNN7EXAMPLE"
  secret_key = "wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY"
}

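SECURITY WARNING: Replace hard-coded key values with variables, or let the AWS provider read credentials from environment variables (AWS_ACCESS_KEY_ID, AWS_SECRET_ACCESS_KEY) or a named profile.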

https://github.com/bridgecrewio/terragoat#existing-vulnerabilities-auto-generated

-

Sign up for the #CodifiedSecurity Slack community (confirm by email), and join #airiam.

https://medium.com/bridgecrew/terragoat-vulnerable-by-design-terraform-training-by-bridgecrew-524b50728887
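The setup.sh mentioned above is not reproduced here. Below is a minimal sketch of its variable overrides. It assumes Terragoat’s consts.tf declares the company_name, environment, and region variables described above. The function name terragoat_env and the region us-west-2 are assumptions. Terraform reads any environment variable named TF_VAR_<name> as the value of input variable <name>, and the AWS provider can find credentials through AWS_PROFILE instead of literals in providers.tf:

```shell
#!/usr/bin/env bash
# Hypothetical setup.sh helper: export overrides for Terragoat's variables.
# Terraform maps TF_VAR_<name> to input variable <name>.
# Values already set by the caller are kept.
terragoat_env() {
  export TF_VAR_company_name="${TF_VAR_company_name:-acme}"
  export TF_VAR_environment="${TF_VAR_environment:-mydevsecops}"
  export TF_VAR_region="${TF_VAR_region:-us-west-2}"   # assumed region
  # Let the AWS provider find credentials via a named profile
  # instead of access_key/secret_key literals in providers.tf.
  export AWS_PROFILE="${AWS_PROFILE:-default}"
}
```

Usage, from terragoat/terraform/aws: source setup.sh, call terragoat_env, then run terraform init and terraform plan.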

Gruntwork’s sample

Gruntwork.io offers, for $795/month ($9,500/year), access to their 250,000-line Reference Architecture of (opinionated) starter code to create a production-worthy “defense in depth” setup on AWS.

An additional $500 a month gets you access to their Reference Architecture Walkthrough video class, but previews of the class are free.

The Gruntwork Production Framework organizes app solutions for going to production on the public cloud.

For those who can’t subscribe, Yevgeniy (Jim) Brikman (ybrikman.com, co-founder of the DevOps-as-a-Service company Gruntwork.io) has generously shared:

-

https://github.com/brikis98/infrastructure-as-code-talk/tree/master/terraform-configurations

-

https://github.com/brikis98/terraform-up-and-running-code provides bash scripts that install Apache, PHP, and a sample PHP app on an Ubuntu server. It also has automated tests, written in Ruby, that make sure the app returns “Hello, World”. The repo is referenced by the book Terraform Up & Running (O’Reilly, $11.99 on Amazon) and its website:

terraformupandrunning.com

The sample scripts referenced by this tutorial contain mustache-style variable markup so that you can generate a set for your organization.

-

https://www.terraform.io/docs/providers/azurerm/r/virtual_machine_scale_set.html

-

https://training.gruntwork.io/courses/reference-architecture-walkthrough/lectures/4211191
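The mustache-style markup mentioned above means placeholders written as {{name}}. Below is a minimal sketch of filling them in with sed. It assumes single-line values and placeholder names made only of letters, digits, and underscores. render_template and the company_name example are hypothetical, not Gruntwork’s or this tutorial’s actual tooling:

```shell
#!/usr/bin/env bash
# render_template KEY=VALUE...: read a template on stdin and replace each
# {{KEY}} with VALUE. Values must be single-line.
render_template() {
  if [ "$#" -eq 0 ]; then cat; return; fi
  local pair key value
  local -a expr=()
  for pair in "$@"; do
    key="${pair%%=*}"
    value="${pair#*=}"
    # Escape \ & and the | delimiter so sed inserts the value literally
    value="$(printf '%s' "$value" | sed 's/[\\&|]/\\&/g')"
    expr+=(-e "s|{{${key}}}|${value}|g")
  done
  sed "${expr[@]}"
}
```

For example, render_template company_name=acme < main.tf.template > main.tf would produce one organization’s copy. A real templating engine handles sections and escaping rules that this sketch ignores.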

Cloudposse

https://github.com/cloudposse has mostly Terraform code for AWS, such as https://github.com/cloudposse/load-testing

Standard Files and Folders Structure