bomonike

Overview

- Why This?

- PCEP-30-02 Exam Outline

- Debugging using IDE

- Pydantic

- Use Python Code Scans

- Lexis

- Built-in Methods/Functions

- print, printf, echo

- While Loop

- Magic underlines

- Operators

- What Day and Time is it?

- Run Duration calculations

- Swapping

- Sorting

- Avoid divide by zero errors

- Random

- Environment Variable Cleansing

- Object-oriented class functions

- Blob vs. File vs. Text

- GUI

- Cloud

- Web Scraper

- Movie Recommender

- GCP

- OpenCV

- GIS

- String Handling

- Regular Expressions

- Unicode Superscript & Subscript characters

- Functions

- Internationalization & Localization (I18N & L18N)

- Version management

- File open() modes

- File Copy commands

- Error Exception handling

- Operating system

- Command generator

- CLI code enhancement

- Handling Arguments

- Python in the Cloud

- Sets: Day of week Set handling

- Lists

- Tuples

- Range

- List comprehension

- Classes and Objects

- Protocols

- Secure coding

- Insecure code in Pygoat

- Logging for Monitoring

- Use assert only during testing

- Concurrency Programming

- Bit-wise operators

- Stegnography

- Parallel Computing

- ODBC

- Referenes

- CS50P Harvard

- Cybrary.it

- CS50 Python class at Project STEM

- Streamlit

- Docstrings

- Google Style Docstrings

- Compilers

- More about Python

NOTE: Content here are my personal opinions, and not intended to represent any employer (past or present). “PROTIP:” here highlight information I haven’t seen elsewhere on the internet because it is hard-won, little-know but significant facts based on my personal research and experience.

This is the last in my series of articles about Python:

-

Put learning and creativity to work on the python-samples.py program described at:

wilsonmar.github.io/python-samples -

Handle the intricacies of installing Python and associated utilities (pyenv, pip, venv, conda, etc.) at:

wilsonmar.github.io/python-install -

Handle the intricacies of installing Jupyter which runs Python at:

wilsonmar.github.io/jupyter -

Know who provides Python coding tutorials at:

wilsonmar.github.io/python-tutorials -

Analyze the topics covered in certification tests at:

wilsonmar.github.io/python-certs -

Know Python language coding tricks and techniques at:

wilsonmar.github.io/python-coding

Why This?

In my python-tutorials page I list the many tutorials on YouTube and paid subscription channels.

What I don’t like about them I aim to fix on this page.

-

Over-emphasis on games rather than practical usage that improves productivity, etc. Making trivial games cheapens Python as if it doesn’t matter.

-

Assumption that people invoke Python programs and wait to manually answer input() commands. In real life, Python programs are executed automatically. So provide data in CLI invocation parameters and CSV files. Verify whether all input data is correct before making changes.

-

Lack of security features, such as retrieving API keys from a memory variable or (better yet) from a Key Vault rather that having them hard-coded in the program source code.

-

Over-emphasize the use of a particular IDE add-ons which those who use Google Colab can’t take advantage of. The most popular IDEs for Python are:

-

VSCode from Microsoft (free) has add-ons for Python, but some fear vulnerabilities from unknown authors. BLOG: Setup VSCode for Python Development https://code.visualstudio.com/docs/editor/extension-marketplace

-

PyCharm (FREE or PRO $89/$71/$53 year)

-

Cloud9 free on-line on AWS (which automatically generates new credentials every 5 minutes or on browser Reset*)

-

Stryker?

-

PCEP-30-02 Exam Outline

This article contains topic names and links to the PCEP™ – Certified Entry-Level Python Programmer (Exam PCEP-30-02) last updated: February 23, 2022: Sections:

- 7 items (18%) Computer Programming and Python Fundamentals

- 8 items (29%) Control Flow – Conditional Blocks and Loops

- 7 items (25%) Data Collections – Tuples, Dictionaries, Lists, and Strings

- 8 items (28%) Functions and Exceptions

1: Computer Programming and Python Fundamentals

1.1 – Understand fundamental terms and definitions

- interpreting and the interpreter, compilation and the compiler

- language elements,

- lexis, syntax analysis (parsing), semantics (applying language rules such as type mismatch)

- Python keywords, instructions

- indenting

- REPL (Read Evaluate Print Loop interactive), control-D to exit()

- comments. PROTIP: Text between triple-doublespace are actually string objects in the byte code

- literals: Boolean, integer, floating-point numbers, scientific notation, strings. b’data’ literals can be split().

- the print() function

- the input() function

- numeral systems (W: binary, octal, decimal, hexadecimal) W, *

- numeric operators: ** * / % // + – // is floor division. Py3: Division always returns a float. Num (mod) % 2 is 0 for odd, 1 for even

- string operators: * +

- assignments and shortcut operators

2: Data Types, Evaluations, and Basic I/O Operations (20% - 6 exam items)

- operators: unary and binary, priorities, and binding

- bitwise operators VIDEO: ~ & ^ | << >> (Mandelbrot)

- Boolean operators: not and or

- Boolean expressions (True/False)

- relational operators ( == != > >= < <= ), building complex Boolean expressions

-

accuracy of floating-point numbers 4.5e9 == 4.5 * (10 ** 9) == 4.5E9 == 4.5E+9

- basic input and output operations using the input(), print(), int(), float(), str(), len() functions

- formatting print() output with end= and sep= arguments

- type casting

- basic calculations

- simple strings: constructing, assigning, indexing, slicing comparing, immutability

>>> "{} {} cost ${}".format(6, "bananas", 1.74 * 6)<br />

'6 bananas cost $10.44'

2: Control Flow Control – Conditional Blocks and Loops (29% - 8 exam items)

2.1 – Make decisions and branch the flow with the if instruction

- conditional statements: if, if-else, if-elif, if-elif-else

- multiple conditional statements

- nesting loops and conditional statements

2.2 – Perform different types of iterations

- the pass instruction

- building loops: while, for, range(), in

- iterating through sequences

- expanding loops: while-else, for-else

- controlling loop execution: break, continue

3: Data Collections – Lists, Tuples, and Dictionaries (25% - 7 exam items)

3.1 – Collect and process data using lists

- simple lists: constructing vectors

- indexing and slicing

- the len() function

- lists methods: indexing, slicing, basic methods (append(), insert(), index()) and functions (len(), sorted(), etc.), del instruction, iterating lists with the for loop, initializing,

-

in and not in operators, list comprehension, copying and cloning

- lists in lists: matrices and cubes

3.2 – Collect and process data using tuples

- tuples: indexing, slicing, building, immutability

- tuples vs. lists: similarities and differences, lists inside tuples and tuples inside lists

3.3 Collect and process data using dictionaries

- dictionaries: building, indexing, adding and removing keys, iterating through dictionaries as well as their keys and values, checking key existence, keys(), items() and values() methods

3.4 - Operate with Strings

- strings ASCII, UNICODE, UTF-8 (rendered/transmitted as pairs of bytes in norsk.encode(“utf-8”)

- indexing, slicing, immutability

- escaping using the \ character

- quotes and apostrophes inside strings

- multiline strings

-

basic string functions & methods: upper(), lowe

- copying vs. cloning, string vs. string, string vs. non-string,

4: Functions and Exceptions (20% - 6 exam items)

4.1 – Decompose the code using functions

- defining and invoking your own functions and generators

- The return and yield keywords, returning results,

- the None keyword (instead of return 0)

- recursion

4.2 – Organize interaction between the function and its environment

- parameters vs. arguments,

- positional keyword and mixed argument passing,

- default parameter values

- converting generator objects into lists using the list() function

- name scopes, name hiding (shadowing), the global keyword

4.4 – Basics of Python Exception Handling

All instances in Python must be instances of a class that derives from BaseException. Before using a divide operator:

try:

a = 10/0

print (a)

except ArithmeticError:

print ("microbit-001: This raises an arithmetic exception.")

else:

print ("Success.")

- try-except / the try-except Exception

- ordering the except branches

- propagating exceptions through function boundaries

- delegating responsibility for handling exceptions

References about Exception Handling:

4.3 – Python Built-In Exceptions Hierarchy

locals()[‘builtins’]

Python inherits from the Exceptions class.

- BaseException

The BaseException class includes a with_traceback(tb) method which explicitly sets the new traceback information to the tb argument that was passed to it.

- Exception is most commonly inherited type.

- ArithmeticError when attempting to divide by zero, or when an arithmetic result would be too large for Python to accurately represent.

- AssertionError when assert statements fail

- FloatingPointError

- OverflowError

- ZeroDivisionError

- AssertionError

- Exception is most commonly inherited type.

- SystemExit

- KeyboardInterrupt when the user presses Ctrl+C or other key combination that causes an interrupt to the executing script

Abstract exceptions:

- ArithmeticError

- LookupError

- IndexError

- KeyError

- TypeError

- ValueError

Debugging using IDE

See key/value pairs without typing print statements in code, like an Xray machine:

- Click next to a line number at the left to set a Breakpoint.

- Click “RUN AND DEBUG” to see variables: Locals and Globals.

- To expand and contract, click “>” and “V” in front of items.

- “special variables” are dunder (double underline) variables.

- Under each “function variables” and special variables of their own. For a list, it’s append, clear, copy, etc.

- Under Globals are its special variable (such as file for the file path of the program) and class variables, plus an entry for each class defined in the code (such as unittest).

Pydantic

Pydantic at docs.pydantic.dev) is the most widely used data validation library for Python.

Use pydantic when you’re not in control of the data input.

Fast and extensible, Pydantic plays nicely with your linters/IDE/brain. Define how data should be in pure, canonical Python 3.8+; validate it with Pydantic. Its success means it suffers from feature creep. There’s a temptation to move other classes over to pydantic, just because pydantic also includes serialization.

It leans heavily on use of type hinting, which makes custom validation more complex than perhaps necessary.

So check if you can get away with dataclasses.

Use Python Code Scans

mypy

Static Application Security Testing (SAST) looks for weaknesses in code and vulnerable packages.

Dynamic Application Security Testing (DAST) looks for vulnerabilities that occur at runtime.

https://www.statworx.com/en/content-hub/blog/how-to-scan-your-code-and-dependencies-in-python/

A. PEP8 “lints” program code for violations of the PIP.

Other formaters: blake, ruff.

B. Bandit (open-sourced at https://github.com/PyCQA/bandit) scans python code for vulnerabilities. It decomposes the code into its abstract syntax tree and runs plugins against it to check for known weaknesses. Among other tests it performs checks on plain SQL code, which could provide an opening for SQL injections, passwords stored in code and hints about common openings for attacks such as use of the pickle library. Bandit is designed for use with CI/CD:

bandit -c bandit_yml.cfg /path/to/python/files

The bandit_yml.cfg configuration file contains YAML lines such as this to specify types of assertion to skip.

# bandit_cfg.yml

skips: ["B101"] # skips the assert check

Bandit throws an exit status of 1 whenever it encounters any issues, thus terminating the pipeline.

The report it generates include the number of issues separated by confidence and severity according to three levels: low, medium, and high.

C. safety checks for dependencies containing vulnerabilities (CVEs) identified.

https://pypi.org/project/scancode-toolkit/

D. Scancode ScanCode scans Python code for license, copyright, package and their documented dependencies and other interesting facts.

E. GitHub’s Advanced Security scans Python code based on CodeQL logic specifications.

https://7451111251303.gumroad.com/l/wotve

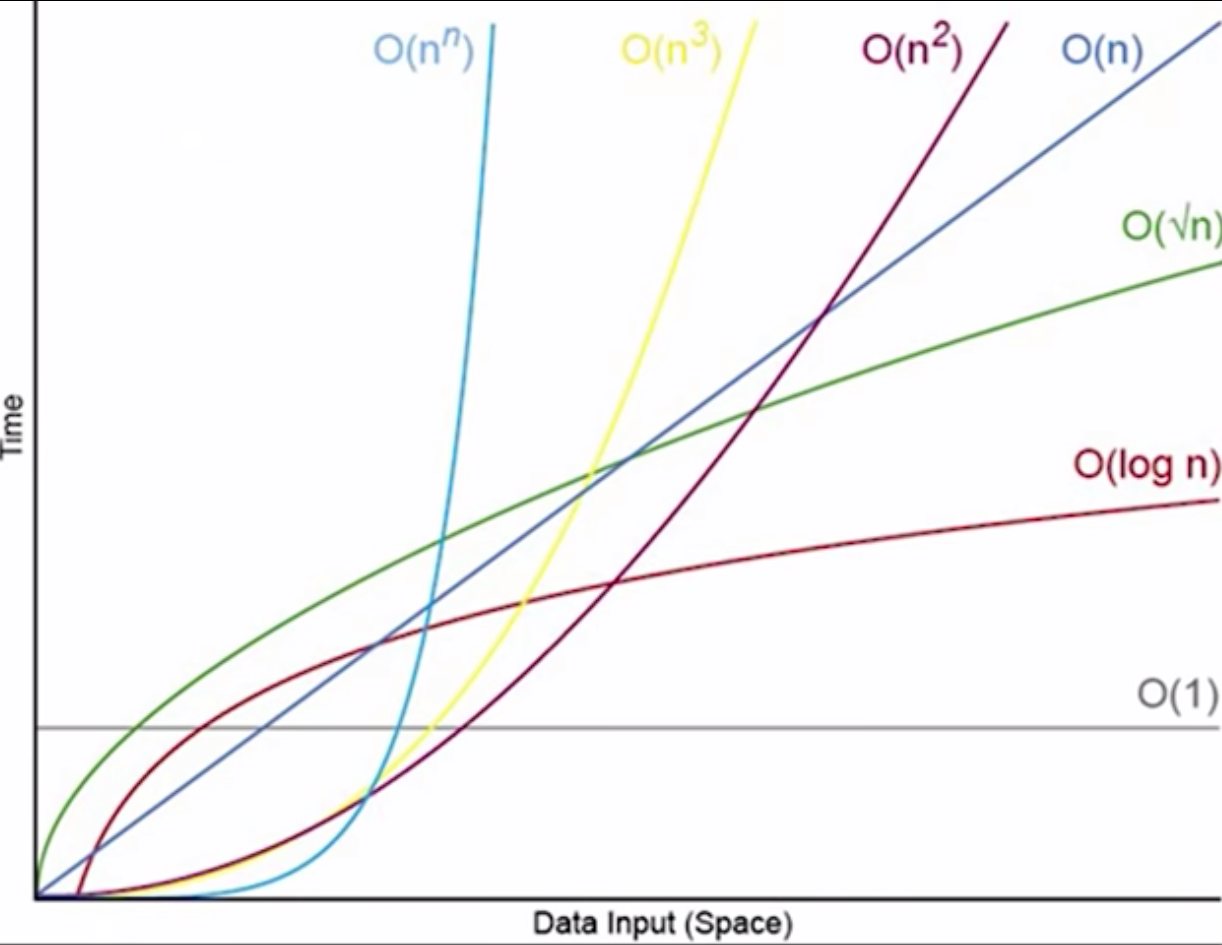

Time Complexity Big Oh notation

There is time complexity, data complexity, etc.

Big-O notation summarizes Time Complexity analysis, which estimates how long it can take for an algorithm to complete based on its structure. That’s worst-case, before optimizations such as memoization.

From https://bigocheatsheet.com, in the list of Big O values for sorting:

BigO References: VIDEO

- VIDEO Cheet Sheat & bootcamp from ZeroToMastery.io</a> Python Solutions:

- https://www.youtube.com/watch?v=x2CRZaN2xgM by ByteByteGo (the best)

- https://github.com/theja-m/Data-Structures-and-Algorithms

- https://github.com/VicodinAbuser/ZTM-DS-and-Algo-Python

- https://www.youtube.com/watch?v=v4cd1O4zkGw by HackerRank - rules

- https://www.youtube.com/watch?v=V6mKVRU1evU

- https://www.youtube.com/watch?v=zUUkiEllHG0

- https://www.youtube.com/watch?v=oJ5s2hs_cKk

- https://www.youtube.com/watch?v=itn09C2ZB9Y

- https://www.youtube.com/watch?v=jUy5N-3RAjo

- https://www.youtube.com/watch?v=kS_gr2_-ws8

- https://www.youtube.com/watch?v=QnRx6V8YQy0

-

https://www.youtube.com/watch?v=tNrNLoCqzco

- https://www.youtube.com/watch?v=TWIlXV1z3gk combinations of 77 items (asymptope) to put in backpack based on weight - genetic algorithm with mutation.

- https://www.youtube.com/watch?v=nhT56blfRpE Python code for genetic algorithm & https://www.youtube.com/watch?v=4XZoVQOt-0I

- https://www.youtube.com/watch?v=aOsET8KapQQ gen music with human rating

Let’s go from the most efficient (at the bottom-right) to the least efficient at the upper-left, where n is the number of input items in the list being processed:

-

O(1) or n0 is constant run time as more data (n) is processed. This happens when a lookup is done rather than calculating. Examples are the push, pop, lookup of an array; insert or remove a hash map/set.

Use of “memoization” is ideal, but is typically not possible for some algorithms.

0Use of Modulus would result in “O(n)” (linear) growth in time to run as the dataset grows.

- O(n) or (n1) linear time occurs the increase in list size (n) increases the number of steps in direct proportion to the input size. VIDEO: This happens when

- values within an array are summed together, in a nested loop through all elements.

- during an exhaustive search though every item. ``` nums = [1,2,3] sum(nums) # sum the array print(100 in nums) # search nums.insert(1, 100) # insert in the middle nums.remove(100) # remove from the middle

import heapq heapq.heapify(nums) # to build heap

# nested loop (monotonic stack or sliding window) ```

- O(log n) or log2n - logarithmic time VIDEO: occurs during binary search, (like ripping up portions of a phone book) where steps increase at a slower rate than input list size:

| List | Steps | | 1 | 1 | | 10 | 4 | | 100 | 7 | | 1000 | 10 | | .... | 35 | horizontal asymtope - O(n2) - n squared quadratic time VIDEO: occurs in a nested loop when steps increase in proportion to the input size squared (to the power of 2). A selection sort starts from the front of the list, and looks at each unordered item to find the next smallest value in the list and swapping it with the current value. This is also when the minimax algorithm is used.

| List | Steps | | 1 | 1 | | 10 | 45 | | 100 | 4950 | | 1000 | 499500 |The standard form, When graphed, a quadratic equation forms a parabola - a U-shaped curve used to illustrate trajectories of moving objects, areas of shapes, and in financial calculations. 𝑎𝑥2 + 𝑏𝑥 + 𝑐 = 0

-

nk - such as n3 and higher degree polynomials are called “Polynomial time” to group run times which do not increase faster than nk.

-

“Superpolynomial time” describes any run time that increase faster than nk, below:

-

O(nn) - exponential time occurs when a algorithm looks at every permutation of values, such as all possible value which brute-force guessing passwords. For example, 28 to the power of 8 is when guessing 8 positions of 28 alphanumatic characters. When 10 number values and special characters are added for 98 possible values, it’s 98 to the power of 8, a very large number. Such are considered “unreasonable” to make it harder to brute-force guess.

-

O(n!) factorial time VIDEO: where 5! = 5x4x3x2x1 - the product of all positive integers less than or equal to n. Factorials are used to represent permutations and combinations. Factorials determine the number of possible topping combinations - graph problems such as the “Traveling Salesman”.

Used to purposely create complex calculations, such as for encryption.

The asymptope is when a number reaches an extremely large number that is essentially infinite.

Depth-first trees would have steeper (logarithmic) Time Complexity.

References:

- KhanAcad explanation of run-time efficiency.

- https://www.youtube.com/watch?v=7VHG6Y2QmtM

GitHub repos with the highest stars:

- https://github.com/joeyajames/Python with YouTube explanations

- https://github.com/TheAlgorithms/Python

- https://github.com/geekcomputers/Python

- https://github.com/Show-Me-the-Code/python

- https://github.com/flypythoncom/python

Faster routes to machine code

By default, Python comes with the CPython interpreter (command cythonize) to generate machine-code. When speed is needed, such as in loops, custom C/C++ extensions are created. Additional speed is obtained by adding before nested loop code directives and decorators:

# cython: language_level=3, boundscheck=False, wraparound=False

import cython

@cython.locals(i=cython.int,j=cython.int,a=list[cython.int],b=list[cython.int])

VIDEO: benchmarks Numba, mypyc, Taichi (the fastest). Alternately, code compiled using Codon by Exaloop tool “41,212 times faster” than the standard Python interpreter.

Condon is a new python compiler that uses the LLVM framework to compile directly to machine code. Condon can also make use of the thousands of processors on a GPU to process matrix, graphical, and mathematical operations without using a library like numpy, scikit-learn, scipy, and game library pygame. However, Conda cannot use modules like typing functools such as wraps, which provides contextual information for decorators.

Lexis

From https://learning.oreilly.com/library/view/python-in-a/0596100469/ch04s01.html

The syntax of the Python programming language is the set of rules that defines how a Python program will be written and interpreted (by both the runtime system and by human readers). The Python language has many similarities to Perl, C, and Java. However, there are some definite differences between the languages. It supports multiple programming paradigms, including structured, object-oriented programming, and functional programming, and boasts a dynamic type system and automatic memory management.

Python’s syntax is simple and consistent, adhering to the principle that “There should be one— and preferably only one —obvious way to do it.” The language incorporates built-in data types and structures, control flow mechanisms, first-class functions, and modules for better code reusability and organization. Python also uses English keywords where other languages use punctuation, contributing to its uncluttered visual layout.

The language provides robust error handling through exceptions, and includes a debugger in the standard library for efficient problem-solving.

Python’s syntax, designed for readability and ease of use, makes it a popular choice among beginners and professionals alike.

Reserved Keywords

VIDEO: W: Here are the keywords Python has reserved for itself, so they can’t be used as custom identifiers (variables).

- _ (soft keyword)

- and

- as

- assert

- async

- await

- break - force escape from for/while loop

- case (soft keyword)

- class

- continue - force loop again next iteration

- def - define a custom function

- del - del list1[2] # delete 3rd list item, starting from 0.

- elif - else if

- else

- except

- False - boolean

- finally - of a try

- for = iterate through a loop

- from

- global = defines a variable global in scope

- if

- import = make the specified package available

- in

- is

- lambda - if/then/else in one line

- match (soft keyword)

- None - absence of value.

- nonlocal

- not

- or

- pass - (as in the game Bridge) instruction to do nothing (instead of return or yield with value)

- raise - raise NotImplementedError() throws an exception purposely

- return

- True - Boolean

- try - VIDEO

- while

- with

- yield - resumes after returning a value back to the caller to produce a series of values over time.

NOTE: match, case and _ were introduced as keywords in Python 3.10.

The list above can be retrieved (as an array) by this code after typing python for the REPL (Read Evaluate Print Loop) interactive prompt:

from keyword import kwlist, softkwlist

def display_keywords() -> None: 1usage

print('Keywords:') # not alphabetically

for i, kw in enumerage(kwlist, start=1):

print(f'{i:2}: {kw})

print('Software keywords')

for i, skw in enumerate(softwlist, start=1):

print(f'{i:2}: {skw}')

def main() -> None: 1usage

display_keywords()

if __name__ == '__main__':

main()

Soft keywords:

- _ (magic)

- cate

- match

- type (added by Python 3.12)

Press control+D to exit anytime.

Built-in Methods/Functions

Don’t create custom functions with these function names reserved.

Know what they do. See https://docs.python.org/3/library/functions.html

- abs() = return absolute value

- any()

- all()

- ascii()

- bin()

- bool() = convert to boolean data type

- bytearray()

- callable()

- bytes()

- chr()

- compile()

- classmethod()

- complex()

- delattr()

- dict()

- dir()

- divmod()

- enumerate()

- staticmethod()

- filter()

- eval() = dynamically execute code

- float() = convert to floating point data type

- format()

- frozenset()

- getattr() = get attribute

- globals()

- exec()

- hasattr()

- help()

- hex() = hexadecimal counting

- hash()

- input() from human user

- id()

- isinstance() - checks if the object (first argument) is an instance or subclass of classinfo class (second argument). True/False

- int() = integer

- issubclass()

- iter()

- list() = function

- locals()

- len([1, 2, 3]) is 3.

- max() = maximum value

- min() = minimum value

- map()

- next()

-

memoryview()

- object()

- oct() = octa (8) counting

- ord()

- open()

- pow()

- print() = output to CLI terminal

-

property()

- range()

- repr()

- reversed()

-

round()

- set()

- setattr()

- slice() - extract substring

- sorted()

- str() = convert to string data type

-

sum()

- tuple() Function

- type() = display the type

- vars()

- zip() = combine two interable arrays

- import()

- super()

The first thing that most tutorials cover is this:

print, printf, echo

PROTIP: Don’t just print out the value. Include the variable name, such as:

print("=== var1=",var1)

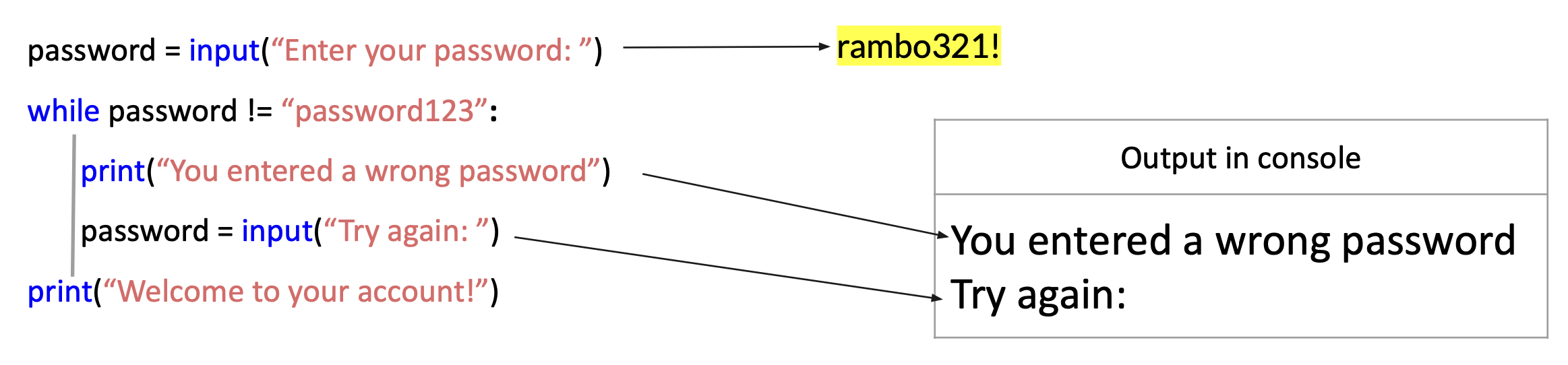

While Loop

CAUTION: What’s wrong with this sample code?

PROTIP: Passwords and other secrets should not be requested in an input() prompt because that would expose the passwords in CLI logs.

PROTIP: Passwords and other secrets should not be stored in programming code.

PROTIP: The way to verify passwords is not to store them as the raw password which the user typed in but as a hash of what the password the user typed in. The hash would also be created with a “salt” to ensure randomness. To verify whether the user provides the correct password, the program would add the salt to calculate the hash the user provides, then compare the two.

PROTIP: The user should be provided with a set limited number of tries. When exceeded, the user and IP address used should be locked out, entered in central (SIEM) security logs, and reported as a Security incident.

Magic underlines

VIDEO from idently.co: Underlines in numbers are ignored by Python:

n: int = 1_000_000_000

Specify command separator:

num: float = 1_000_000_000.342

print(f'{num:_.3f}')

Right-align 20 spaces:

print(f'{var:>20}')

Center align within 20 | characters with the cap character:

print(f'{var:|^0}:')

The : character at the end of the string is a pass-through.

A colon after a variable begins a formatting specification:

from datetime import datetime

now: datetime = datetime.now()

print(f'{now:%d.%m.%y(%H:%M:%D)})

Function return Not None

Returning 0 on error can be confused with the number 0 as a valid response.

So to avoid the confusion, return the Python reserved word “None”:

result = safe_square_root(4)

<strong>if result is not None:</strong> # happy path:

value = result.pop() # pop up from stack.

print(value)

else: # notice we're not checking for None.

# calling function does not need to handle error:

# an error occurred, but encapsulated to be forwarded and processed upstream:

print("unable to compute square root")

Function:

def safe_square_root(x):

try:

return [math.sqrt(x)] # in a stack.

except ValueError:

return None # using reserved word.

The parameter (x) is what is declared going into the function.

The value passed through when calling the function is called an argument.

Operators

DEFINITION: Walrun operator :=

Floor division Operators

This feature was added in Python 3.

11 // 5 uses “floor division” to return just the integer (integral part) of 2, discarding the remainder. This can be useful to efficiently solve the “Prefix Sums CountDiv” coding interview challenge: “Write a function … that, given three integers A, B and K, returns the number of integers within the range [A..B] that are divisible by K”:

def solution(a, b, k):

return 0 if b == 0 else int(b // k - (a - 1) // k)

Instead of a “brute force” approach which has linear time complexity — O(n), the solution using floor division is constant time - O(1).

Modulo operator

11 % 5 uses the (percent sign), the modulo operator to divide 11 by the quotient 5 in order to return 1 because two 5s can go into 11, leaving 1 left over, the remainder. Modulus is used in circular buffers and hashing algorithms.

def solution(A, K):

# A is the array.

# K is the increment to move.

result = [None] * len(A) # initialize result array for # items in array

for i in range(len(A)):

# Use % modulo operator to calculate new index position 0 - 9:

result[(i + K) % len(A)] = A[i]

print(f'i={i} A[i]={A[i]} K={K} result={result} ')

return result

print(solution([7, 2, 8, 3, 5], 2))

Modulu is also used in this

What Day and Time is it?

The ISO 8601 format contains 6-digit microseconds (“123456”) and a Time Zone offset (“-5.00” being five hours West of UTC):

- 2025-12-22T07:53:19.123456-05:00

# import datetime

start = datetime.datetime.now()

# do some stuff ...

end = datetime.datetime.now()

elapsed = end - start

print(elapsed)

# or

print(elapsed.seconds,":",elapsed.microseconds)

Some prefer to display local time with a Time Zone code from Python package pytz or zulu.

PROTIP: Servers within enterprises and military run in UTC time and Logs should be output in UTC time rather than local time,

- 2024-02-22T12:53:19.123456

datetime.datetime.now() provides microsecond precision:

References:

- https://www.geeksforgeeks.org/get-current-time-in-different-timezone-using-python/

Timezone handling

During Debian OS 12 install from iso file, a time zone is requested to be manually selected. After boot-up:

- Check the current timezone with bash timedatectl

- Set the timezone to UTC with bash sudo timedatectl set-timezone Etc/UTC Alternately, reconfigure the timezone data with bash sudo dpkg-reconfigure tzdata then select “None of the above” from the Continents list, then select “UTC” from the second list: Follow the prompts to navigate through the menus and select Etc or None of the above, then choose UTC.

NOTE: On macOS, timezone data are in a binary file at /etc/localtime.

Within Python, there are several ways to detect time zone:

from dateutil import tz

local_timezone = tz.tzlocal()

print("dateutil local_timezone=",local_timezone)

Use the dateutil library to read /etc/localtime and get the timezone-aware datetime object:

from datetime import datetime

local_now = datetime.now().astimezone()

local_timezone = local_now.tzinfo

print("zoneinfo local_timezone=",local_timezone)

from zoneinfo import ZoneInfo

from datetime import datetime

local_timezone = datetime.now(ZoneInfo("localtime")).tzinfo

print("zoneinfo local_timezone=",local_timezone)

Use the tzlocal library to obtain the IANA time zone name (e.g., ‘America/New_York’). But it varies across operating systems.

import tzlocal

local_timezone = tzlocal.get_localzone_name()

print("tzlocal local_timezone=",local_timezone)

Once a datetime has a tzinfo, the astimezone() strategy supplants new tzinfo

# astimezone() defaults to the local time zone when no argument is provided.

from datetime import datetime

local_now = datetime.now().astimezone()

local_timezone = local_now.tzinfo

print("astimezone local_timezone=",local_timezone)

Timing Attacks

A malicious use of precise microseconds timing code is used by Timing Attacks based on the time it takes for an application to authenticate a password to determine the algorithm used to process the password. In the case of Keyczar vulnerability found by Nate Lawson, a simple break-on-inequality algorithm was used to compare a candidate HMAC digest with the calculated digest. A value which shares no bytes in common with the secret digest returns immediately; a value which shares the first 15 bytes will return 15 compares later.

Similarly, PDF: entropy

PROTIP: Use the secrets.compare_digest module (introduced in Python 3.5) to check passwords and other private values. It uses a constant amount of time to process every request.

Functions hmac.compare_digest() and secrets.compare_digest() are designed to mitigate against timing attacks.

http://pypi.python.org/pypi/profilehooks

REMEMBER: Depth-First Seach (DFS) uses a stack, whereas Breadth-First Search (BFS) use a queue.

VIDEO: The Sliding Window

VIDEO: FullStack’s REACTO framework during coding interviews:

- Repeat the question

- Examples

- Approach

- Code

- Test

- Optimization

Run Duration calculations

Several packages, functions, and methods are available. They differ by:

- the type of duration they report: wall-clock time or CPU time

- how they treat time zone changes during the recording period

-

how much precision they report (down to microseconds)

-

Wall-clock time (aka clock time or wall time) is the total time elapsed you can measure with a stopwatch. It is the difference between the time at which a program finished its execution and the time at which the program started. It includes waiting time for resources.

- CPU Time is how much time the CPU was busy processing programming instructions, not including time waiting for other task to complete (like I/O operations).

We want both reported.

timeit.default_timer() is time.perf_counter() on Python 3.3+.

The same program run several times would report similar CPU time but varying wall-clock times due to differences in what else was taking up resources during the runs.

- time.time() returns wall-clock time.

- time.process_time() returns CPU execution time.

To time the difference between calculation strategies, new since Python 3.7 is PEP 564.

time.perf_counter() (abbreviation of performance counter) measures the elapsed time of short duration because it returns 82 nano-second resolution on Fedora 4.12. It is based on Wall-Clock Time which includes time elapsed during sleep and is system-wide. The reference point of the returned value is undefined, so that only the difference between the results of consecutive calls is valid. See https://docs.python.org/3/library/time.html#time.perf_counter

time.clock is no longer available since Python 3.8.

time.time() has a resolution of whole seconds. And in a measurement period between start and stop times, if the system time is disrupted (such as for daylight savings) its counting is disrupted. time.time() resolution will only become larger (worse) as years pass since every day adds 86,400,000,000,000 nanoseconds to the system clock, which increases the precision loss. It is called “non-monotonic” because falling back on daylight savings would cause it to report time going backwards:

start_time = time.time()

# your code

e = time.time() - start_time

time.strftime("%H:%M:%S", time.gmtime(e)) # for hours:minutes:seconds

print('{:02d}:{:02d}:{:02d}'.format(e // 3600, (e % 3600 // 60), e % 60))

timeit()

For more accurate wall-time capture, the timeit() functions disable the garbage collector.

timeit.timer() provides a nice output format of 0:00:01.946339 for almost 2 seconds.

- https://pynative.com/python-get-execution-time-of-program/

- https://docs.python.org/3/library/timeit.html

- https://www.guru99.com/timeit-python-examples.html

import timeit # built-in

# print addition of first 1 million numbers

def addition():

print('Addition:', sum(range(1000000)))

# run same code 5 times to get measurable data

n = 5

# calculate total execution time

result = timeit.timeit(stmt='addition()', globals=globals(), number=n)

# calculate the execution time

# get the average execution time

print(f"Execution time is {result / n} seconds")

timeit.timeit(stmt='pass', setup='pass', timer=<default timer>, number=1000000, globals=None)

# from timeit import default_timer as timer # from datetime import timedelta start = timer() # do some stuff ... end = timer() print(timedelta(seconds=end-start))

PEP-418 in Python 3.3 added three timers:

time.process_time() offers 1 nano-second resolution on Linux 4.12. It does not include time during sleep.

# import time t = time.process_time() # do some stuff ... elapsed_time = time.process_time() - t

time.monotonic() is used for measurements on the order of hours/days, when you don’t care about sub-second resolution. It has 81 ns resolution on Fedora 4.12. BTW “monotonic” = only goes forward. See https://docs.python.org/3/library/time.html#time.monotonic

References:

- https://stackoverflow.com/questions/7370801/how-to-measure-elapsed-time-in-python

- https://stackoverflow.com/questions/3620943/measuring-elapsed-time-with-the-time-module/47637891#47637891

- See https://docs.python.org/3/library/datetime.html#strftime-and-strptime-format-codes

- See https://www.codingeek.com/tutorials/python/datetime-strftime/

- use the .st_birthtime attribute of the result of a call to os.stat().

- Obtain pgm start to obtain run duration at end:

- See https://www.webucator.com/article/python-clocks-explained/

- for wall-clock time (includes any sleep).

Pickle objects

Pickling is the process of converting (serializing) a (especially complex) Python object (list, dict, set, tuple, matrix) into a byte stream used to transfer to another object, over the internet, or store in a database.

- dump writes result to a file

-

load objects from a file

- dumps in-memory object to a file

- loads from file to in-memory objects

https://www.youtube.com/watch?v=wO_gVvINtg0

minmax

The difference between API, Library, Package, Module, Script, Frameworks.

A library contains several modules which are separated by its use.

http://docs.python.org/3/reference/import.html

A module is a bunch of related code saved in a file with the extension .py. Code in a module can be functions, classes, or variables.

The most popular imports include system, time, random, datetime, argparse, re (regular expressions), math, xarray, polars (for computation), seaborn (charts with themes) on top of matplatlib, pytorch, pygame, result (exception handling), pydantic (data validation), missingno, sqlmodel (ORM fastapi), beautifulsoup, python-dotenv (key value pairs in environment variables).

Packages can also contain modules and other packages (subpackages).

Packages structure Python’s module namespace by using “dotted module names”.

The ___ VScode extension squences and reformats import statements to save memory. If the program only needs a single function, only that would be imported in.

Django, Flask, Bottle are frameworks - that provide the basic flow and architecture of the application.

def celsius_to_fahrenheit(celsius):

return (celsius * 9/5) + 32

try:

celsius = float(input("Enter temperature in Celsius: "))

fahrenheit = celsius_to_fahrenheit(celsius)

print(f"{celsius}°C is equal to {fahrenheit:.2f}°F")

f"{fahrenheit:.2f}"

round(fahrenheit, 2)

except ValueError:

print("Please enter a valid number for the temperature.")

Swapping

To swap values, here’s a straight-forward function:

def swap1(var1,var2):

var1,var2 = var2,var1

return var1, var2

>>> swap1(10,20) >>> 2 1

def swap2(x,y):

x = x ^ y

y = x ^ y

x = x ^ y

return x, y

>>> swap2(10,20) (20,10)

Sorting

Challenges:

Implement Binary Search and Quick Sort

Reduce Space Complexity with Dynamic programming

Techniques for calculation of nested loops is often used to shown how to reduce run times by using techniques that use more memory space. Rather than “brute-force” repeatitive computations as in the definition of how to calculate Fibonacci numbers, which by definition is based on numbers preceding it.

fib(5) = fib(4) + fib(3)

Fibonacci has the highest BigO because it uses recursive execution with Python generators. VIDEO

Memoization (sounds like memorization) is the technique of writing a function that remembers the results of previous computations.

Longest Increasing Subsequence (LIS)

That’s a technique of “Dynamic Programming” (See https://www.wikiwand.com/en/Dynamic_programming)

Dynamic programming is a catch phrase for solutions based on solving successively similar but smaller problems, using algorithmic tasks in which the solution of a bigger problem is relatively easy to find, if we have solutions for its sub-problems.

Avoid divide by zero errors

Use this in every division to ensure that a zero denominator results in falling into “else 0” rather than a “ZeroDivisionError” at run-time:

def weird_division(n, d):

# n=numerator, d=denominator.

return n / d if d else 0

Random

Flip a coin:

import random

if random.randint(0, 1) == 0:

print("heads!")

else:

print("tails!")

TODO: Roll a 6-sided die? See bomonike/memon

TODO: Roll a 20-sided die?

Environment Variable Cleansing

To read a file named “.env” at the $HOME folder, and obtain the value from “MY_EMAIL”:

import os

env_vars = !cat ~/.env

for var in env_vars:

key, value = var.split('=')

os.environ[key] = value

print(os.environ.get('MY_EMAIL')) # containing "johndoe@gmail.com"

This code is important because it keeps secrets in your $HOME folder, away from folders that get pushed up to GitHub.

There is the “load_dotenv” package that can do the above, but using native commands mean less exposure to potential attacks.

Remember that attackers can use directory traversal sequences (../) to fetch the sensitive files from the server.

Sanitize the user input using “shlex”

Object-oriented class functions

To use .maketrans() an d .translate()

- a.find(‘a’) returns the index where ‘a’ is found.

BTW not everyone is enamored with Object-Oriented Programming (OOP). Yegor Bugayenko in Russia recorded “The Pain of OOP” lectures “Algorithms hurt object thinking” May 2023 and #2 Static methods and attributes are evil, a repeat of his 11 March 2020: #1: Algorithms and Lecture #2: Static methods and attributes are evil. His 2016 ElegantObjects.org presents an object-oriented programming paradigm that “renounces traditional techniques like null, getters-and-setters, code in constructors, mutable objects, static methods, annotations, type casting, implementation inheritance, data objects, etc.”

Blob vs. File vs. Text

A “BLOB” (Binary Large OBject) is a data type that stores binary data such as mp4 videos, mp3 audio, pictures, pdf. So usually large – up to 2 TB (2,147,483,647 characters).

https://github.com/googleapis/google-cloud-python/issues/1216

https://towardsdatascience.com/image-processing-blob-detection-204dc6428dd

GUI

https://docs.python.org/3/using/ios.html

Not many develop iOS and iPad apps using Python vs. coding Swift, which is similar to Python. Learning Swift to develop an iOS application would be easier than figuring out how to develop an iOS application in Python.

But if you are hell-bent on it:

- Pythonista

- wxWidgets

- Kivy

- ReactNative JavaScript

- Xamarin (Microsoft) coding in C#, etc.

- beeware.org framework

-

VIDEO: Qt for cross-platform development in Python over C++. Nokia owned Qt and developed PySide for Python bindings. PySide2 arrived later than Riverbank’s PyQt5 under GPL (buy license to keep code close source).

PySide6 and PyQt5 released about the same time.

PySide6 is under LGPL with no sharing. In PySide6, every widget is part of two distinct hierarchies:

- the Python object hierarchy and

- the Qt layout hierarchy. How you respond or ignore events can affect how your UI behaves.

VIDEO: Qt Media player

create-gui-applications-pyside6.epub

https://github.com/mfitzp/books/tree/main/create-gui-applications/pyside6

More advanced developers integrate Python directly into an iOS project using a Python XCFramework.

Cloud

Azure storage

https://github.com/yokawasa/azure-functions-python-samples

https://chriskingdon.com/2020/11/24/the-definitive-guide-to-azure-functions-in-python-part-1/

https://chriskingdon.com/2020/11/30/the-definitive-guide-to-azure-functions-in-python-part-2-unit-testing/

https://github.com/Azure/azure-storage-python/blob/master/tests/blob/test_blob_storage_account.py

https://docs.microsoft.com/en-us/azure/storage/blobs/storage-quickstart-blobs-python

Azure Blobs

NOTE: Update of azure-storage-blob deprecates blockblobservice.

VIDEO: https://pypi.org/project/azure-storage-blob/

https://www.educative.io/edpresso/how-to-download-files-from-azure-blob-storage-using-python

https://github.com/Azure/azure-sdk-for-python/issues/12744 exists() new feature

import asyncio

async def check():

from azure.storage.blob.aio import BlobClient

blob = BlobClient.from_connection_string(conn_str="my_connection_string", container_name="mycontainer", blob_name="myblob")

async with blob:

exists = await blob.exists()

print(exists)

Azure Streams

https://blog.siliconvalve.com/2020/10/29/reading-and-writing-binary-files-with-python-with-azure-functions-input-and-output-bindings/ Reading and writing binary files with Python with Azure Functions input and output bindings

Web Scraper

Beautiful Soup

Movie Recommender

A popular project is to combine from Kagle a historical database of movies and TV shows from several streaming sites:

- Disney+

- Netflix

- Hulu

- Amazon Prime

https://github.com/dataquestio/project-walkthroughs/blob/master/movie_recs/movie_recommendations.ipynb https://files.grouplens.org/datasets/movielens/ml-25m.zip

My rudimentry show-recommendations.py makes recommendations based on identifying atrributes of a single movie and showing others with the same attributes. https://www.youtube.com/watch?v=eyEabQRBMQA

It uses imports numpy and pandas for data handling.

Another advancement is to use the SurPRISE library (https://surpriselib.com/), named from the acronym Simple Python RecommendatIon System Engine. VIDEO

surprise -h

A module that was compiled using NumPy 1.x cannot be run in NumPy 2.0.0 as it may crash. To support both 1.x and 2.x versions of NumPy, modules must be compiled with NumPy 2.0. Some module may need to rebuild instead e.g. with ‘pybind11>=2.12’.

If you are a user of the module, the easiest solution will be to downgrade to ‘numpy<2’ or try to upgrade the affected module. We expect that some modules will need time to support NumPy 2.

An advancement is Movielens (https://grouplens.org/datasets/movielens/) https://grouplens.org/datasets/movielens/ The load_builtin() method will offer to download the movielens-100k dataset if it has not already been downloaded, and it will save it in the .surprise_data folder in your home directory (you can also choose to save it somewhere else).

Surprise is a “scikit” (https://projects.scipy.org/scikits.html) which enables you to build your own cross-validation recommendation algorithm as well as use ready-to-use prediction algorithms such as:

- baseline algorithms,

- neighborhood methods,

- similarity measures (cosine,

Matrix factorization-based algorithms are used for collaborative filtering within recommender systems. The algorithms aim decompose a large user-item interaction matrix into smaller matrices that capture latent factors. The four common matrix factorization algorithms are SVD, PMF, SVD++, NMF:

SVD (Singular Value Decomposition) decomposes the user-item matrix into three lower-dimensional matrices:

- U to represent user factors

- V^T to represent item factors

- Σ to contain singular values

When applied to collaborative filtering, SVD aims to minimize the sum of squared errors between predicted and actual ratings for observed entries in the rating matrix.

QUESTION: The prediction for a user-item pair is calculated as: r̂ui = μ + bu + bi + qi^T * pu Where μ is the overall mean rating, bu and bi are user and item biases, and qi and pu are item and user factor vectors.

SVD++ extends SVD to incorporate both implicit and explicit ratings and implicit feedback (e.g., which items a user has rated). The prediction formula for SVD++ is:

| r̂ui = μ + bu + bi + qi^T * (pu + | N(u) | ^(-1/2) * Σj∈N(u)yj) Where N(u) represents the set of items rated by user u, and yj are item factors that capture implicit feedback. |

PMF (Probabilistic Matrix Factorization) is a model-based technique that assumes ratings are generated from a Gaussian (normal) distribution. So it factorizes the user-item matrix R into two lower-dimensional matrices: U (user factors) and V (item factors). PMF is particularly effective for large, sparse datasets and scales linearly with the number of observations.

NMF (Non-negative Matrix Factorization) factorizes a non-negative matrix V into two non-negative matrices W and H

V ≈ W * H^T Where V is the user-item rating matrix, W represents user factors, and H represents item factors. The non-negativity constraint in NMF often leads to more interpretable and sparse decompositions compared to other techniques. Key advantages of NMF include:

Reduced prediction errors compared to techniques like SVD when non-negativity is imposed

Ability to work with compressed dimensional models, speeding up clustering and data organization Automatic extraction of sparse and significant features from non-negative data vectors

These matrix factorization algorithms have proven to be effective in capturing latent factors and similarities between users and items, making them powerful tools for building recommender systems. The choice of algorithm depends on the specific requirements of the application, such as dataset characteristics, computational resources, and desired interpretability of the results.

To evaluate the performance of regression models and recommender systemsusing Singular Value Decomposition (SVD):

Evaluating RMSE, MAE of algorithm SVD on 5 split(s).

Fold 1 Fold 2 Fold 3 Fold 4 Fold 5 Mean Std

RMSE 0.9311 0.9370 0.9320 0.9317 0.9391 0.9342 0.0032

MAE 0.7350 0.7375 0.7341 0.7342 0.7375 0.7357 0.0015

Fit time 6.53 7.11 7.23 7.15 3.99 6.40 1.23

Test time 0.26 0.26 0.25 0.15 0.13 0.21 0.06

Lower RMSE and MAE values indicate better predictive accuracy.

RMSE (Root Mean Square Error) is calculated as the square root of the average of squared differences between predicted and actual values. It gives higher weight to larger errors, making it more sensitive to outliers. The formula for RMSE is:

RMSE = √(Σ(predicted - actual)^2 / n)

MAE (Mean Absolute Error) is the average of the absolute differences between predicted and actual values. It treats all errors equally, regardless of their magnitude. The formula for MAE is:

| MAE = Σ | predicted - actual | / n |

RMSE is more sensitive to large errors, while MAE provides a more intuitive measure of average error magnitude.

GCP

https://gcloud.readthedocs.io/en/latest/storage-blobs.html

https://cloud.google.com/appengine/docs/standard/python/blobstore

OpenCV

A mobile app that recognizes your hand pattern to play the Rock Paper Sissors plus Spock Lizard.

Use AI to guess what you will do next.

A mobile app that recognizes your hand pattern to play the Rock Paper Sissors plus Spock Lizard.

Use AI to guess what you will do next.

A macOS app that runs constantly to sound an alert if someone is looking over your shoulders.

https://learnopencv.com/blob-detection-using-opencv-python-c/

Scikit-Image

https://towardsdatascience.com/image-processing-with-python-blob-detection-using-scikit-image-5df9a8380ade

GIS

https://gsp.humboldt.edu/olm/Courses/GSP_318/11_B_91_Blob.html

String Handling

Regular Expressions

import re

- https://www.tutorialspoint.com/python/python_reg_expressions.htm

- https://www.udemy.com/course/python-quiz/learn/quiz/4649042#overview within quiz

Handle Strings safely

Python has four different ways to format strings.

Using f-strings to format (potentially malicious) user-supplied strings can be exploited:

from string import Template

greeting_template = Template("Hello World, my name is $name.")

greeting = greeting_template.substitute(name="Hayley")

So use a way that’s less flexible with types and doesn’t evaluate Python statements.

Data Types

In Python 2, there was an internal limit to how large an integer value could be: 2^63 - 1.

But that limit was removed in Python 3. So there now is no explicitly defined limit, but the amount of available address space forms a practical limit depending on the machine Python runs on. 64-bit

0xa5 (two character bits) represents a hexdidecimal number

3.2e-12 expresses as a a constant exponential value.

https://docs.python.org/3/tutorial/introduction.html#lists

Slicing strings

For flexibility with alternative languages such as Cyrillic (Russian) character set, return just the first 3 characters of a string:

letters = "abcdef" first_part = letters[:3]

Unicode Superscript & Subscript characters

# Specify Unicode characters:

# superscript

print("x\u00b2 + y\u00b2 = 2") # x² + y² = 2

# subscript

print(u'H\u2082SO\u2084') # H₂SO₄

Superscript

# super-sub-script.py converts to superscript:

def conv_superscript(x):

normal = "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+-=()"

super_s = "ᴬᴮᶜᴰᴱᶠᴳᴴᴵᴶᴷᴸᴹᴺᴼᴾᴾᴿˢᵀᵁⱽᵂˣʸᶻᵃᵇᶜᵈᵉᶠᵍʰᶦʲᵏˡᵐⁿᵒᵖ۹ʳˢᵗᵘᵛʷˣʸᶻ⁰¹²³⁴⁵⁶⁷⁸⁹⁺⁻⁼⁽⁾"

res = x.maketrans(''.join(normal), ''.join(super_s))

return x.translate(res)

print(conv_superscript('Convert all this2'))

# Or you can simply copy the text

Functions

Internationalization & Localization (I18N & L18N)

- BLOG

- VIDEO: Internationalization and localization in Web Applications by James Cutajar

Internationalization, aka i18n for the 18 characters between i and n, is the process of adapting coding to support various linguistic and cultural settings:

- date and time zone calculations

- numbers and currency

- Pluralization

-

Install

pip install gettext

NOTE: pip is a recursive acronym that stands for either “Pip Installs Packages” or “Pip Installs Python”.

-

Create a folder for each locale in the ./locale folder.

-

Use Lokalise utility to manage translations through a GUI. It also has a CLI tool to automate the process of managing translations. https://lokalise.com/blog/lokalise-apiv2-in-practice/

locales/ ├── el │ └── LC_MESSAGES │ └── base.po └── en └── LC_MESSAGES └── base.po -

Add the library

import gettext # Set the local directory localedir = './locale' # Set up your magic function translate = gettext.translation('appname', localedir, fallback=True) _ = translate.gettext # Translate message print(_("Hello World"))See https://phrase.com/blog/posts/translate-python-gnu-gettext/

-

Store a master list of locales supported in a Portable Object Template (POT) file, also known as a translator:

#: src/main.py:12 msgid "Hello World" msgstr "Translation in different language">>> unicode_string = u"Fuu00dfbu00e4lle" >>> unicode_string Fußbälle >>> type(unicode_string) <type 'unicode'> >>> utf8_string = unicode_string.encode("utf-8") >>> utf8_string 'Fuxc3x9fbxc3xa4lle' >>> type(utf8_string) <type 'str'>

# ALTERNATIVE: TODO: http://babel.pocoo.org/en/latest/numbers.html

#from babel import numbers

# numbers.format_decimal(.2345, locale='en_US')

# Internationalization: http://babel.pocoo.org/en/latest/dates.html

# Requires: pip install Babel

# from babel import Locale

# NOTE: Babel generally recommends storing time in naive datetime, and treat them as UTC.

# from babel.dates import format_date, format_datetime, format_time

# d = date(2007, 4, 1)

# format_date(d, locale='en') # u'Apr 1, 2007'

# format_date(d, locale='de_DE') # u'01.04.2007'

Switch language in browsers

Ensure that your program works correctly when another human language (such as “es” for Spanish, “ko” for Korean, “de” for German, etc.) is configured by the user:

A. English was selected in browser’s Preferences, but the app displays another language.

B. Another language was selected in browser’s preferences, and the app displays that language.

To simulate selecting another language in the browser’s Preferences in Firefox:

FirefoxOptions options = new FirefoxOptions();

options.addPreference("intl.accept_languages", language);

driver = new FirefoxDriver(options);

Alternately, in Chrome:

HashMap<String, Object> chromePrefs = new HashMap<String, Object>();

chromePrefs.put("intl.accept_languages", language);

ChromeOptions options = new ChromeOptions();

options.setExperimentalOption("prefs", chromePrefs);

driver = new ChromeDriver(options);

Version management

-

To create a requirements.txt file containing the latest versions:

pip freeze > requirements.txt -

Identify whether CVEs have been filed against each module in requirements.txt:

sbom ???

If you’re writing a library that you intend to distribute and use in many places (or to be used by many people), the standard approach is to write a setup.py package manifest, and in the install_requires argument of setup() declare your dependencies. You should declare only direct dependencies, and declare the range of versions your library is compatible with.

If you’ve built something that you want to deploy, or otherwise reproduce as an environment somewhere else, the standard approach is to create a requirements file containing the full (direct and transitive) dependency tree, pinned to exact versions, with package hashes included. You can do this by writing a script that strings together several pip commands, or by using the pre-made “pip-compile” script from the pip-tools project.

This pyproject.toml file will work with modern versions of setuptools (61.0 and above). It replaces the need for a separate setup.py or setup.cfg file in many cases. However, if you need more complex build configurations or have custom build steps, you may still need to use a setup.py file alongside pyproject.toml.

Remember to adjust the content according to your specific project requirements. The pyproject.toml file is designed to be human-readable and writable, making it easier to manage your project’s metadata and build configuration.

PROTIP: I’ve found Poetry to be difficult to debug https://install.python-poetry.org:

brew install poetry

-

Verify:

poetry --versionExpected response like:

Poetry (version 1.8.3) -

Initialize to be prompted to create a pyproject.toml file:

poetry init -

Run based on the pyproject.toml

poetry add requests --no-interaction poetry update requests -

Run based on the pyproject.toml

poetry export -f requirements.txt --output requirements.txtInstead of

[build-system] requires = ["setuptools>=61.0"] build-backend = "setuptools.build_meta"

Excel handling using Dictionary object

Alternately, the Python library to work with Excel spreadsheets translates between Excel cell addresses (such as “A1”) and zero-based Python array tuple:

str = xl_rowcol_to_cell(0, 0, row_abs=True, col_abs=True) # $A$1

(row, col) = xl_cell_to_rowcol('A1') # (0, 0)

column = xl_col_to_name(1, True) # $B

However, if you want to avoid adding a dependency, this function defines a dictionary to convert an Excel column number to a number:*

def letter_to_number(letters):

letters = letters.lower()

dictionary = {'a':1,'b':2,'c':3,'d':4,'e':5,'f':6,'g':7,'h':8,'i':9,'j':10,'k':11,'l':12,'m':13,'n':14,'o':15,'p':16,'q':17,'r':18,'s':19,'t':20,'u':21,'v':22,'w':23,'x':24,'y':25,'z':26}

strlen = len(letters)

if strlen == 1:

number = dictionary[letters]

elif strlen == 2:

first_letter = letters[0]

first_number = dictionary[first_letter]

second_letter = letters[1]

second_number = dictionary[second_letter]

number = (first_number * 26) + second_number

elif strlen == 3:

first_letter = letters[0]

first_number = dictionary[first_letter]

second_letter = letters[1]

second_number = dictionary[second_letter]

third_letter = letters[2]

third_number = dictionary[third_letter]

number = (first_number * 26 * 26) + (second_number * 26) + third_number

return number

REMEMBER: Square brackets are used to reference by value.

Instead of defining a dictionary, you can use a property of the ASCII character set, in that the Latin alphabet begins from its 65th position for “A” and its 97th character for “a”, obtained using the ordinal function:

ord('a') # returns 97

ord('A') # returns 65

This returns ‘a’ :

chr(97)

More dictionaries:

# Eastern European countries: SyntaxError: invalid character in identifier

ee_countries={"Ukraine": "43.7M", "Russia": "143.8M", "Poland": "38.1M", "Romania": "19.5M", "Bulgaria": "6.9M", "Hungary": "9.6M", "Moldova": "4.1M"}

float(ee_countries["Moldova"].rstrip("M")) # 4.1

ee_countries.get("Moldova") # 4.1M

len(ee_countries.items()) # 7 are immutable in dictionary

min(ee_countries.items()) # ('Bulgaria', '6.9M') the smallest country

max(ee_countries.values()) # largest country = 9.6M ?

max(ee_countries.keys()) # largest key length = Ukraine

sorted(ee_countries.keys(),reverse=True) # ['Ukraine', 'Russia', 'Romania', 'Poland', 'Lithuania', 'Latvia', 'Hungary', 'Bulgaria']

del ee_countries["Estonia"]

ee_countries.pop["Bulgaria"]

ee_countries["Latvia"] = "1.9M"

ee_countries.update[['Lithuania', '2.8M'],['Belarus' , '9.4M']]

ee_countries.popitem() # remove item last added

len(ee_countries.items()) # 8 are immutable in dictionary

ee_countries["Bulgaria"]="7M"

ee2=ee_countries.copy()

ee_countries.clear() # remove all

print(ee_countries) # {} means empty

https://www.codesansar.com/python-programming-examples/sorting-dictionary-value.htm

File open() modes

The Python runtime does not enforce type annotations introduced with Python version 3.5. But type checkers, IDEs, linters, SASTs, and other tools can benefit from the developer being more explicit.

Use this type checker to discover when the parameter is outside the allowed set and warn you:

MODE = Literal['r', 'rb', 'w', 'wb']

def open_helper(file: str, mode: MODE) -> str:

...

open_helper('/some/path', 'r') # Passes type check

open_helper('/other/path', 'typo') # Error in type checker

BTW Literal[…] was introduced with version 3.8 and is not enforced by the runtime (you can pass whatever string you want in our example).

PROTIP: Be explicit about using text (vs. binary) mode.

with open("D:\\myfile.txt", "w") as myfile:

myfile.write("Hello")

| Character | Meaning |

|---|---|

| b | binary (text mode is default) |

| t | text mode (default) |

| r | read-only (the default) |

| + | open for updating (read and write) |

| w | write-only after truncating the file |

| a | append |

| a+ | opens a file for both appending and reading at the same time |

| x | open for exclusive creation, failing if file already exists |

| U | universal newlines mode (used to upgrade older code) |

myfile.write() returns the count of codepoints (characters in the string), not the number of bytes.

myfile.read(3) returns 3 line endings (\n) in string lines.

myfile.readlines() returns a list where each element of the list is a line in the file.

myfile.truncate(12) keeps the first 12 characters in the file and deletes the remainder of the file.

myfile.close() to save changes.

myfile.tell() tells the current position of the cursor.

File Copy commands

The shutil package provides fine-grained control for copying files:

import shutil

This table summarizes the differences among shutil commands:

| Dest. dir. | Copies metadata | Preserve permissions | Accepts file object | |

|---|---|---|---|---|

| shutil.copyfile | - | - | - | - |

| shutil.copyfileobj | - | - | - | Yes |

| shutil.copy | Yes | - | Yes | - |

| shutil.copy2 | Yes | Yes | Yes | - |

See https://docs.python.org/3/library/filesys.html

File Metadata

Metadata includes Last modified and Last accessed info (mtime and atime). Such information is maintained at the folder level.

For all commands, if the destination location is not writable, an IOError exception is raised.

-

To copy a file within the same folder as the source file:

shutil.copyfile(src, dst)

buffer cannot be when copying to another folder.

-

To copy a file within the same folder and buffer file-like objects (with a read or write method, such as StringIO):

shutil.copyfileobj(src, dst)

Notice both individual file copy commands do not copy over permissions from the source file. Both folder-level copy commands below carry over permissions.

CAUTION: folder-level copy commands do not buffer.

-

PROTIP: To copy a file to another folder and retain metadata:

file_src = 'source.txt' f_src = open(file_src, 'rb') file_dest = 'destination.txt' f_dest = open(file_dest, 'wb') shutil.copyfileobj(f_src, f_dest)

The destination needs to specify a full path.

-

To copy a file to another folder and NOT retain metadata:

shutil.copy2(src, "/usr", *, follow_symlinks=True)

-

You can use the operating system shell copy command, but there is the overhead of opening a pipe, system shell, or subprocess, plus poses a potential security risk.

# In Unix/Linux os.system('cp source.txt destination.txt') \# https://docs.python.org/3/library/os.html#os.system status = subprocess.call('cp source.txt destination.txt', shell=True) # In Windows os.system('copy source.txt destination.txt') status = subprocess.call('copy source.txt destination.txt', shell=True) \# https://docs.python.org/3/library/subprocess.html -

Pipe open has been deprecated. https://docs.python.org/3/library/os.html#os.popen

# In Unix/Linux os.popen('cp source.txt destination.txt') # In Windows os.popen('copy source.txt destination.txt')

Error Exception handling

Handle file not found exception : :

# if file doesn't exist in folder, create it:

import os

import sys

def make_at(path p, dir_name)

original_path = os.getcwd()

try:

os.chdir(path)

os.makedir(dir_name)

except OSError as e:

print(e, file=sys.stderr)

raise

finally: #clean-up no matter what:

os.chdir(original_path)

Operating system

There are platform-specific modules:

- Windows msvcrt (Visual C run-time)

- MacOS sys, tty, termios, etc.

To determine what operating system to wait for a keypress, use sys.platform, which has finer granularity than sys.name because it uses uname:

# https://docs.python.org/library/sys.html#sys.platform

from sys import platform

if platform == "linux" or platform == "linux2":

# linux

elif platform == "darwin":

# MacOS

elif platform == "win32":

# Windows

elif platform == "cygwin":

# Windows running cygwin Linux emulator

http://code.google.com/p/psutil/ to do more in-depth research.

PROTIP: This is an example of Python code issuing a Linux operating system command:

if run("which python3").find("venv") == -1:

# something when not executed from venv

SECURITY PROTIP: Avoid using the built-in Python function “eval” to execute a string. There are no controls to that operation, allowing malicious code to be executed without limits in the context of the user that loaded the interpreter (really dangerous):

import sys

import os

try:

eval("__import__('os').system('clear')", {})

#eval("__import__('os').system(cls')", {})

print "Module OS loaded by eval"

except Exception as e:

print repr(e)

Command generator

Create custom CLI commands by parsing a command help text into cli code that implements it.

Brilliant.

See docopt from https://github.com/docopt/docopt described at http://docopt.org

CLI code enhancement

Python’s built-in mechinism for coding Command-line menus, etc. is difficult to understand. So some have offered alternatives:

- cement - CLI Application Framework for Python.

- click - A package for creating beautiful command line interfaces in a composable way.

- cliff - A framework for creating command-line programs with multi-level commands.

- docopt - Pythonic command line arguments parser.

- python-fire - A library for creating command line interfaces from absolutely any Python object.

- python-prompt-toolkit - A library for building powerful interactive command lines.

Handling Arguments

For parsing parameters supplied by invoking a Python program, the command-line arguments and options/flags:

- python myprogram.py -v -LOG=info

The argparse package comes with Python 3.2+ (and the optparse package that comes with Python 2), it’s difficult to understand and limited in functionality.

https://www.geeksforgeeks.org/argparse-vs-docopt-vs-click-comparing-python-command-line-parsing-libraries/

Alternatives: to Argparse are Docopt, Click, Client, argh, and many more.

Instead, Dan Bader recommends the use of click.pocoo.org/6/why click custom package (from Armin Ronacher).

Click is a Command Line Interface Creation Kit for arbitrary nesting of commands, automatic help page generation. It supports lazy loading of subcommands at runtime. It comes with common helpers (getting terminal dimensions, ANSI colors, fetching direct keyboard input, screen clearing, finding config paths, launching apps and editors, etc.)

Click provides decorators which makes reading of code very easy.

The “@click.command()” :

\# cli.py

import click

@click.command()

def main():

print("I'm a beautiful CLI ✨")

if __name__ == "__main__":

main()

Python in the Cloud

On AWS:

Tutorials:

- Intro to Boto3

- https://linuxacademy.com/howtoguides/posts/show/topic/14209-automating-aws-with-python-and-boto3 has a whole video course

- Python, Boto3, and AWS S3: Demystified by Ralu Bolovan

- DataCamp’s intro to AWS and Boto3 VIDEO

- Johnny Chiver’s Beginner’s Guide makes use of Cloud9 in his main.py:

import boto3

s3_client = boto3.client('s3')

s3_client.create_bucket(Bucket="johnny-chivers-test-1-boto", CreateBucketConfiguration={'LocationConstraint':'eu-west-1'})

response = s3_client.list_buckets()

print(response)

- Sandip Das’s Boto3 with CI CD

- Automating AWS IAM user creation by Prashant Kakhera

- AWS has App Runner Overview

Hands-on lab

On Azure:

- Microsoft Azure Overview: Introduction series by Alex at Sigma Coding references https://github.com/areed1192/azure-sql-data-project covers Azure (Serverless) Functions in Python

- https://docs.microsoft.com/python/azure/

- https://azure.microsoft.com/resources/samples/?platform=python

- https://github.com/Azure/azure-sdk-for-python/wiki/Contributing-to-the-tests

- https://azure.microsoft.com/en-us/support/community/

- https://portal.azure.com/

- Sign in

- https://portal.azure.com/#view/Microsoft_Azure_Billing/SubscriptionsBlade

-

https://aka.ms/azsdk/python/all lists available packages.

pip install azure has been deprecated from https://github.com/Azure/azure-sdk-for-python/pulls

New Program Authorization

PROTIP: Each Azure services have different authenticate.

-

Install Azure CLI for MacOS:

brew install azure-cli

https://www.cbtnuggets.com/it-training/skills/python3-azure-python-sdk by Michael Levan https://www.youtube.com/watch?v=we1pcMRQwD8

from azure.cli.core import get_default_cli as azcli # Instead of > az vm list -g Dev2 azcli().invoke(['vm','list','-g', 'Dev2'])

###

Using Digital Blueprints with Terraform and Microsoft Azure

Sets: Day of week Set handling

set([3,2,3,1,5]) # auto-renumbers with duplicates removed

day_of_week_en = ["Sun","Mon","Tue","Wed","Thu","Fri","Sat"]

day_of_week_en.append("Luv")

days_in_week=len(day_of_week_en)

print(f"{days_in_week} days a week" )

print(day_of_week_en)

x=0

for index in range(8):

print("{0}={1}".format(day_of_week_en[x],x))

x += 1

Lists

Use a list instead for a collection of similar objects.

Prefix what to print with an asterisk so it is passed as separate values so a space is added in between each value.

li = [10, 20, 30, 40, 50] li = list(map(int, input().split())) print(*li)

Tuples

Values are passed to a function with a single variable. So to multiple values of various types to or from a function, we use a tuple - a fixed-sized collection of related items (akin to a “struct” in Java or “record”).

PROTIP: When adding a single value, include a comma at the end to avoid it being classified as a string:

-

REMEMBER: When storing a single value in a Tuple, the comma at the end makes it not be classified as a string:

mytuple=(50,) type(mytuple)

<class 'tuple'>

-

Store several items in a single variable:

person = ('john', 'doe', 40) (a, b, c) = person person a person[0::2] # every 2 from 2nd item = ('john', 40) person.index(40) # index of item containing 40 = 2

Range

range object and property-based unit testing.

myrange=range(3) type(myrange) myrange # range(0, 3) print(myrange) # range(0, 3) list(myrange) # [0, 1, 2] from zero myrange=range(1,5) list(myrange) # [1, 2, 3, 4] # excluding 5! myrange=range(3,15,2) list(myrange) # [3, 5, 7, 9, 11, 13] # skip every 2 list(myrange)[2] # 7 print( range(5,15,4)[::-1] ) # range(13, 1, -4)

<class ‘range’>

List comprehension

squares = [x * x for x in range(10)]

would output:

[0, 1, 4, 9, 16, 25, 36, 49, 64, 81]

Classes and Objects

- https://www.learnpython.org/en/Classes_and_Objects

- https://app.pluralsight.com/library/courses/core-python-classes-object-orientation

- The Playbook of code shown on 2 hr VIDEO: What Does It Take To Be An Expert At Python? by James Powell (@dontusethiscode) at the PyData conference.

Encapsulation is a software design practice of bundling the data and the methods that operate on that data.

Methods encode behavior (programmed logic) of an object and are represented by functions.

Attributes encode the state of an object and are represented by variables.

MEMONIC: Scopes: LEGB

- Local - Inside the current function

- Enclosing - Inside enclosing functions

- Global - At the top level of the module

- Built-in - In the special builtins module

Metaclasses

metaclasses: 18:50

metaclasses(explained): 40:40

Decorators

- VIDEO: Python Decorators 1: The Basics (in Jupyter notebook)

- VIDEO

- https://www.youtube.com/watch?v=yNzxXZfkLUA

- https://app.pluralsight.com/course-player?clipId=a5072421-b21f-4043-8164-e148e401492b

The string starting with “@” before a function definition

Decorators allow changes in behavior without changing the code.

Decorators take advantage of Python being live dynamically compiled.

There are limitations, though.

By default, functions within a class need to supply “self” as the first parameter.

class MyClass:

attribute = "class attribute"

...

def afunction(self,text_in):

cls.attribute = text_in

VIDEO: However, decorator @classmethod enable “cls” to be accepted as the first argument:

def afunction(self,text_in):

cls.attribute = text_in

The @classmethod is used for access to the class object to call other class methods or the constuctor.

There is also @staticmethod when access is not needed to class or instance objects.

Protocols

- Collection

- Container

- Hashtable

- Iterable

- Reversible

- Sequence

- Sized

Generators

- VIDEO

- https://www.youtube.com/watch?v=bD05uGo_sVI

- https://www.youtube.com/watch?v=vBH6GRJ1REM Python dataclasses will save you HOURS, also featuring attrs

generator: 1:04:30

dunders with Context Manager

“For repetitive set up and tear down, use Context Managers”. -VIDEO by Doug Mercer

When a client is used in Python code, it must be closed as well. Context manager is a language feature of Python that takes care of things when you enter and exit the context.

with open("myfile.txt", r) as f:

contents = f.read()

double underscores (“dunders”) before and after each name.

enter, exit,

init, repr, len, hash, add, sub,

and, reversed, contains, format, iter, call,

Magic methods getitem, len, etc. make you code look like it’s part of the library.

Make it Iterable.

context manager: 1:22:37

Secure coding

https://snyk.io/blog/python-security-best-practices-cheat-sheet/

-

Always sanitize external data

-

Scan your code

-

Be careful when downloading packages

-