bomonike

Overview

What is AI?

AI personality Bernard Marr identified four evolving types of AI:

- “Reactive” machines (such as spam filters and the Netflix recommendation engine) cannot learn or conceive of the past or future, so they respond to identical situations in exactly the same way every time.

- “Limited memory” AI absorbs training data and improves over time based on its experience, using historical data to make predictions. It’s similar to the way the human brain’s neurons connect. The deep-learning algorithms in ChatGPT, widely released in 2022, are the AI that is widely used and being perfected today.

- “Theory of mind” is when AI acquires decision-making capabilities equal to humans’, can recognize and remember emotions, and adjusts its behavior based on those emotions. This was realized in 2025 with the “reasoning” and “Chain of Thought” capabilities of LLMs.

- “Self-aware” AI, also called artificial superintelligence (ASI), has a “sentient” understanding of its own needs and desires.

Stages of AI

The 10 stages of AI capabilities (VIDEO):

- Programmatic (such as code in Python, Java, C#, programs) referencing rules (even dynamic rules)

- Discriminative Intelligence, which discriminates between win/loss, faulty/non-faulty, herb/tree, living/non-living, etc. Such AI systems also perform tasks like classification, clustering, and regression analysis. Created by “content-based and Retention” systems (which adapt by learning from data: RNN, LSTM, etc.).

- Narrow domain or Expert AI systems (on specific tasks: Watson, DeepMind, AlphaGo)

- Reasoning AI systems (simulate human reasoning, as in self-driving cars)

- Self-aware AI systems (reflect on internal states: introspection)

- Artificial General Intelligence (AGI) human-like intelligence - flexibility at various activities

- Artificial Super Intelligence (ASI)

- Transcendent AI systems (from book: Apocalyptic AI - merging with humans)

- Cosmic AI (extends beyond Earth)

- God-like AI (omnipresent, omniscient, omnipotent)

AI Industry Segments and Companies

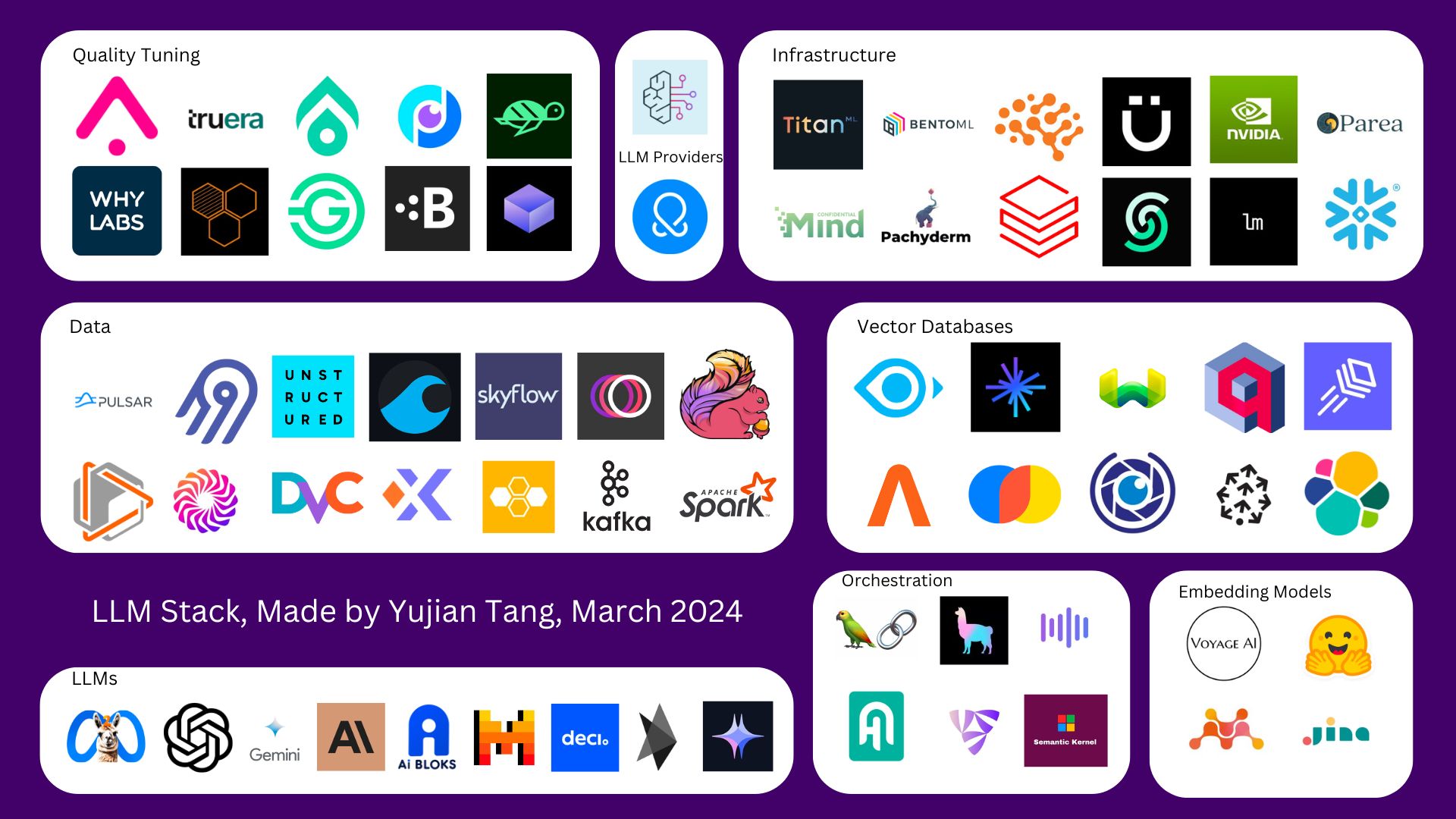

Yujian Tang’s list & chart on Medium lists these industry segments:

- Quality Tuning

- LLMs

- LLM Providers

- Infrastructure

- Data

- Vector Databases

- Orchestration

- Embedding Models

- Sakana AI’s Transformer Squared self-learns new tasks

Makers of LLM Foundation Models:

- OpenAI

- Anthropic

- MAI (Microsoft AI)

- IBM

- AWS

Glossary


A

- Ablation Study: The process of removing components from an AI system to analyze their impact on performance.

- ACP = Agent Communication Protocol: IBM’s Linux Foundation project to standardize a RESTful API for managing and executing agents, supporting synchronous, asynchronous, and streaming interactions between AI agents, both within IBM’s BeeAI (i-am-bee) and externally.

- AGI = Artificial General Intelligence: A hypothetical AI system that can perform any intellectual task that a human can, with flexibility across various activities. Often considered the ultimate goal of AI research.

- ANI = Artificial Narrow Intelligence: AI systems designed to perform a specific task, such as facial recognition or language translation, but not capable of general human-like intelligence.

- API = Application Programming Interface: Facilitates communication across applications. APIs help to extract and share data using a set of definitions and protocols.

- AIMS = Artificial Intelligence Management System: Defined by ISO/IEC Standard 42001:2023 ($311) at https://www.iso.org/standard/81230.html

B

- Bias: Systematic prejudice within AI algorithms or models, often resulting from biased training data.

- Bootstrapping Language-Image Pre-training (BLIP): An AI model used to perform multimodal tasks like visual question answering, image-text retrieval, and image captioning. It is a pre-training framework for unified vision-language understanding and generation.

- BlenderBot: An AI chatbot that can converse naturally with people and takes direct feedback to improve its responses.

- BST = Belief State Transformer: An LLM from Microsoft AI that predicts both the token after a prefix and the token before a suffix, which enables planning and decision-making. Prefix and suffix tokens together make up the belief state.

C

- Chatbot: A computer program that simulates human-like conversation with an end user through text or voice interactions. Though not all chatbots are equipped with artificial intelligence (AI), modern chatbots increasingly use conversational AI techniques like natural language processing (NLP) to make sense of the user’s questions and automate their responses.

- ChatGPT: A chatbot developed by OpenAI that uses large language models (LLMs) to let users interact with it and get the responses they want.

- CNN = Convolutional Neural Network: A type of deep learning model used primarily for image recognition tasks.

- CodeT5: A text-to-code seq2seq model developed by Salesforce Research, trained on a large data set of text and code. CodeT5 is the first pre-trained programming language model that is both code-aware and encoder-decoder based.

- Cascading Style Sheets (CSS): A style sheet language used to lay out and style web pages.

- Completion: The output an LLM generates after processing a user prompt.

- CoT = Chain-of-Thought: A reasoning technique in AI that breaks a problem down into smaller, more manageable steps and then uses those steps to solve the problem.

- CUA = Computer-Using Agent: The model behind OpenAI’s Operator agent, which controls your computer: it navigates software interfaces, executes tasks, and automates workflows.

- CUDA = Compute Unified Device Architecture: A proprietary parallel computing platform and API developed by NVIDIA to enable high-performance computing on its GPUs.

D

- Deep learning: A type of machine learning focused on training computers to perform tasks by learning from data. It uses artificial neural networks with many layers.

- Distillation: The technique of training a smaller “student” model on knowledge extracted from a larger “teacher” LLM, for example by asking the teacher millions of questions and learning from its answers (which OpenAI alleged DeepSeek did with OpenAI’s models).
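To make the student/teacher idea concrete, here is a minimal sketch of the classic distillation loss: the student is trained so that its temperature-softened output distribution matches the teacher’s. It assumes you already have both models’ raw scores (logits) for one input; the function names, the temperature of 2.0, and the sample logits are illustrative assumptions, not a description of how any particular lab distilled its models.

```python
import math


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; a higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) between temperature-softened distributions.

    Multiplying by temperature**2 is the usual convention for keeping
    gradient magnitudes comparable across temperatures.
    """
    p = softmax(teacher_logits, temperature)  # teacher "soft labels"
    q = softmax(student_logits, temperature)  # student predictions
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


teacher = [4.0, 1.0, 0.5]
print(distillation_loss(teacher, teacher))          # identical outputs: 0.0
print(distillation_loss(teacher, [0.5, 1.0, 4.0]))  # mismatched outputs: a positive loss
```

In real training this loss is minimized with respect to the student’s weights, often mixed with an ordinary loss on ground-truth labels.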

E

- ENS = Ethereum Name Service: A decentralized naming protocol (defined by Vitalik Buterin in 2013 and launched in 2017 by Nick Johnson and Alex Van de Sande) built on the Ethereum blockchain. ENS Registry smart contracts manage .eth domains and their TTL (Time To Live). Like DNS, ENS Resolver smart contracts map human-readable names to 42-character hexadecimal cryptocurrency addresses, which is less error-prone than typing the addresses. Names are auctioned. ENS tokens can be purchased on Coinbase, Binance Smart Chain (BSC), SushiSwap, and Uniswap.

ENS domains function as NFTs (Non-Fungible Tokens) and can be bought, sold, and traded. The system also has its own governance token, ENS, which allows holders to participate in the protocol’s decision-making process.

F

- Falcon: A large language model developed by the Technology Innovation Institute (TII). Its variant, falcon-7b-instruct, is a 7-billion-parameter model based on a decoder-only architecture.

- Foundation models: AI models with broad capabilities that can be adapted to create more specialized models or tools for specific use cases.

G

- GAN = Generative Adversarial Network: A type of generative model made of two neural networks, a generator and a discriminator. The generator is trained on vast data sets to create samples such as text and images; the discriminator tries to distinguish whether a sample is real or fake.

- Generative AI: AI models capable of generating new content, such as images, music, or text, based on patterns learned from existing data.

- Generative AI models: Models that can understand the context of input content to generate new content. In general, they are used for automated content creation and interactive communication.

- GPT = Generative Pre-trained Transformer: A series of large language models developed by OpenAI, designed to understand language by combining two concepts: pre-training and transformers.

- Google Flan: An encoder-decoder foundation model based on the T5 architecture.

- Gradio: An open-source Python package for building demos or web applications for machine learning models. It helps to create UIs to demo and deploy models and share them easily.

- Groundedness: The extent to which a language model’s response is factual, that is, rooted, connected, or anchored in reality or a specific context rather than invented.

- GRU = Gated Recurrent Unit: A type of recurrent neural network that uses gating mechanisms to control the flow of information through the network.
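A GRU’s gates are just a few lines of arithmetic. The sketch below runs a single-unit (scalar) GRU over a short sequence. The weight values are hypothetical placeholders; real GRUs use weight matrices learned in training, and libraries differ on whether the update gate z weights the old state or the new candidate.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def gru_step(x, h, w):
    """One GRU time step for a single hidden unit.

    x: current input, h: previous hidden state,
    w: dict of hypothetical scalar weights and biases.
    """
    # Update gate: how much of the state to replace with new content.
    z = sigmoid(w["wz"] * x + w["uz"] * h + w["bz"])
    # Reset gate: how much of the old state to use when proposing new content.
    r = sigmoid(w["wr"] * x + w["ur"] * h + w["br"])
    # Candidate state built from the input and the reset-scaled old state.
    h_tilde = math.tanh(w["wh"] * x + w["uh"] * (r * h) + w["bh"])
    # Interpolate between the old state and the candidate.
    return (1.0 - z) * h + z * h_tilde


def run_gru(sequence, w, h0=0.0):
    """Feed a sequence through the GRU and return every hidden state."""
    states, h = [], h0
    for x in sequence:
        h = gru_step(x, h, w)
        states.append(h)
    return states


weights = {"wz": 0.5, "uz": 0.5, "bz": 0.0,
           "wr": 0.5, "ur": 0.5, "br": 0.0,
           "wh": 1.0, "uh": 1.0, "bh": 0.0}
print(run_gru([1.0, 0.5, -1.0], weights))
```

Setting the update-gate bias very negative keeps z near 0, so the unit ignores new input and holds its state; that is the “control the flow of information” in the definition.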

H

- Hugging Face: An AI platform that allows open-source scientists, entrepreneurs, developers, and individuals to collaborate and build personalized machine learning tools and models. It provides a way to evaluate different models.

- HTML = HyperText Markup Language: A standard markup language consisting of elements that create web pages, structure them, and help display them. An HTML element is defined by a start tag, some content, and an end tag.

I

- IBM Watson: An integrated AI and data platform with a set of AI assistants designed to scale and accelerate the impact of AI with trusted data across businesses.

- IBM Cloud Code Engine: A fully managed, serverless platform that runs source code, container images, and batch jobs while managing and securing the underlying infrastructure.

J

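- JEPA = Joint Embedding Predictive Architecture: A macro-architecture offered as an alternative to GPTs’ next-word prediction: instead of predicting the next word, it predicts abstract representations (embeddings) of the input. It arranges modules running Transformers and other AI modules.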

L

- LLM = Large Language Model: A deep learning model trained on substantial text data to learn the patterns and structures of language. LLMs can perform language-related tasks, including text generation, translation, summarization, sentiment analysis, and more.

- Llama: A large language model from Meta AI.

- LlamaIndex: A flexible data framework to connect custom data sources to large language models using a central interface.

- LangChain: A framework designed to simplify the creation of LLM applications for document analysis, document summarization, chatbot building, and code analysis.

- Lemmatization: The process of reducing words to their base or dictionary form (lemma), for example “running” to “run”.

- LIME = Local Interpretable Model-agnostic Explanations: A technique that explains individual predictions by approximating the model locally with a simpler, interpretable model. A service provided by Amazon SageMaker Model Monitor.

- LPU = Language Processing Unit: An application-specific integrated circuit (ASIC) from groq.com for efficient, high-performance inference of AI workloads that use large language models (LLMs).

M

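- MAI = Microsoft AI: Microsoft’s AI platform that provides tools and services for building and deploying AI applications.

- MFU = Model FLOPs Utilization: A metric of training (or inference) efficiency: the floating-point operations per second (FLOP/s) a model actually achieves, divided by the theoretical peak FLOP/s of the hardware.

- ML = Machine Learning: A subset of AI that makes it possible for computers to learn from data, identify patterns, and improve their performance over time.

- MLA = Multi-head Latent Attention: An attention method, introduced in DeepSeek-V2, that compresses keys and values into a smaller latent vector, so the model keeps a much smaller key-value (KV) cache during inference while still attending to different parts of the input.

- MoE = Mixture-of-Experts: A model architecture in which a router (gating network) sends each input token to a small subset of specialized “expert” sub-networks, so only part of the model’s parameters are active for each token.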
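MFU is easiest to see with numbers. This sketch assumes the common rule of thumb of about 6 FLOPs per parameter per training token for a dense transformer; the model size, throughput, and 312 TFLOP/s peak below are hypothetical inputs, not measurements.

```python
def model_flops_utilization(n_params, tokens_per_second, peak_flops_per_second):
    """Estimate MFU for training a dense transformer.

    Assumes ~6 FLOPs per parameter per training token
    (about 2 for the forward pass and 4 for the backward pass),
    which ignores attention FLOPs.
    """
    achieved_flops_per_second = 6 * n_params * tokens_per_second
    return achieved_flops_per_second / peak_flops_per_second


# Hypothetical numbers: a 7B-parameter model training at 2,000 tokens/s
# on one accelerator whose peak is 312 TFLOP/s.
mfu = model_flops_utilization(7e9, 2_000, 312e12)
print(f"MFU = {mfu:.1%}")  # MFU = 26.9%
```

Because MFU counts only the FLOPs the model mathematically needs, work such as activation recomputation does not raise it, which makes it a fair way to compare training setups.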

N

- NLP = Natural Language Processing: A subset of artificial intelligence that enables computers to understand, interpret, manipulate, and generate human (natural) language.

- NER = Named-Entity Recognition: A subtask of information extraction that locates and classifies named entities, such as first and last names, geographic locations, ages, addresses, and phone numbers, in unstructured data sources.

O

- OpenAI Whisper: An automatic speech recognition system trained on 680,000 hours of supervised data that can transcribe speech in several languages.

P

- Perception: AI’s ability to receive and interpret information through computer vision, speech recognition, and other sensor-based inputs.

- Python: A high-level, general-purpose programming language that supports multiple programming paradigms, including structured, object-oriented, and functional programming.

- PIL = Python Imaging Library: A versatile library that adds image processing capabilities to the Python interpreter, for tasks such as reading, rescaling, and saving images in different formats.

- PPO = Proximal Policy Optimization: An actor-critic reinforcement learning algorithm from OpenAI that weighs how much better than average each action turned out to be (its advantage) and clips each policy update to keep training stable.

- PTX = Parallel Thread eXecution: A hardware-agnostic virtual instruction set architecture (ISA) used by NVIDIA’s CUDA parallel computing platform, used directly by DeepSeek. VIDEO
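PPO’s “how much better than average” idea is the advantage, and its stability trick is clipping. A minimal sketch of the clipped surrogate objective in pure Python, assuming log-probabilities under the new and old policies and the advantages have already been computed for a batch of actions; epsilon = 0.2 is a commonly used value, not a requirement.

```python
import math


def ppo_clip_objective(new_log_probs, old_log_probs, advantages, epsilon=0.2):
    """PPO's clipped surrogate objective (to be maximized).

    ratio = pi_new(a|s) / pi_old(a|s). The advantage says how much better
    than average each action turned out. Clipping the ratio to
    [1 - epsilon, 1 + epsilon] limits how far one update can move the policy.
    """
    total = 0.0
    for new_lp, old_lp, adv in zip(new_log_probs, old_log_probs, advantages):
        ratio = math.exp(new_lp - old_lp)
        clipped = min(max(ratio, 1.0 - epsilon), 1.0 + epsilon)
        # Take the pessimistic (smaller) of the unclipped and clipped terms.
        total += min(ratio * adv, clipped * adv)
    return total / len(advantages)


# One good action whose probability doubled (ratio = 2): the gain is capped.
print(ppo_clip_objective([math.log(0.4)], [math.log(0.2)], [1.0]))  # 1.2
```

Taking the minimum means the policy gets no extra credit for moving far on a good action, but is still fully penalized for moving far on a bad one.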

Q

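- Quantization: The process of mapping continuous values onto a smaller set of discrete values. Used in digital signal processing, data compression, and machine learning, where it shrinks models by storing weights in lower-precision formats such as 8-bit integers.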
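A minimal sketch of the simplest machine-learning case: symmetric 8-bit quantization of a list of weights with one shared scale. Production schemes (per-channel scales, zero points, 4-bit formats) are more involved; the function names and sample weights here are hypothetical.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization of floats to int8 codes.

    Returns the integer codes plus the scale needed to map them back.
    """
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # round() rounds half to even; clamp to the symmetric int8 range.
    codes = [max(-127, min(127, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Map int8 codes back to approximate floats."""
    return [c * scale for c in codes]


weights = [0.5, -1.27, 0.0, 0.333]
codes, scale = quantize_int8(weights)
print(codes)                      # [50, -127, 0, 33]
print(dequantize(codes, scale))   # close to the originals; each error is at most scale / 2
```

Each weight now needs 1 byte instead of 4 (for 32-bit floats), at the cost of a small rounding error.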

R

- RAG = Retrieval-Augmented Generation: An AI framework that retrieves facts from an external knowledge base to ground large language models (LLMs), giving generative models up-to-date information such as the latest research, statistics, or news.

- RL = Reinforcement Learning: A type of machine learning that helps agents learn by trial and error, receiving rewards or penalties based on their actions.

- RLVR = Reinforcement Learning with Verifiable Rewards: A reinforcement learning framework, used to train the Tulu LLMs, that builds on supervised fine-tuning (SFT) by using simple functions to provide a deterministic, binary ground-truth signal indicating whether a model’s output meets a predefined correctness criterion. This allows subject matter experts to establish clear correctness criteria without deep machine learning expertise.

- RNN = Recurrent Neural Network: A type of neural network that processes sequential, time-based data (such as stock prices or speech) by maintaining a hidden state that remembers information from previous inputs.

- RPL = Reward-Plus-Loss: A training method that uses both positive and negative rewards to improve the performance of a model.

- RRF = Reciprocal Rank Fusion: A rank aggregation method used in hybrid search systems to combine results from multiple search algorithms (like vector search and full-text search) into a single, unified ranking, prioritizing documents that consistently rank highly across different sources.
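RRF’s scoring rule is short enough to show in full: each document earns 1 / (k + rank) from every result list it appears in, and the sums are sorted. A sketch, assuming ranks start at 1 and k = 60 (the default from the paper that introduced RRF); the document IDs are made up.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists of document IDs into one ranking.

    Each document scores sum(1 / (k + rank)) over the lists it appears in,
    with rank starting at 1. A larger k flattens the advantage of top positions.
    """
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by document ID for stability.
    return sorted(scores, key=lambda d: (-scores[d], d))


vector_hits = ["doc_a", "doc_b", "doc_c"]   # e.g. from vector search
keyword_hits = ["doc_b", "doc_d", "doc_a"]  # e.g. from full-text search
print(reciprocal_rank_fusion([vector_hits, keyword_hits]))
# ['doc_b', 'doc_a', 'doc_d', 'doc_c']
```

Only ranks are used, never raw scores, so results from search engines with incompatible scoring scales can be fused directly.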

S

- SFT = Supervised Fine-Tuning: Further training of a pre-trained model on labeled examples, such as prompt-response pairs.

- Sequence-to-sequence models: A model type that uses both encoders and decoders.

- Sentiment analysis: The process of analyzing digital text to determine the emotional tone of a message. Performed on textual data such as customer feedback, it helps businesses monitor their brands.

- SHAP = SHapley Additive exPlanations: A method that attributes each feature’s contribution to an individual prediction, based on Shapley values from game theory. A service provided by Amazon SageMaker Model Monitor.

- SLAM = Simultaneous Localization And Mapping: The process, performed locally by a robot, of simultaneously determining its location and mapping its environment.

- Streamlit: An open-source framework to build and share machine learning and data science web apps. It turns data scripts into shareable web apps in minutes.
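SHAP is built on Shapley values, which can be computed exactly when there are only a few features. This brute-force sketch assumes that a feature “missing” from a coalition is replaced by a baseline value; the SHAP library itself uses faster approximations (such as KernelSHAP), so treat this as an illustration of the definition, not of that library.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley values for one prediction (feasible only for a few features).

    A feature absent from a coalition is replaced by its baseline value.
    Each feature's value is its weighted average marginal contribution
    over all coalitions of the other features.
    """
    n = len(x)

    def value(coalition):
        mixed = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(mixed)

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi += weight * (value(s | {i}) - value(s))
        phis.append(phi)
    return phis


def linear_model(v):  # hypothetical model with weights 2, 3, -1
    return 2 * v[0] + 3 * v[1] - v[2]


print(shapley_values(linear_model, [1.0, 2.0, 3.0], [0.0, 0.0, 0.0]))
```

For a linear model each value works out to weight × (x − baseline), here about [2, 6, −3], and the values always sum to the prediction minus the baseline prediction (5 here); that additivity is the “Additive” in SHAP.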

T

- Temperature: A sampling parameter that controls how random an LLM’s output is. For deterministic behavior (no variation in the generated content), the desirable value is zero; higher values produce more varied output.

- TensorFlow: A leading open-source library from Google for developing and deploying machine learning applications. It uses GPUs for the parallel matrix operations in its algebraic calculations.

- Tokenizer: A tool in natural language processing that breaks down text into smaller, manageable units (tokens), such as words or phrases, enabling models to analyze and understand the text.

- Tokenomics: The process of tokenizing, storing, and trading tokens on a blockchain.

- Training data: Data (generally, large data sets that also have examples) used to teach a machine learning model.

- Transformers: A deep learning architecture that can generate coherent and contextually relevant text. Unlike earlier sequential models, a transformer processes data in parallel, resulting in much quicker training and a solution that scales on modern hardware. Encoders (such as the one in BART) read inputs bidirectionally to generate contextual embeddings; unidirectional decoders (such as GPT) combine context with what they learned in training to generate a response one token at a time.

- Text generation: Producing text with a language model. Code-generation models trained on code from scratch help automate repetitive coding tasks.
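Temperature divides the model’s raw scores (logits) before the softmax, so low values sharpen the distribution and high values flatten it. A minimal sketch with made-up logits; treating temperature 0 as greedy decoding (always pick the top token) is an assumption, since providers implement it differently and hardware effects can still cause small variations.

```python
import math
import random


def temperature_probs(logits, temperature):
    """Softmax of logits / temperature (temperature must be > 0)."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_with_temperature(logits, temperature, rng=random):
    """Pick a token index; temperature == 0 means greedy (deterministic)."""
    if temperature == 0:
        # Always the highest-scoring token: same input, same output.
        return max(range(len(logits)), key=lambda i: logits[i])
    probs = temperature_probs(logits, temperature)
    return rng.choices(range(len(logits)), weights=probs, k=1)[0]


logits = [1.0, 3.0, 2.0]  # hypothetical next-token scores
for t in (0.5, 1.0, 2.0):
    print(t, [round(p, 3) for p in temperature_probs(logits, t)])
print(sample_with_temperature(logits, 0))  # 1 (greedy pick)
```

As the temperature rises, the top token’s probability shrinks and the others grow, which is where the extra variety comes from.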

U

- Unsupervised learning: A type of machine learning that uses algorithms to analyze and cluster unlabeled data sets. These algorithms can discover hidden patterns or data groupings without human intervention.
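k-means is a classic unsupervised algorithm: it finds groupings in data that has no labels. A minimal pure-Python sketch for 2-D points; the data points, k, iteration count, and seed are hypothetical choices.

```python
import random


def kmeans(points, k, iterations=20, seed=0):
    """Group unlabeled 2-D points into k clusters (Lloyd's k-means algorithm)."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k randomly chosen input points

    def sq_dist(p, c):
        return (p[0] - c[0]) ** 2 + (p[1] - c[1]) ** 2

    clusters = []
    for _ in range(iterations):
        # Assignment step: each point joins the cluster of its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda c: sq_dist(p, centroids[c]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its members.
        for c, members in enumerate(clusters):
            if members:  # keep the old centroid if a cluster ends up empty
                centroids[c] = (
                    sum(m[0] for m in members) / len(members),
                    sum(m[1] for m in members) / len(members),
                )
    return centroids, clusters


# Hypothetical unlabeled data: two loose groups, no labels given.
points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
centroids, clusters = kmeans(points, k=2)
print(sorted(centroids))
```

The algorithm is never told which points belong together; the two groups emerge from distances alone.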

V

- VAE = Variational Autoencoder: A generative neural network model designed to learn an efficient representation of input data by encoding it into a smaller (latent) space and decoding it back to the original space.

W

- watsonx.ai: A studio of integrated tools for working with generative AI capabilities powered by foundation models and for building machine learning models.

- watsonx.data: A massive, curated data repository that can be used to train and fine-tune models, with a state-of-the-art data management system.

- watsonx.governance: A toolkit to direct, manage, and monitor an organization’s AI activities.

X

- XAI = Explainable AI: AI whose decisions can be explained to and understood by humans, which is needed for people to trust the system.


Other AI Glossaries:

- https://docs.anthropic.com/en/docs/resources/glossary

Workflow

https://www.youtube.com/watch?v=WZeZZ8_W-M4&t=10230s Machine Learning Workflow

Explainable AI

Algorithmic Accountability Act

https://www.linkedin.com/learning/learning-xai-explainable-artificial-intelligence/

Legal reasons include:

- Article 22 (“Right to explanation”) of the GDPR (General Data Protection Regulation), which covers EU citizens worldwide, states: “The data subject shall have the right to not be subject to a decision based solely on automated processing, including profiling, which produces legal effects concerning him or her or similarly significantly affects him or her. The data controller shall implement suitable measures to safeguard the data subject’s rights and freedoms and legitimate interests, at least the right to obtain human intervention on the part of the controller, to express his or her point of view and to contest the decision.”

- U.S. Equal Credit Opportunity Act of 1974

- France: Digital Republic Act of 2016

Current XAI techniques:

- Post-hoc (after the decision is made): perturb inputs to identify their impact on outputs.

  LIME (Local Interpretable Model-Agnostic Explanations), which is model-agnostic.

- Identify the input data that led to the prediction.

  RETAIN (Reverse Time Attention), a model developed at the Georgia Institute of Technology to identify which clinical data led to a prediction of heart failure.

- Work backwards through the neural net to find the most relevant input values.

  LRP (Layer-wise Relevance Propagation)
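The post-hoc perturbation idea can be sketched in a few lines: nudge one input at a time and measure how far a black-box model’s output moves. This is a simple sensitivity check, not LIME itself (LIME additionally fits a weighted, interpretable surrogate model around the input); the credit model, its features, and the step size are hypothetical.

```python
def perturbation_importance(predict, x, delta=1.0):
    """Post-hoc, model-agnostic sensitivity check.

    predict: any black-box function from a list of numbers to a number.
    Nudges one feature at a time by delta and records how much the
    output changes. Larger score = more influential near this input.
    """
    base = predict(x)
    scores = []
    for i in range(len(x)):
        perturbed = list(x)
        perturbed[i] += delta
        scores.append(abs(predict(perturbed) - base))
    return scores


def credit_model(v):
    """Hypothetical black box: income, debt ratio, and an ignored zip-code feature."""
    income, debt_ratio, zip_code = v
    return 0.8 * income - 0.3 * debt_ratio + 0.0 * zip_code


print(perturbation_importance(credit_model, [1.0, 1.0, 1.0]))
```

A score of zero for the zip-code feature would show an auditor that this model, at least near this input, does not use it.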

ux-glossary2.csv

References

- TheAIGRID 29M views in 2024 for $216K

- AI Revolution YouTube channel 20M views in 2024 for $138K

- VIDEO: Understanding AI Jargon - Your Ultimate Cheat Sheet by

- The AI Advantage by Igor P. from Portugal sells a $97/month community

- Matthew Berman

- Prompt Engineering does deep dives into tech

- Wes Roth

- Julia McCoy

- Alex Kantrowitz “Google DeepMind CEO Demis Hassabis: The Path To AGI, Deceptive AIs, Building a Virtual Cell”

- Bloomberg Live

- CNBC Television at Davos: “Scale AI CEO Alexandr Wang on U.S.-China AI race: We need to unleash U.S. energy to enable AI boom”

- Fireship

- Stefan Bauschard from Education Disrupted: Teaching and Learning in An AI World

How to make videos:

- Invideo

- https://www.youtube.com/watch?v=OdUbl_2H8Pg Study with me

- https://www.youtube.com/watch?v=9Vf31JTzRKk Steven Thompson

Certifications

CompTIA AI Product Roadmap of certifications around AI:

- AI Essentials

- Sec AI+ for Security Engineers

- PenTest AI+ for Penetration Testers

- CySA AI+ for Security Analysts

- Data AI+ for Data Analysts

- AI SysOp+ for Systems Operations

- AI Scripting+ for Tech Support, Network Operations

- AI Architect+ for AI Systems Architects

- AI Prompt+ for GenAI Prompt Engineers

Intro to AI course

Here are the topics from the semester-long class offered by Montana Digital Academy:

Module 01 - What is Artificial Intelligence?

- Introduction to What Is Artificial Intelligence?

- Blast From the Past

- Ingenious!

- What Does It All Mean?

- Keep It Real

- Seeing Is Believing

- Lessons Learned

Module 02 - Data-Driven

- Introduction to Data Driven

- Is Your Machine Smarter Than a Fifth Grader?

- It Just Makes Sense

- It’s A Numbers Game

- Representation for Reasoning

- Ability to Learn

- Decide with Data

- Domain Knowledge

Module 03 - Dream It, Design It, Apply It!

- Introduction to Dream It, Design It, Apply It!

- AI Agents

- Good Behavior

- Ethics in AI

- Deepfakes

- Developer Responsibility

v025 ACP :ai-glossary.md

Words that AI people use.
