bomonike

This article describes a significant advancement in computer science, when

AWS’s “Automated Reasoning” service was

announced

during their December, 2024 RE:Invent conference.

This article describes a significant advancement in computer science, when

AWS’s “Automated Reasoning” service was

announced

during their December, 2024 RE:Invent conference.

VIDEO: “Amazon’s NEW AI Just Solved The HARDEST Programming Problem” by Matthew Berman

That would be hallucinations, where the AI is making stuff up, albeit based on the likelihood in what it already knows. Generative AI makes use of “Deep learning” techniques to make predictions based on applying statistical models to large datasets (Large Language Models).

If we quibble about definitions, one definition of AI is the ability of computers to appear to behave like humans. Thus, the Turing test is about a conversation.

Automated reasoning is a specific discipline of artificial intelligence (AI) that applies logical models and proofs to reason about the possible behaviors of a system or program, including states it can or will never reach.

While machine learning makes predictions and inferences. Automated reasoning provides proof from Logical deductions.

AWS Automated Reasoning for Provable Security

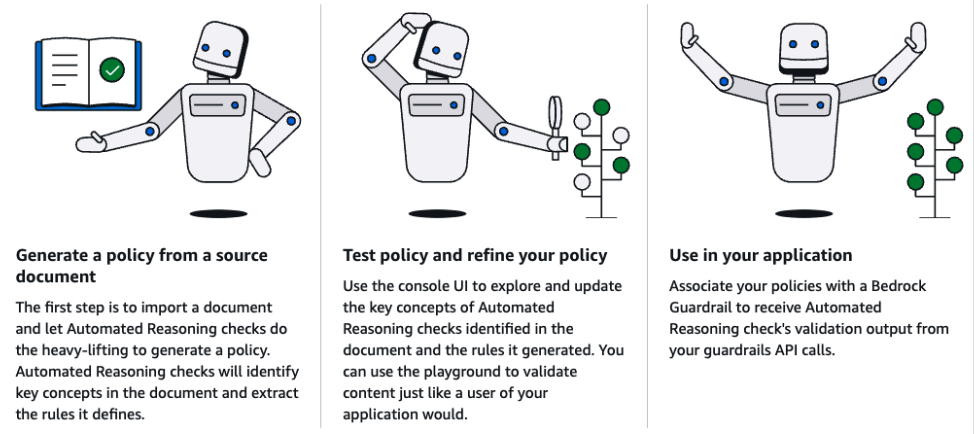

The AWS AI demo turns a written HR policy (Leave of Absence) into FMs (Foundational AI LLM Models).

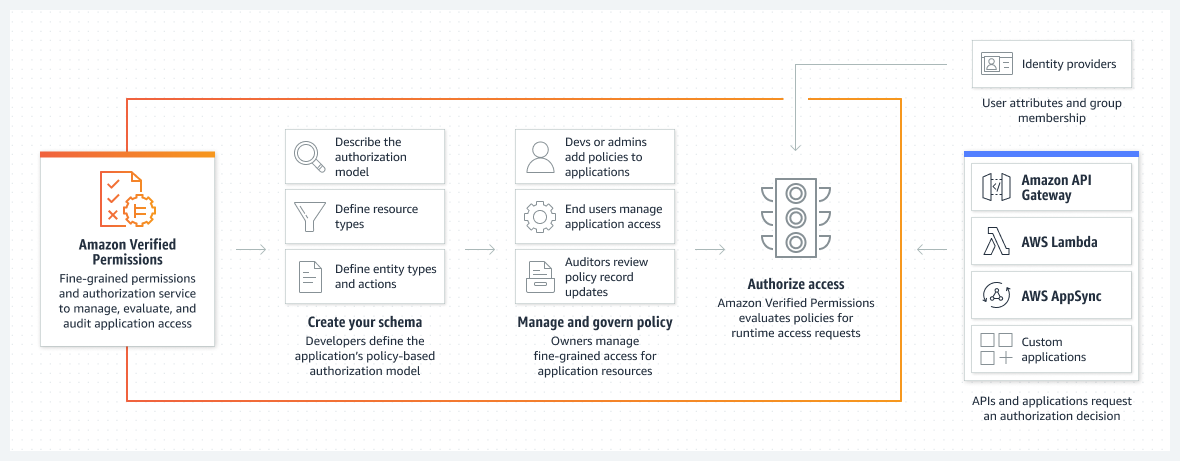

Amazon’s’ “Automated Reasoning” they categorize under “Security, Identity, & Compliance”.

-

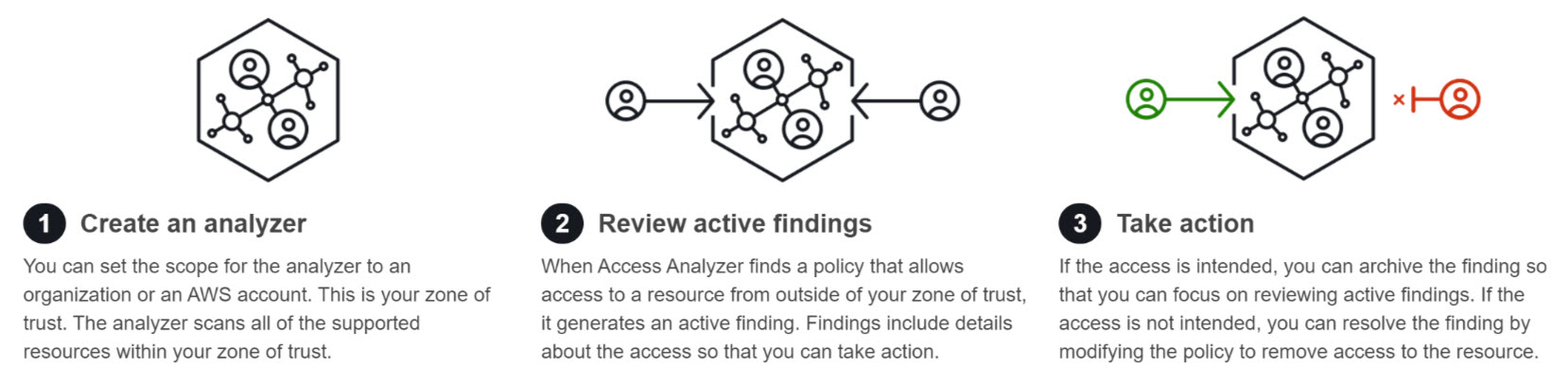

AWS Identity and Access Management (IAM) Access Analyzer ensures fine-grained least-privilege permission with automated reasoning.

This pdf

is the academics of SMT (Satisfiability Modulo Theories) solvers and the “ZELKOVA” policy analysis engine. The SMT encoding uses the theory of strings, regular expressions, bit vectors, and integer comparisons.

This pdf

is the academics of SMT (Satisfiability Modulo Theories) solvers and the “ZELKOVA” policy analysis engine. The SMT encoding uses the theory of strings, regular expressions, bit vectors, and integer comparisons. -

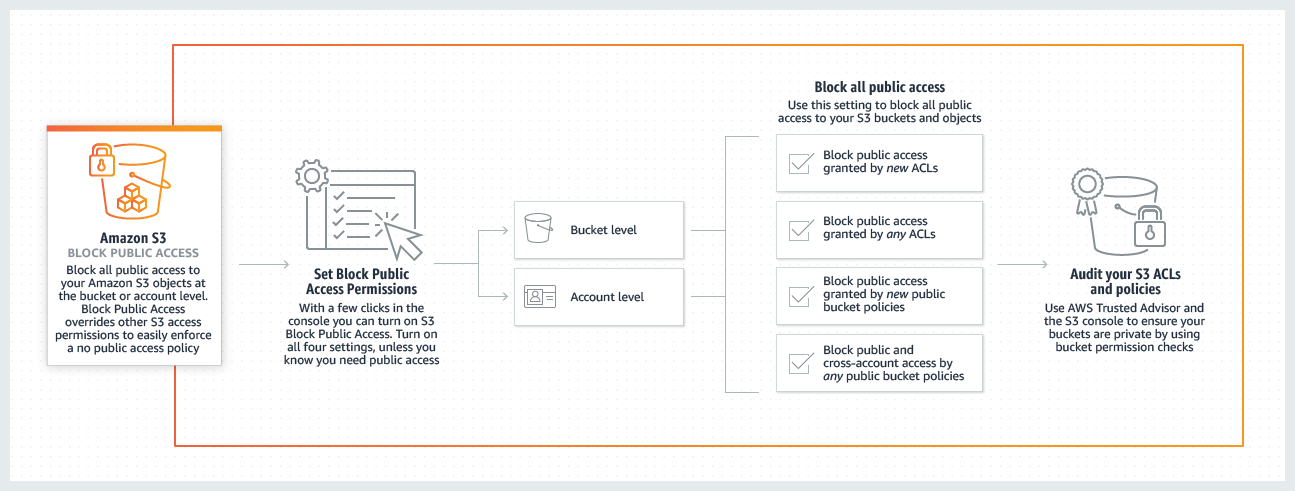

Amazon S3 Block Public Access

based on Reachability analysis

based on Reachability analysis -

Amazon Inspector Classic has a Network Reachability feature that automatically analyses your EC2 instances for potential security vulnerabilities and misconfigurations.

-

Amazon Virtual Private Cloud (Amazon VPC) Reachability Analyzer applies automated reasoning to visualize AWS networks.

AWS also calls it “Provable Security”.

Automated Reasoning is for more than security:

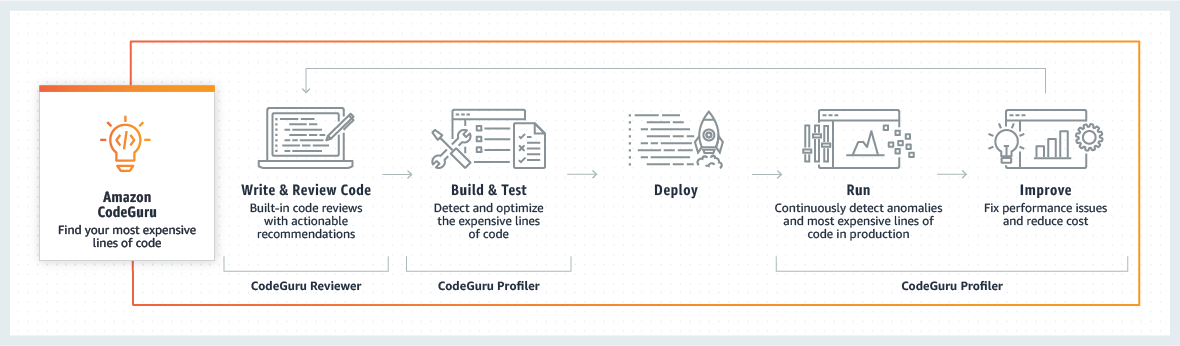

- Amazon CodeGuru Reviewer uses automated reasoning and machine learning to help developers identify software vulnerabilities.

Explainable AI!

The fancy trick is NOT that a service is able to answer questions (using GenAI).

The big deal is this: For the first (well-marketed) time, an AI system can point to explanations, which the EU has mandated for AI systems to explain the exact rules and variables it used to make a determination.

The big deal is Explainable AI.

How?

Amazon Bedrock Guardrails “evaluates prompts and model responses for Agents, Knowledge Bases, and FMs in Amazon Bedrock and self-managed or third-party FMs (Foundation Models).”

- Configure thresholds to filter undo

OBSERVE: The prompt to do this work (turn a pdf into Rules, Variables) AWS calls the “intent”.

contextual grounding.

OBSERVE: This service makes AI explainable.

The potential of this method is its applicability to Laws, Contracts, Agreements, Patents, Scientific White Papers/Peer Review, and a whole host of other written documents claiming something is true or false based on the Data.and Evidence.